Following on from the ‘interesting’ House Science Committee hearing two weeks ago, there was an excellent rebuttal, curated by ClimateFeedback, of the unsupported and often misleading claims from the majority witnesses. In response, Judy Curry has (yet again) declared herself unconvinced by the evidence for a dominant role for human forcing of recent climate changes. As before, she fails to give any quantitative argument to support her contention that human drivers are not the dominant cause of recent trends.

Her reasoning consists of a small number of plausible-sounding but ultimately unconvincing points that are nonetheless worth diving into. She summarizes her claims in the following comment:

… They use models that are tuned to the period of interest, which should disqualify them from [being] used in attribution study for the same period (circular reasoning, and all that). The attribution studies fail to account for the large multi-decadal (and longer) oscillations in the ocean, which have been estimated to account for 20% to 40% to 50% to 100% of the recent warming. The models fail to account for solar indirect effects that have been hypothesized to be important. And finally, the CMIP5 climate models used values of aerosol forcing that are now thought to be far too large.

These claims are either wrong or simply don’t have the implications she claims. Let’s go through them one more time.

1) Models are NOT tuned [for the late 20th C/21st C warming] and using them for attribution is NOT circular reasoning.

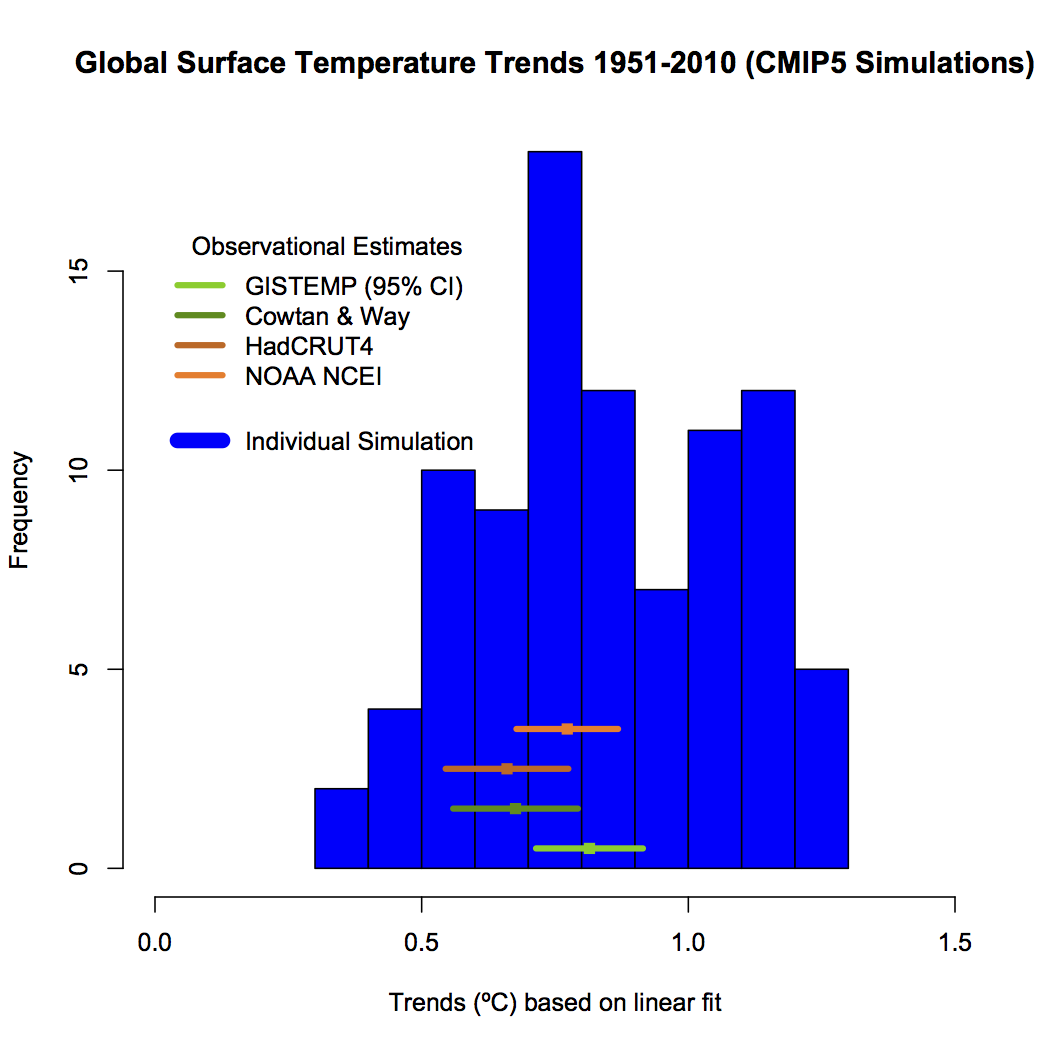

Curry’s claim is wrong on at least two levels. First, the “models used” (otherwise known as the CMIP5 ensemble) were *not* tuned to match the period of interest (the 1950-2010 trend highlighted in the IPCC reports, about 0.8°C of warming). The evidence is obvious: the trends in the individual model simulations over this period range from 0.35 to 1.29°C (or 0.84±0.45°C, 95% envelope)!

Ask yourself one question: Were these models tuned to the observed values?
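As a rough sketch of how a spread like this might be summarized: fit an ordinary least-squares trend to each simulation over 1950-2010, convert it to a total change over the period, and report the range plus an approximate 95% envelope. The envelope is assumed here to be mean ± 1.96 standard deviations, and the "runs" are synthetic placeholders, not CMIP5 output.

```python
import numpy as np

def total_trend(years, temps):
    """Total change (°C) implied by an OLS linear fit over the span of `years`."""
    slope, _intercept = np.polyfit(years, temps, 1)
    return slope * (years[-1] - years[0])

def summarize_ensemble(years, runs):
    """Return (min, max, mean, ~95% half-width) of the per-run total trends."""
    trends = np.array([total_trend(years, run) for run in runs])
    half_width = 1.96 * trends.std(ddof=1)  # assumes a roughly Gaussian spread
    return trends.min(), trends.max(), trends.mean(), half_width

rng = np.random.default_rng(0)
years = np.arange(1950, 2011)
# Hypothetical runs: linear warming of different sizes plus year-to-year noise
true_totals = [0.4, 0.6, 0.8, 1.0, 1.2]
runs = [t * (years - 1950) / 60 + rng.normal(0, 0.1, years.size) for t in true_totals]

lo, hi, mean, half = summarize_ensemble(years, runs)
print(f"range {lo:.2f} to {hi:.2f} °C; {mean:.2f} ± {half:.2f} °C")
```

An ensemble whose trends had been tuned to a single observed value would show a much narrower spread than this.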

Second, this is not how the attribution is done in any case. What actually happens is that the fingerprints of the different forcings are calculated independently of the historical runs (using subsets of the drivers) and then matched to the observations using scaling factors for the patterns generated. Scaling factors near 1 imply that the models’ expected fingerprints fit the observations reasonably well. If the models are too sensitive, or not sensitive enough, that will show up in the factors, since the patterns themselves are reasonably robust. So models that have half the observed trend, or twice as much, can still help determine the pattern of change associated with each driver. The attribution to a driver is based on the best fit of that pattern and the others, not on the mean or trend in the historical runs.
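The scaling-factor step can be sketched as an ordinary least-squares regression of the observations onto the model fingerprints. This is a simplified illustration: real detection-and-attribution studies use optimal fingerprinting with noise covariance estimated from control runs, which is omitted here, and the patterns and "observations" below are synthetic.

```python
import numpy as np

def fit_scaling_factors(fingerprints, obs):
    """Ordinary least-squares scaling factors: obs ≈ sum_i beta_i * fingerprints[i].

    fingerprints: shape (n_signals, n_points), one model-derived pattern per driver
    obs:          shape (n_points,), observations on the same space-time points
    Returns the scaling factors and the residual (what the drivers do not explain).
    """
    X = np.asarray(fingerprints).T                 # (n_points, n_signals)
    beta, *_ = np.linalg.lstsq(X, obs, rcond=None)
    residual = obs - X @ beta
    return beta, residual

rng = np.random.default_rng(42)
n = 500
t = np.linspace(0.0, 1.0, n)
# Synthetic, hypothetical fingerprints (not real model output)
anthro = t + 0.3 * np.sin(20 * t)     # trend-like human-driven pattern
natural = 0.5 * np.sin(7 * t)         # oscillating natural pattern
# Synthetic "observations": the model's anthropogenic response is 25% too large
# (true amplitude 0.8), the natural one is right (1.0), plus noise
obs = 0.8 * anthro + 1.0 * natural + rng.normal(0.0, 0.05, n)

beta, resid = fit_scaling_factors([anthro, natural], obs)
print("scaling factors:", np.round(beta, 2))
```

A factor of about 0.8 on the anthropogenic pattern says this model overdoes the response, yet its pattern still does the work of attribution; the residual is what gets compared against internal variability.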

2) Attribution studies DO account for low-frequency internal variability

Patterns of variability that don’t match the predicted fingerprints from the examined drivers (the ‘residuals’) can be large, especially on short timescales, and in most cases look like the familiar modes of internal variability: ENSO/PDO, the North Atlantic multidecadal oscillation, etc. But the crucial thing is that these residuals have small trends compared to the trends from the external drivers. We can also put these modes directly into the analysis, with little overall difference to the results.

3) No credible study has suggested that ocean oscillations can account for the long-term trends

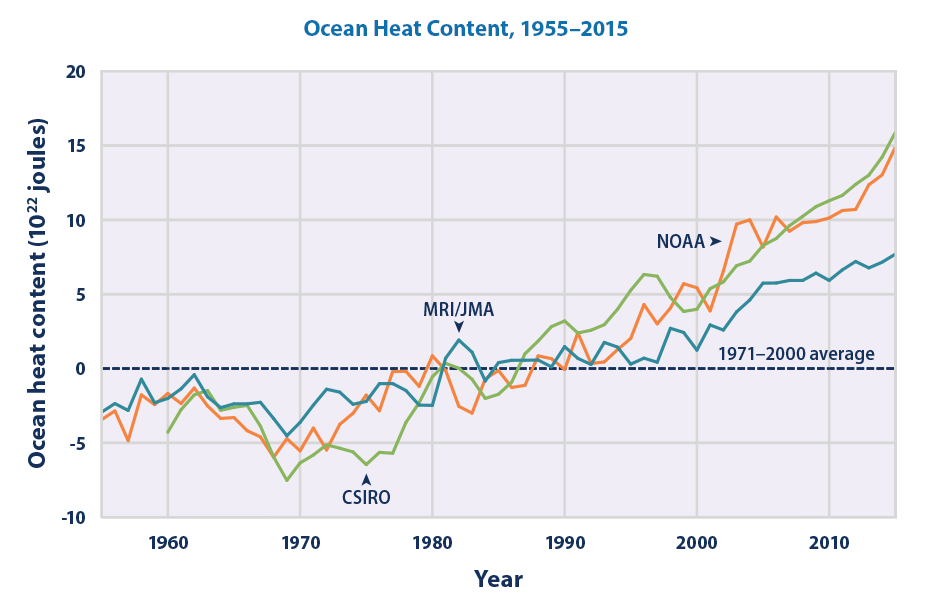

The key observation here is the increase in ocean heat content over the last half century (the figure above shows three estimates of the changes since 1955). This absolutely means that more energy has been coming into the system than leaving it.

Now this presents a real problem for claims that ocean variability is the main driver. To see why, note that changes in ocean dynamics only move energy around: to warm somewhere, they have to cool somewhere else. So posit an initial dynamic change of ocean circulation that warms the surface (and cools below or in other regions). To bring more energy into the system, that surface warming would have to cause the top-of-the-atmosphere radiation balance to change positively, but that would add to warming, amplifying the initial perturbation and leading to a runaway instability. There are really good reasons to think this is unphysical.

Remember too that ocean heat content increases were a predicted consequence of GHG-driven warming well before the ocean data were clear enough to demonstrate it.
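A toy two-box (surface and deep ocean) energy-balance model makes the redistribution argument concrete. The heat capacities, exchange coefficient and feedback values are assumptions chosen for illustration only. The run starts from a purely internal redistribution (surface warmed, deep ocean cooled by the same amount of energy), and the exchange term, standing in for ocean dynamics, can only move heat around. With a stable feedback the warm surface loses heat to space, so total heat content falls rather than rises; only if surface warming increased the net downward TOA flux does heat content grow, and then the initial perturbation amplifies instead of reversing.

```python
import numpy as np

# Illustrative, assumed parameters (not fitted to any model or observations)
C_S, C_D = 8.0, 100.0   # surface / deep-ocean heat capacities, W yr m^-2 K^-1
GAMMA = 0.7             # surface-to-deep exchange coefficient, W m^-2 K^-1

def run_two_box(lam, years=300.0, dt=0.1):
    """Forward-Euler two-box model started from a pure internal redistribution.

    lam > 0: surface warming increases outgoing radiation (stable feedback).
    lam < 0: surface warming increases the net downward TOA flux.
    Returns the total heat-content anomaly series (W yr m^-2) and the final Ts (K).
    """
    ts = 0.5                    # surface warmed by 0.5 K ...
    td = -C_S * ts / C_D        # ... deep ocean cooled by exactly the same energy
    energy = []
    for _ in range(int(years / dt)):
        n_toa = -lam * ts               # net downward TOA flux (no external forcing)
        exchange = GAMMA * (ts - td)    # ocean dynamics: moves heat, conserves the total
        ts += dt * (n_toa - exchange) / C_S
        td += dt * exchange / C_D
        energy.append(C_S * ts + C_D * td)
    return np.array(energy), ts

stable_energy, stable_ts = run_two_box(lam=1.2)
runaway_energy, runaway_ts = run_two_box(lam=-0.5)
print(f"stable:  max heat gain {stable_energy.max():.2f}, final Ts {stable_ts:.3f} K")
print(f"runaway: final heat gain {runaway_energy[-1]:.1f}, final Ts {runaway_ts:.2f} K")
```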

4) Indirect effects of solar forcing cannot explain recent trends

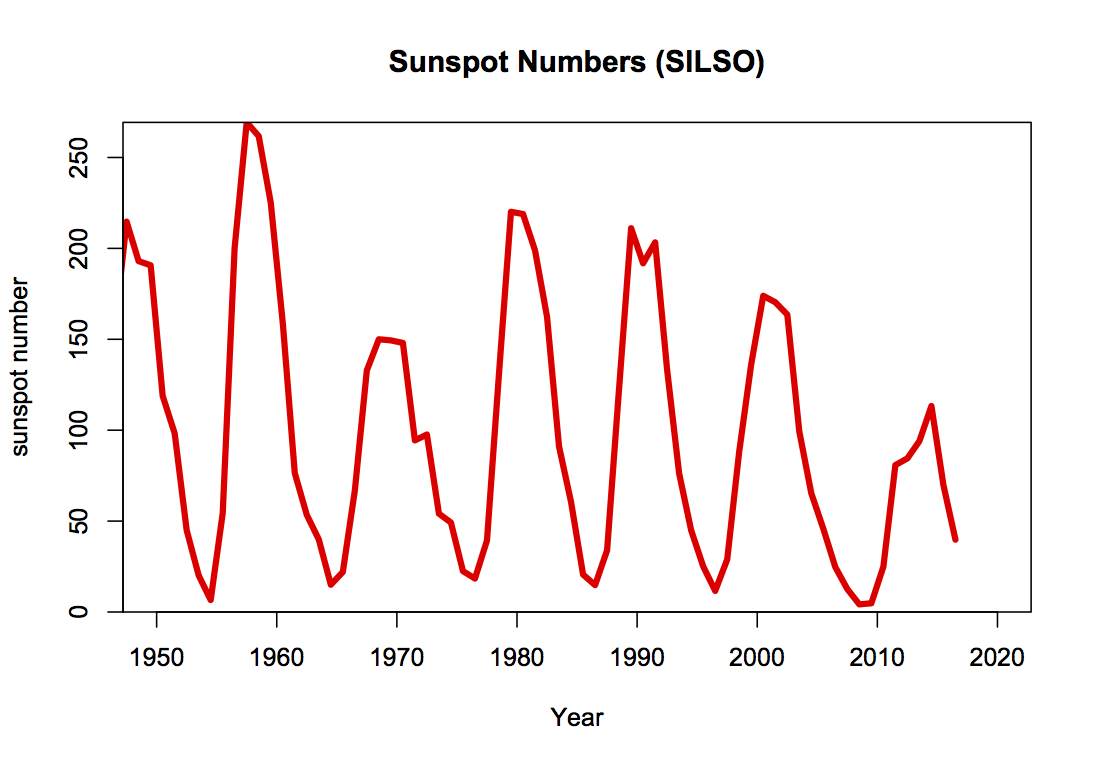

Solar activity impacts on climate are a fascinating topic, and encompass direct radiative processes, indirect effects via atmospheric chemistry and (potentially) aerosol formation effects. Much work is being done on improving the realism of such effects – particularly through ozone chemistry (which enhances the signal), and aerosol pathways (which don’t appear to have much of a global effect, e.g. Dunne et al. (2016)). However, attribution of post-1950 warming to solar activity is tricky (i.e. impossible), because solar activity has declined (slightly) over that time (see the figure above).

5) Aerosol forcings are indeed uncertain, but this does not impact the attribution of recent trends very much.

One of the trickier issues for fingerprint studies is distinguishing between the patterns from anthropogenic aerosols and greenhouse gases. While the hemispheric asymmetries are slightly larger for aerosols, the overall surface pattern is quite similar to that for greenhouse gases (albeit with a different sign). This is one of the reasons why the most confident statements in IPCC are made with respect to the “Anthropogenic” changes all together, since that doesn’t require parsing out the (opposing) factors of GHGs and aerosols. Therefore, in a fingerprint study that doesn’t distinguish between aerosols and GHGs, getting the exact value of the aerosol forcing right is basically irrelevant. If any specific model is getting it badly wrong, that will simply manifest as a scaling factor very different from 1, without changing the total attribution.
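A minimal sketch of why the aerosol magnitude drops out of a combined anthropogenic fingerprint. It idealizes "quite similar" as "identical shape, opposite sign", which is an assumption; with that idealization the scaling factor absorbs a wrong aerosol amplitude exactly, and the attributed anthropogenic change stays put. All series are synthetic.

```python
import numpy as np

def fit_single_scaling(fingerprint, obs):
    """Least-squares scaling factor for one combined fingerprint (no intercept)."""
    return float(fingerprint @ obs / (fingerprint @ fingerprint))

rng = np.random.default_rng(1)
n = 400
ghg = np.linspace(0.0, 1.0, n) ** 1.5   # hypothetical GHG response pattern
aer = ghg.copy()                         # assumption: aerosol pattern has the same shape, opposite sign
obs = ghg - 0.3 * aer + rng.normal(0.0, 0.02, n)   # synthetic "truth": aerosol offset of 0.3

results = []
for model_aer in (0.1, 0.3, 0.6):        # models with too weak, about right, and too strong aerosols
    anthro = ghg - model_aer * aer       # combined anthropogenic fingerprint for that model
    beta = fit_single_scaling(anthro, obs)
    attributed = beta * (anthro[-1] - anthro[0])   # attributed anthropogenic change
    results.append((model_aer, beta, attributed))
    print(f"aerosol {model_aer:.1f}: beta {beta:.2f}, attributed change {attributed:.3f}")
```

The scaling factor moves from below 1 to well above 1 as the model's aerosol forcing grows, while the attributed change stays near 0.7 in every case.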

What would it actually take to make a real argument?

As I’ve been asking for almost three years, it is way past time for Curry to shore up her claims in a quantitative way. I doubt that this is actually possible, but if one were to make the attempt, these are the kinds of things needed:

- Evidence that models underestimate internal variability at ~50-80 yr timescales by a factor of ~5.

- Evidence that indirect solar forcing can increase the long-term impact of solar by a factor of 3 on centennial time-scales or reverse the sign of the forcing on 50-80 yr timescales (one or the other, both would be tricky!).

- Evidence that warm surface ocean oscillations are associated with increased downward net radiation at the TOA. [This is particularly hard because it would mean the climate was fundamentally unstable].

- Evidence that the known fingerprints of different forcings are fundamentally wrong. Say, that CO2 does not cool the stratosphere, or that solar forcing doesn’t warm it.

Absent any evidence to support these statements, the claim that somehow, somewhere the straightforward and predictive mainstream conclusions are fundamentally wrong just isn’t credible.

References

- E.M. Dunne, H. Gordon, A. Kürten, J. Almeida, J. Duplissy, C. Williamson, I.K. Ortega, K.J. Pringle, A. Adamov, U. Baltensperger, P. Barmet, F. Benduhn, F. Bianchi, M. Breitenlechner, A. Clarke, J. Curtius, J. Dommen, N.M. Donahue, S. Ehrhart, R.C. Flagan, A. Franchin, R. Guida, J. Hakala, A. Hansel, M. Heinritzi, T. Jokinen, J. Kangasluoma, J. Kirkby, M. Kulmala, A. Kupc, M.J. Lawler, K. Lehtipalo, V. Makhmutov, G. Mann, S. Mathot, J. Merikanto, P. Miettinen, A. Nenes, A. Onnela, A. Rap, C.L.S. Reddington, F. Riccobono, N.A.D. Richards, M.P. Rissanen, L. Rondo, N. Sarnela, S. Schobesberger, K. Sengupta, M. Simon, M. Sipilä, J.N. Smith, Y. Stozkhov, A. Tomé, J. Tröstl, P.E. Wagner, D. Wimmer, P.M. Winkler, D.R. Worsnop, and K.S. Carslaw, "Global atmospheric particle formation from CERN CLOUD measurements", Science, vol. 354, pp. 1119-1124, 2016. http://dx.doi.org/10.1126/science.aaf2649

One very minor aside. For some reason Judy thinks that I think that “most”, “>50%” and “more than half” are distinct quantities. For the record, I do not. She might be a little confused because she herself wrote a paper in which she declared that the distinction between “most” and “more than half” was somehow important. It was not then, and it is not now.

Hi Gavin,

I think the statement below goes a little too far:

“To bring more energy into the system, that surface warming would have to cause the top-of-the-atmosphere radiation balance to change positively, but that would add to warming, amplifying the initial perturbation and leading to a runaway instability. There are really good reasons to think this is unphysical.”

We have a paper (Brown et al., 2014) that showed that global TOA energy imbalances can be internally generated and maintained for at least a decade in the CMIP5 models before the Planck Response eliminates the imbalance. Also, it is not necessarily clear that a decade is the maximum timescale at which this could occur.

Brown, P. T., W. Li, L. Li, and Y. Ming (2014), Top-of-atmosphere radiative contribution to unforced decadal global temperature variability in climate models, Geophys. Res. Lett., 41, 5175–5183, doi:10.1002/2014GL060625.

[Response: I don’t think I’m disputing that there can be decadal variations in TOA balance – but to get surface warming *and* sustained multi-decadal positive imbalance is much harder. – gavin]

Solar irradiance does not vary nearly as much as sunspot number. While the latter is useful, as it is all that we have before about 1978, direct measurements of irradiance show that the variation is at best 1 part in 1300 over the last forty or so years.

[Response: True – but all measures of solar activity show basically the same decline over this period. I might make a figure with more of them though… – gavin]
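To put "1 part in 1300" into forcing terms, the usual conversion divides an irradiance change by 4 (sphere versus intercepting disc) and removes the reflected fraction. The values below (TSI of about 1361 W/m² and a planetary albedo of 0.3) are approximate round numbers, used here as assumptions.

```python
TSI = 1361.0      # total solar irradiance, W/m^2 (approximate present-day value)
ALBEDO = 0.3      # planetary albedo (approximate)

def solar_forcing(delta_tsi, albedo=ALBEDO):
    """Global-mean forcing (W/m^2) from a TSI change: /4 for geometry, minus the reflected part."""
    return delta_tsi * (1.0 - albedo) / 4.0

delta = TSI / 1300.0          # a "1 part in 1300" change, about 1.05 W/m^2
print(f"dTSI = {delta:.2f} W/m^2 -> forcing = {solar_forcing(delta):.2f} W/m^2")
```

So a peak-to-trough irradiance change of that size corresponds to a global-mean forcing of under 0.2 W/m².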

Gavin, it seems to me that your first figure is largely irrelevant. In lots of other measures CMIP5 is not as convincing, such as cloudiness as a function of latitude, tropical TMT, global TMT, precipitation, sea surface temperature distribution, etc. Further, recent work shows that the ECS of a model can be largely tuned using details of the tropical convection and cloud parameterizations. You know that convection is an ill-posed problem because of the turbulent shear layers that play a prominent role. It’s thus not surprising that the parameterizations on coarse grids are no more than educated guesses.

I also think that it strains credulity to say that CMIP5 models were not tuned for the historical period. Perhaps not explicitly, but modelers know what this record is, and so it’s hard to know for sure whether they discarded some of the large number of parameterization possibilities based on it. I seem to recall that they are explicitly tuned for the TOA radiative balance.

[Response: Read the papers. The models are not tuned to get the temperature trend ‘right’ (since it is clear that they don’t!). TOA radiative balance (at the pre-industrial!) is a sine qua non of doing simulations – it has no implication for the attribution argument. – gavin]

Rather than saying that Judith is wrong, it would be more accurate to say that you disagree with Judith, and for that matter Nic Lewis, on the scientific validity of AOGCMs for predicting climate patterns or climate sensitivity.

[Response: No. Read it all again. I am pretty clear that there is no evidence for her claims and that her conclusion does not rest on a logical, quantitative argument. What I am asking for is a better argument. Maybe one exists? If it gets presented, I’ll evaluate it again. Not sure what Lewis has to do with this argument – in the paper they wrote together, they *assume* that the 20th C trends are anthropogenic! – gavin]

Nic gives a good exposition of some of these issues here:

https://niclewis.files.wordpress.com/2016/03/briefing-note-on-climate-sensitivity-etc_nic-lewis_mar2016.pdf

“One very minor aside. For some reason Judy thinks that I think that “most”, “>50%” and “more than half” are distinct quantities.”

Yes, that struck me as one of the many oddities about Judith’s (lack of) understanding of the contribution of human activity to global warming. She puts herself forward as someone who knows stuff, but arithmetic isn’t a strong suit. (I also get the impression from her blog articles that she hasn’t kept up with climate science literature of the past dozen years or so.)

http://blog.hotwhopper.com/2015/01/what-never-occurred-to-judith-curry-and.html

Gavin,

“1) Models are NOT tuned [for the late 20th C/21st C warming]”

Practice and philosophy of climate model tuning across six U.S. modeling centers

http://www.geosci-model-dev-discuss.net/gmd-2017-30/

“Abstract

Model calibration (or “tuning”) is a necessary part of developing and testing coupled ocean-atmosphere climate models regardless of their main scientific purpose. There is an increasing recognition that this process needs to become more transparent for both users of climate model output and other developers. Knowing how and why climate models are tuned and which targets are used is essential to avoiding possible misattributions of skillful predictions to data accommodation and vice versa. This paper describes the approach and practice of model tuning for the six major U.S. climate modeling centers. While details differ among groups in terms of scientific missions, tuning targets and tunable parameters, there is a core commonality of approaches. However, practices differ significantly on some key aspects, in particular, in the use of initialized forecast analyses as a tool, the explicit use of the historical transient record, and the use of the present day radiative imbalance vs. the implied balance in the pre-industrial as a target.”

See also (per your discussion paper) …

The Art and Science of Climate Model Tuning

http://journals.ametsoc.org/doi/abs/10.1175/BAMS-D-15-00135.1

“Abstract

The process of parameter estimation targeting a chosen set of observations is an essential aspect of numerical modeling. This process is usually named tuning in the climate modeling community. In climate models, the variety and complexity of physical processes involved, and their interplay through a wide range of spatial and temporal scales, must be summarized in a series of approximate submodels. Most submodels depend on uncertain parameters. Tuning consists of adjusting the values of these parameters to bring the solution as a whole into line with aspects of the observed climate. Tuning is an essential aspect of climate modeling with its own scientific issues, which is probably not advertised enough outside the community of model developers. Optimization of climate models raises important questions about whether tuning methods a priori constrain the model results in unintended ways that would affect our confidence in climate projections. Here, we present the definition and rationale behind model tuning, review specific methodological aspects, and survey the diversity of tuning approaches used in current climate models. We also discuss the challenges and opportunities in applying so-called objective methods in climate model tuning. We discuss how tuning methodologies may affect fundamental results of climate models, such as climate sensitivity. The article concludes with a series of recommendations to make the process of climate model tuning more transparent.”

So perhaps a simple question or perhaps a rabbit hole question …

What is/are the major difference(s) between how JC uses the word “tuning” and how you and yours use it?

[Response: Everyone understands that tuning in general is a necessary component of using complex models. (There was a previous discussion on RC that goes into more detail.) But the tunings that actually happen (to balance the radiation at the TOA, or an adjustment to gravity waves to improve temperatures in the polar vortex, etc.) have no obvious implication for attribution studies. Curry is claiming that the temperature trends themselves have been fixed ahead of time (by some means) and that this would make attribution circular. But since that did not actually happen (as evidenced by the graph above), her conclusion does not logically follow. – gavin]

Finally, a sort of rhetorical question: do you and yours consider climate model projections closer to the applied sciences than to the pure sciences?

Ask her about the unprecedented amount of CO2 in the atmosphere, again and again and again. Don’t let her steer the discussion into a distracting and confusing game of bullshit bingo. Her arguments are meant to distract and to confuse the debate.

Gavin, can you explain that second paragraph under your item “1)” in some other way? You’ve lost me. I understand forcings, but things went adrift when you started talking about “fingerprints” of forcings being calculated independently, using “subsets of drivers”, and when you talk about “patterns of change associated with drivers”.

[Response: I can take any of the individual drivers – GHGs, solar, volcanoes, aerosols, ozone etc. – and calculate the changes from that one component alone. If I change stratospheric ozone, I get a distinct change; likewise if I look at the impacts of a volcanic eruption, or if I see what happens when CO2 is doubled. (There is a good Hansen paper on this.) I can also do subsets, where I look for the pattern from all the natural factors, say, and then one with all the human-related factors separately. This is what the studies quoted in IPCC do. Each of these simulations has a change in the surface temperature, the temperature with height through the atmosphere, the heat flux into the ocean, the rainfall, sea level pressure, etc.; these are what I mean by the ‘patterns of change’. The fingerprint studies take these patterns and see how well they can be fitted to the observations using scaling factors. What can’t be explained (the residuals) is associated with internal variability or noise in the observations. This works as long as the patterns (‘fingerprints’) from the drivers are distinct from the patterns you would get from internal variability, but the more complete and multi-variate the observations, the less that is a problem. Better? – gavin]

Gavin @1,

I think the biggest take-away from Judy “Red Team Leader” Curry’s muddled account of the complexities of “‘Most’ versus ‘more than half’ versus ‘> 50%’” is her admission that she had not considered the possibility of natural forcings being a negative forcing until very recently. It isn’t entirely clear what she refers to as “this exchange,” but we are surely talking 2014. Up to this time, the fundamental idea that a wobbling-up-&-down natural cycle could go negative appears not to have occurred to her. That is surely a pretty profound oversight for a wobblologist.

David@4,

I think that rather than saying that Judy is wrong, one might instead say that she is “not even wrong”. She has consistently refused to make any sort of argument that goes beyond waving her hands. Of all the pseudoskeptics, I find her the least insightful.

OP:

Gavin, you are a dedicated scientist, committed to following the truth wherever it leads. Your blog post is aimed at RC regulars, who are (with a few notable exceptions) likewise trying not to fool themselves. The evidence you present is verifiable, your logic ineluctable.

Curry’s target audience, by stark contrast, are people who are quite happy to fool themselves. She has no need to offer them evidence or logic. Indeed, few if any of her blog readers will allow evidence and logic to interfere with their prejudices.

Sadly, it’s no mystery why Curry’s testimony was solicited by the House Science Committee and yours was not.

Thank you for a needed analysis of Curry’s continued obfuscations. This analysis continues the pertinent and valuable RealClimate tradition of getting the climate story told straight.

However, and without even the slightest implication of criticism of this important tradition, I’ve been thinking for some time that RealClimate could expand its scope in light of the body of evidence that living species and systems have already been and will continue to be affected by increasing anthropogenic heat — and all that follows in the wake of this rising heat.

Just within the past year alone, at least two highly important articles (Scheffers et al. 2016, Pecl et al. 2017) demonstrate the urgency of the situation for life on Earth.

References

- Scheffers et al., "The broad footprint of climate change from genes to biomes to people", Science, 11 November 2016.

- Pecl et al., "Biodiversity redistribution under climate change: Impacts on ecosystems and human well-being", Science, 31 March 2017.

Dr Judith Curry remains unconvinced of many, many things.

One never knows which of her non-arguments to take the least seriously…

There’s the ‘Italian flag’ non-argument.

There’s the ‘stadium wave’ non-argument.

There’s the ‘pause’ non-argument.

There’s the ‘it could all be natural internal variability’ non-argument.

There’s the ‘Lewis might be right’ non-argument.

There’s the ‘Ridley might be right’ non-argument.

There’s the ‘Montfort might be right’ non-argument.

There’s the ‘because free speech’ non-argument.

There’s the “I’m mostly concerned about the behavior of other scientists” non-argument.

And of course, there is her “I vote for more air conditioning in these susceptible regions” non-argument.

Judith Curry is the go-to expert on epistemic wait.

David #4 – Your comment suggests that the Christy MT comparisons with the CMIP5 models, as presented in his recent congressional testimonies, indicate a serious disagreement. But it’s well known that the basic MT measurements are impacted by stratospheric cooling, thus Christy (and others) must adjust the model results to simulate the MT product before making that comparison. This adjustment requires the use of another model to transform the CMIP5 results into simulated MT series, a process which is also questionable, IMHO. For starters, the transformation does not include surface emissivity variation, instead using only a single value applied to the surface air temperature. Thus, the differences between ocean and land, as well as the differences between open ocean and sea ice, are not captured. The impact of high-elevation landforms, such as the Himalayas, the Andes and the Antarctic, is also left out.

But, I suspect that Christy’s comparison isn’t intended to present a valid comparison. Instead, he knows that others have latched onto these presentations (for example, Monckton’s outpourings), promoting the notion that the result proves the models don’t follow changes in the real world, while ignoring the caveats usually attached to such comparisons. Remember, Christy is a Baptist preacher of sorts, thus he knows how to sell the invisible to the ignorant and gullible listener…

Curry is a high level troll with credentials, a sophisticated lexicon and understanding of the science who chooses to market her skills to entrenched interests who will reward her for this work. She is demonstrating scientific skills the way a card dealer demonstrates dexterity in a game of three card monte.

Oh, don’t feed the trolls with substantial arguments; simply identify them as trolls who are not engaging in a meaningful exchange of ideas and arguments that might increase our collective understanding.

Groan. My forehead hurts from face-palms.

I’m curious. What are the, for example, top 3 predictions by any of the “Red Team” members that have turned out to be *better* than those of mainstream climate science. (Stipulating, of course, that none of the competing predictions are likely to be spot on.)

Naturally, preference given to peer-reviewed work that proposes mechanisms. But heck, any prediction would still answer my question, if it were clearly specified, significant, long-term (=decades), and testable.

It is important not to elide the history of climate science, a relatively young work in progress, with the mature and ancient art of advertising.

The three principal lessons of Climateball-watching thus far remain:

1. House Committees in thrall to fossil fuel lobbyists will call on Curry’s claque whenever it is assembled, as with every successive Heartland Meeting that issues a press release.

2. Those regarding climate science as a means of delivering the electorate from an existential threat will issue a press release whenever a substantive addition to that discipline is published, or, more often, whenever a minor addition is made.

3. The geophysical system’s response, being equally indifferent to climate film-making and to the noises made by both sides of K Street, will proceed at a rate generally frustrating to climate change publicists, because regional weather variation will continue to capture public attention to a spontaneously greater degree than publicized climate change.

Absent interest in science on the right, and bipartisanship in public science education, this ground state may continue on a decadal scale.

This time I have 2 days until the next lecture, but I want to understand this. Can you recommend a nice fingerprinting reference?

My planned argument is as follows:

1. The internal energy of the climate system has increased since 1970 (or so). With ARGO and expendable bathythermographs one gets ocean warming. With MSUs and AMSUs one gets the atmosphere; boreholes for the land surface, GRACE for ice. Figure 1 of Box 3.1 of Working Group 1, AR5 IPCC shows this nicely with error bars: about 200 ZJ added to the climate system over the 35 years (1971-2006), based on temperatures and lost ice mass. (I skipped the evaporation of water: warmer air holds more water, and the column has been measured, etc. I also skipped geothermal; Fourier did not think it was much. I think all of that is left out.)

2. Spectroscopy and Keeling, plus radiative heat transfer calculations, give you around 630 ZJ added by the extra CO2 and around 1200 ZJ added by all the extra GHGs (Murphy et al., 2009).

3. The energy added by the extra GHGs is several times the amount needed to explain the observed warming. Energy has the convenient property of being the cause called for by the effect: add energy and temperatures go up and ice melts. So how do we get rid of the ‘excess’ energy? σT⁴ helps here, plus a couple of nice volcanoes, with the residual assigned to aerosols. Other aerosol mavens have asked why we haven’t warmed more.

4. Dr. Curry’s problem (apart from having had to serve as department chair – a true Gulag appointment if you ask me – would Lindzen agree? WSJ) is that her explanation requires that:

a) For each ZJ added by known forcings (added GHGs, for example) she must deep-six a ZJ somewhere we can’t find it, and supply a ZJ from the ocean while it warms. We know how much energy has been added. The usual suspects have been rounded up and have done more than the needed work (and heat transfer). If you want to unseat them as causes, you have to get rid of what they added and find another source to accomplish the observed deed. The “cycle” needs to have radiated an undetected 1200 ZJ to space, while finding 1200 ZJ somewhere to accomplish the observed warming. So we need something like the IRIS effect to sneak out something like 0.3 W/m² without CERES seeing it (OK). The IRIS effect must be a negative feedback sufficient to cancel out the GHG additions, and another 0.3 W/m² must be found to warm the climate.

We know GHGs warm, and that they warm sufficiently to explain the observed effects (more than sufficiently, by a factor of a few). Dr. Curry attacks Occam and physics and must beat them both to save the phenomenon of climate cycles. She needs hundreds of ZJ to warm the oceans, atmosphere and land, and to melt ice. And those ZJ need to come from somewhere not monitored well enough to see it. And she needs to get rid of hundreds of known ZJ from GHGs. If you argue that all known forcings must be jettisoned, that comes to nearly 2 W/m². But on the other hand, the Murphy paper has a pretty large residual as well.

So is energy balance the knock-out punch for Judy?

Chuck (class starts in 44 hours)
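Chuck's conversions between accumulated energy and an average flux can be checked with a few lines of arithmetic: divide the energy by the elapsed time and by Earth's surface area. The constants are standard approximate values; the function name is just for illustration.

```python
SECONDS_PER_YEAR = 3.156e7      # about 365.25 days
EARTH_AREA = 5.1e14             # Earth's surface area, m^2

def mean_flux(energy_zj, years):
    """Average global-mean flux (W/m^2) needed to accumulate `energy_zj` zettajoules over `years`."""
    return energy_zj * 1e21 / (years * SECONDS_PER_YEAR * EARTH_AREA)

# 200 ZJ over 1971-2006 works out to roughly a third of a W/m^2
print(f"{mean_flux(200, 35):.3f} W/m^2")
```

That is consistent with the "something like 0.3 W/m²" figure above.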

I don’t know much science but I know a bit about people. Judith Curry needs to feel “attacked” so she can play the victim.

Distinguished scientists challenging her hand-waving and waffle is all that validates her. If the deniers didn’t need a tame climate scientist she would be irrelevant and ignored.

What is important in science is the balance of informed opinion. Ultimately it doesn’t matter what one scientist argues if the explanation has been considered and dismissed by experts.

It goes without saying, the Science, Space & Technology Committee isn’t interested in science. Appeals to authority are fine, just like real courts. Truth is secondary to process, and obfuscation is the defence strategy.

Perhaps scientists need a lesson from doctors and dentists, some of whom train as lawyers so that their professions can better deal with disputes and litigation.

An incentive for scientists would be higher incomes and greater job security…

I’m just a lay person on climate, apart from some very basic stuff at university physical geography level. But even I can see you can’t attribute global warming since 1900 to ocean cycles. There’s no correlation between the PDO cycle and global warming since 1900, as in the graph linked below. In particular, the PDO cycle index peaks in a warm phase around 1985 and has been declining since then.

https://www.skepticalscience.com/Pacific-Decadal-Oscillation.htm

What mystifies me is why Judith Curry can’t see such an obvious thing, given her qualifications. I don’t know what it would be, but would guess she is an attention seeker, or possibly has some deeper ideology driving her scepticism.

But making provocative claims is going to get attention (like Trump does) and will have certain benefits, to Judith Curry that is. The trouble is her claims are illogical, and just lead the general public astray. This really gets on my nerves.

NigelJ @ 21

Looking at the quote, I don’t see Judith mentioning the PDO.

And “no correlation”?

http://woodfortrees.org/plot/jisao-pdo/plot/hadcrut4gl/from:1900/scale:3

[Response: PDO comes up often in Curry’s statements. As for correlation, 1) that’s not an impressive correlation and 2) the entire idea is silly because the PDO is a statistical measure of observed climate, which includes climate change. They are not independent, so a correlation like this wouldn’t demonstrate anything. –eric]

@23

The correlation is quite low if you measure it. Below 10% AFAIK.

You can “see” this on a scatter plot. It looks like a big blob, not any obvious lining up of the points.

Or you can put some filtering on your example plot, which as it stands might tune in on a Madonna due to all that noise:

http://woodfortrees.org/plot/jisao-pdo/mean:30/plot/hadcrut4gl/from:1900/scale:3/mean:30

Keith Woolard 23,

What are you, 12 years old? Do you think you are fooling anyone?

http://woodfortrees.org/plot/jisao-pdo/from:1900/trend/plot/hadcrut4gl/from:1900/trend

Oooh, look, I played with WFT and showed there is no correlation at all…

Sheesh…

Help with glitch please!

When I try to access this post (Judith Curry non-argument) in Firefox it hangs every time. No problem in Safari, and no problems with previous posts and comments in Firefox.

Any ideas?

Chuck — I think you have a very good idea there. If “cycles” are driving the warming, where are they getting the energy to do so? The whole point about cycles is that they go up and down. They don’t go steadily up.

nigelj – to figure Professor Curry out, you should look at the work of Tsonis et al. Basically they use the PDO until ~1985, coupling and synchronizing their way through the 20th century, whatever, and then drop it like a hot rock.

Which is where they went completely off the rails.

Maybe not that much added value, but since I already did the plot, you can also combine the results of #24 & #25. I’m not the most sophisticated stats guy in the world, but the fact that the 117-year trends are of opposite sign would seem to be–discouraging.

http://woodfortrees.org/plot/jisao-pdo/mean:30/plot/hadcrut4gl/from:1900/scale:3/mean:30/plot/jisao-pdo/trend/plot/hadcrut4gl/from:1900/trend

This suggestion from Judith:

has a good response from Gavin. But I think he may not have sufficiently emphasized this part of it: “… top-of-the-atmosphere radiation balance to change positively …”

If the oceans were driving energy into the atmosphere (over a period long enough to account for the observed warming trends in the atmosphere), then I imagine we’d also see the TOA warming and, consequently, more energy per unit time (power) radiating into space. But instead, as I understand things, satellites show slightly less outgoing radiation than that: the planet is taking in more energy than it radiates away. That is the opposite of what would be expected if ocean sources were the key.

(I understand, of course, that the “TOA” is a simplifying construction and that reality is more complex than such a single concept implies. But the point remains, regardless of that more complex reality.)
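The reasoning above can be made concrete with the standard linear energy-balance relation N = F − λΔT, where N is the net downward top-of-atmosphere flux, F the radiative forcing, λ the feedback parameter and ΔT the surface warming. The Python sketch below is a deliberately minimal illustration: the values of λ, ΔT and F are hypothetical round numbers, not estimates, and ocean heat uptake, warming patterns and other complications are ignored.

```python
# Hypothetical round numbers for illustration only, not observational estimates.
LAMBDA = 1.2   # climate feedback parameter, W m^-2 K^-1
WARMING = 0.8  # surface warming, K

def toa_imbalance(forcing, warming, lam=LAMBDA):
    """Net downward TOA flux N = F - lam * dT in W m^-2 (positive = planet gaining energy)."""
    return forcing - lam * warming

# Case 1: surface warming produced by heat moved out of the ocean, no external forcing.
n_unforced = toa_imbalance(forcing=0.0, warming=WARMING)
# Case 2: the same surface warming driven by a hypothetical 2.0 W m^-2 forcing.
n_forced = toa_imbalance(forcing=2.0, warming=WARMING)

print(f"unforced: N = {n_unforced:+.2f} W m^-2 (planet loses energy)")
print(f"forced:   N = {n_forced:+.2f} W m^-2 (planet gains energy)")
```

Only the forced case gives a positive N for the same surface warming, which is the sign the commenter describes the satellites reporting; internally driven surface warming would instead show up as energy leaving the system.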

As an aside of my own: I was kind of shocked to read here that Judith Curry could pretend conscientiousness while at the same time hand-waving so obviously, throwing out what appear to be broad-brush quantities spanning from “some” (20%) to “all” (100%) of recent warming.

Russell:

“Those regarding climate science as a means of delivering the electorate from an existential threat” will do what they do. For trained scientists, however, sufficient climate science has already been conducted. Per J. Nielsen-Gammon:

We now have the National Academy report. It begins with these two sentences (all-caps in the original):

In all humility, that’s more than good enough for me; and from it my own threat assessment ensues. With the electorate only as a constraint, I’m interested in forestalling an existential threat to any number of the Biosphere’s components, potentially including blog irritant “Mal Adapted”.

Keith Woolard @23

Some (but only some) of the warm points in the PDO index roughly line up with some temperature spikes over the last 100 years or so. It’s a weak correlation at best, and breaks down after 1985, which is the general period of global warming of most interest.

But the actual PDO index isn’t increasing; it just fluctuates or oscillates like El Niño, forming an overall flat line over the longer term. So there is no trend, and it can’t possibly be driving a long-term temperature increase.

And you have not shown me why you think the PDO would cause longer-term warming. You don’t explain a causal mechanism. We know it just shifts heat around between different parts of the oceans, and sometimes between the oceans and the atmosphere, in roughly 20-year cycles, so it is just a natural ocean cycle, a little like a slightly longer version of El Niño. Therefore it can’t drive long-term temperature increases over century timescales, only short-term fluctuations.

Now I would love it if the PDO explained global warming and ended the debate. But it just doesn’t. It has been eliminated as a suspect “beyond reasonable doubt”.
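A short Python sketch of why an oscillation cannot produce a century-scale trend: it fits an ordinary least-squares line (the kind of fit a WFT `trend` line is assumed to be) to a pure 20-year sinusoid and to a steady ramp over increasingly long records. The cycle length, the ramp rate and the annual sampling are hypothetical choices for illustration, not properties of the real PDO.

```python
import math

def ols_slope(y):
    """Ordinary least-squares slope of y against sample index 0..n-1."""
    n = len(y)
    tbar = (n - 1) / 2
    ybar = sum(y) / n
    num = sum((t - tbar) * (v - ybar) for t, v in enumerate(y))
    den = sum((t - tbar) ** 2 for t in range(n))
    return num / den

PERIOD = 20  # years; hypothetical PDO-like cycle length, for illustration only

def oscillation(years):
    """Annual samples of a pure sinusoid with a PERIOD-year cycle and no drift."""
    return [math.sin(2 * math.pi * t / PERIOD) for t in range(years)]

def ramp(years, rate=0.01):
    """Steady rise of `rate` units per year (a hypothetical forced trend)."""
    return [rate * t for t in range(years)]

# Compare fitted trends (units per year) as the record grows by whole cycles.
for years in (20, 60, 120, 240):
    print(years, round(ols_slope(oscillation(years)), 5), round(ols_slope(ramp(years)), 5))
```

Over whole cycles the oscillation's fitted trend shrinks roughly as the inverse square of the record length, and its sign depends only on where in the cycle the record starts; the ramp's trend stays at 0.01 per year however long the record. A persistent trend needs a persistent energy source.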

Eric Swanson (#14).

Attacking Christy’s graph is a staple of consensus enforcement. I note, however, that RealClimate has independently produced a similar graph that conveys the same information in a different form. Modulo minor baselining issues and averaging methods, the divergence is not much different. I assume RealClimate was very careful to use the exact definition of TMT in doing their AOGCM evaluations.

https://www.realclimate.org/images//tmt_trop_trends_2016.png

I think you all (Zebra, Kym and Eric) have completely missed the point of my comments.

I am not defending Dr Curry, I am not claiming that the PDO “causes” climate change. There was a blatant error in Nigelj’s comment. I simply pointed this out.

Sure, you can filter the time series and get a different result, as both Kym and Zebra have done, but there is a clear correlation in the medium and high frequencies.

This is meant to be a science blog, let’s call errors any time they occur, not just when “the red team” make them.

For Keith Woolard, who forgot to check two boxes in his Woodfortrees chart.

You were relying on your eyeballs, weren’t you?

http://woodfortrees.org/plot/jisao-pdo/trend/plot/hadcrut4gl/from:1900/scale:3/trend

There can be no quantitative argument proving that AGW exists or doesn’t exist. This is because we only know the “global” temperature to even a rough degree of certainty for perhaps the past 50 to “maybe” 100 years – an insignificant period in the climate of earth. Before that, we have scattered, sparse records using instruments of questionable accuracy and “stories” of past climate warming and cooling periods. So we can’t even say we have warming today, much less that it is caused by humans.

Doesn’t mean AGW isn’t real; it’s just that you can’t quantify it.

[Response: Lol. – gavin]

That last sentence would be more accurately written:

“Doesn’t mean AGW isn’t real; it’s just that you can’t quantify it compared to the distant past.”

No, ice records are not accurate “thermometers”.

@35

Apparently we need to check the “correlate high frequency components please” box. :)

http://woodfortrees.org/plot/jisao-pdo/mean:30/normalise/plot/hadcrut4gl/from:1900/mean:30/detrend:0.86/normalise

As they say, hypothesis and observation diverge after ’85.
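The plot above relies on two WFT operations. Below is a minimal Python sketch of what they are assumed to do here: `detrend:0.86` is taken to subtract a straight line that rises by 0.86 across the plotted span, and `normalise` to rescale a series to the range 0 to 1. Both readings are assumptions about WFT, not taken from its documentation, and the input series is synthetic.

```python
import math

def detrend_total(y, total_change):
    """Subtract a straight line rising by `total_change` from the first to the last sample."""
    n = len(y)
    return [v - total_change * t / (n - 1) for t, v in enumerate(y)]

def normalise(y):
    """Min-max rescale a series to the range [0, 1]."""
    lo, hi = min(y), max(y)
    return [(v - lo) / (hi - lo) for v in y]

# Synthetic example (not real data): a 0.86-unit rise plus a small 20-year wiggle, sampled yearly.
years = 117
wiggle = [0.1 * math.sin(2 * math.pi * t / 20) for t in range(years)]
series = [0.86 * t / (years - 1) + w for t, w in enumerate(wiggle)]

residual = detrend_total(series, 0.86)  # removing the assumed ramp recovers the wiggle
shape = normalise(residual)             # now on the same 0..1 scale as another normalised index
print(max(abs(r - w) for r, w in zip(residual, wiggle)))
```

Once the assumed warming ramp is subtracted and both curves are rescaled, only the shapes of the wiggles are being compared, so whether the curves appear to line up depends on the detrend constant chosen.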

KIA: There can be no quantitative argument proving that AGW exists or doesn’t exist.

BPL: Sure there can. You just can’t think of one. But your failure of imagination says nothing about what other people can come up with.

KIA: we only know the “global” temperature to even a rough degree of certainty for perhaps the past 50 to “maybe” 100 years – an insignificant period in the climate of earth.

BPL: Thermometer records go back to the 1600s. Enough for a good estimate of global temperature go back to 1850, which by my calculation is 167 years ago. And for before that, we have proxies. Google “paleoclimatology.”

KIA: Before that, we have scattered, sparse records using instruments of questionable accuracy

BPL: Questionable in what way?

KIA: and “stories” of past climate warming and cooling periods.

BPL: Not “stories.” Inductions based on evidence. You know, the whole “science” thing.

KIA: So we can’t even say we have warming today, much less that it is caused by humans.

BPL: We have warming today, and it is caused by humans.

I prefer my WfT versions of the PDO and the GMST.

Because I was drawing them long before this paper came out:

Impact of decadal cloud variations on the Earth’s energy budget

From the abstract:

… Specifically, the decadal cloud feedback between the 1980s and 2000s is substantially more negative than the long-term cloud feedback. This is a result of cooling in tropical regions where air descends, relative to warming in tropical ascent regions, which strengthens low-level atmospheric stability. Under these conditions, low-level cloud cover and its reflection of solar radiation increase, despite an increase in global mean surface temperature. These results suggest that sea surface temperature pattern-induced low cloud anomalies could have contributed to the period of reduced warming between 1998 and 2013, and offer a physical explanation of why climate sensitivities estimated from recently observed trends are probably biased low 4.

Seen this way, the PDO, whatever the heck it is, lines up quite well throughout.

Can someone write such a piece for a general audience and circulate it? I think it’s very important to do, because otherwise the House Science Committee will have succeeded in confusing the public yet again. Perhaps I missed it, but I’ve yet to see a well-written piece (for a general audience) on this House Science theater (horror show?).

David #33 wrote: “Attacking Christy’s graph is a staple of consensus enforcement.”

But there’s good reason for serious scientists to attack Christy’s use of the MT in his testimony. There’s a long history of concerns about the validity of the MSU2/AMSU5 data (aka: the MT), going back to Spencer and Christy’s work published in 1992 in which they introduced the TLT (or LT) as a correction for the MT. It was their claim at the time that the MT is contaminated by emissions from the stratosphere, which has a well known cooling trend. That Christy should sweep all this under the rug by presenting the MT without the usual and necessary qualifications is a good reason to condemn Christy for this obvious bias. The members of Congress can’t be expected to understand what he did, given their lack of technical training, and Christy hasn’t published his work either.

Christy’s graph gives the visual impression that there’s little tropical warming, which is bogus. Roy Spencer let the cat out of the bag when he mentioned that their MSU3/AMSU7 series (called TP, for tropopause) is also affected by stratospheric cooling, and that a correction could be applied to the TP just as the MT is corrected to produce the LT series. Spencer’s correction (which I call “Upper Troposphere”, or UT) uses the equation:

UT = 1.4 × TP − 0.4 × LS

Applying this equation, the UT series for the tropics has a trend of 0.13 K/decade, which is in close agreement with the LT tropical trend of 0.12 K/decade. Compare those values with the MT tropical trend of 0.08 K/decade and you will (hopefully) understand why Christy’s presentation appears to be biased…
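Because a least-squares trend is linear in the data, the UT trend follows directly from the TP and LS trends: trend(UT) = 1.4 × trend(TP) − 0.4 × trend(LS). A cooling lower stratosphere (negative LS trend) therefore pushes the UT trend above the TP trend. The Python sketch below checks that identity on synthetic series; the trend values passed to the hypothetical helper `ut_trend` are illustrative, not the actual satellite numbers.

```python
import math

def ols_slope(y):
    """Ordinary least-squares slope of y against sample index 0..n-1."""
    n = len(y)
    tbar, ybar = (n - 1) / 2, sum(y) / n
    num = sum((t - tbar) * (v - ybar) for t, v in enumerate(y))
    den = sum((t - tbar) ** 2 for t in range(n))
    return num / den

def ut_trend(tp_trend, ls_trend):
    """Hypothetical helper: trend of UT = 1.4 * TP - 0.4 * LS from the TP and LS trends alone."""
    return 1.4 * tp_trend - 0.4 * ls_trend

# Synthetic series (not real MSU/AMSU data): a warming TP and a cooling LS,
# each with deterministic wiggles standing in for year-to-year variability.
tp = [0.010 * t + 0.2 * math.sin(t) for t in range(100)]
ls = [-0.030 * t + 0.3 * math.cos(t) for t in range(100)]
ut = [1.4 * a - 0.4 * b for a, b in zip(tp, ls)]

# Trend of the combined series equals the same combination of the individual trends.
print(ols_slope(ut), ut_trend(ols_slope(tp), ols_slope(ls)))
```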

Scientists today are talking about warming in the small range of 0 to 2 C. Paleoclimatology cannot tell us temperature to this degree of accuracy/precision in the distant past. It can tell us that temps went up or down, but there is no way that it can tell us exactly how much. Paleoclimatologists dream that it can, and they even put labels on graphs indicating such high accuracy and precision, but it’s wishful thinking. There has even been disagreement and correction of the temp data measured in the past 20 years with digital and satellite instruments! And we are expected to believe they can give the same precision/accuracy 200,000 years ago? Get real!

AGW may well be real. The computer models may be correct. But making claims that we know with certainty the global temperature in the distant past is laughable.

[Response: Good thing no-one here made that claim then! Please try to do a little better than just throwing up transparent strawmen. Go on, try, it might be fun. – gavin]

This probably isn’t the place for it, but it would be very helpful to post that with an explanation of why you made the choices you did. A lot of people link to WfT who don’t know how to use the tools there (or who’ve been fooled looking at charts accompanied by misleading claims, as eyeballed by the guy above).

The self-appointed Mr. Know It All:

Like many AGW-deniers, MKIA uses the second-person pronoun inappropriately: he can’t quantify AGW compared to the distant past. It may be that ice records are the only sources of paleoclimate data he knows about. Regardless, he’s demonstrated the Dunning-Kruger effect again.

The true value of people like Curry is as the credentialed climate scientist who says what people seeking to avoid climate responsibility want to hear; it gives policy makers and people with relevant responsibilities a reasonable-seeming justification to ignore what the vast majority of other climate scientists tell them – or, more significantly, what the formal reports and advice commissioned and received by governments from institutions of science tell them.

People in positions of trust and responsibility, provided with expert advice that provides clear warning of serious consequences of excessive fossil fuel use are almost certainly acting negligently in choosing to believe Curry in order to have grounds to disbelieve the 97%.

I’m not sure that this back and forth of addressing the arguments she and her ilk produce is doing anything but raising their profile. “Red Team”? In the form the proponents want it would be a show trial with pre-ordained outcome, undertaken with prejudice, yet the public – and policy makers – would benefit from some kind of deep review and thorough explanations in accessible format – perhaps a collaboration between the best documentary makers and National Academies of Sciences/Royal Society; An Inconvenient Truth with the imprimatur of the world’s leading scientific institutions rather than with the baggage of partisan politics or “mere” documentary, ie entertainment makers, with every element backed by peer reviewed documentation.

As I envisage it –

Introduce the organisations – their workings, their achievements and why they are held in such high regard. Document the process of selection of the lead team, which should include both those with first-hand, high-level expertise and those with related but independent capabilities as a check and balance. Introduce the team as human beings as well as leading scientists. Go over every significant element from the fundamentals upwards. Use the extraordinary opportunities modern methods provide for visualisations of climate processes. It may be, in some ways, a “reality TV” style program at its public face – a video documentary of the review process.

There is a legitimate place for appeals to authority, when that authority has the necessary skills and applies proper scepticism and documents it.

I’ve mentioned this idea in comments here and there, but were I to begin a petition to request such a deep review from the National Academies, would people here support it? Or does anyone have suggestions or alternative proposals?

“And so, science is a fundamental part of the country that we are. But in this, the 21st century, when it comes time to make decisions about science, it seems to me people have lost the ability to judge what is true and what is not; what is reliable, what is not reliable; what should you believe, what should you not believe. And when you have people who don’t know much about science standing in denial of it and rising to power, that is a recipe for the complete dismantling of our informed democracy.” – Neil deGrasse Tyson

March for Science – Today

If not now… when?

The main thing that the figure in 1) in the post demonstrates is that the models predict more warming than the observations show.

Gavin Schmidt a real problem – Wall Street Journal.

http://www.wsj.com/video/opinion-journal-how-government-twists-climate-statistics/80027CBC-2C36-4930-AB0B-9C3344B6E199.html

31

Thanks to Mal for demonstrating the stability of the ground state, by stating the authority of National Academy reports, and then working around the absence from the one cited of the words (your all caps, not mine),

EXISTENTIAL THREAT.