With the official numbers now in, 2015 is, by a substantial margin, the new record-holder, the warmest year in recorded history for both the globe and the Northern Hemisphere. The title was sadly short-lived for previous record-holder 2014. And 2016 could be yet warmer if the current global warmth persists through the year.

One might well wonder: just how likely is it that we would be seeing these sort of streaks of record-breaking temperatures if not for human-caused warming of the planet?

Precisely that question was posed by several media organizations a year ago, in the wake of the then-record 2014 temperatures. Various press accounts reported odds anywhere from 1-in-27 million to 1-in-650 million that the observed run of global temperature records (9 of the 10 warmest years and 13 of the 15 warmest years each having occurred since 2000) might have resulted from chance alone, i.e. without any assistance from human-caused global warming.

My colleagues and I suspected the odds quoted were way too slim. The problem is that each year was treated as though it were statistically independent of neighboring years (i.e. that each year is uncorrelated with the year before it or after it), but that’s just not true. Temperatures don’t vary erratically from one year to the next. Natural variations in temperature wax and wane over a period of several years.

For example, we’ve had a couple of very warm years in a row now due in part to El Niño-ish conditions that have persisted since late 2013, and it is likely that the current El Niño event will boost 2016 temperatures as well. That is an example of a natural variation that is internally-generated. There are also natural variations in temperature that are externally-caused or ‘forced’, e.g. the multi-year cooling impact of large, explosive volcanic eruptions like the 1991 Mt. Pinatubo eruption, or the small-but-measurable changes in solar output that occur on timescales of a decade or longer. Each of these natural sources of temperature variation leads to correlations in temperature from one year to the next that would be present even in the absence of global warming. These correlations must be taken into account to get reliable answers to the questions being posed.

The particular complication at hand is referred to, in the world of statistics, as “serial correlation” or “autocorrelation”. In this case, it means that the effective size of the temperature dataset is considerably smaller than one would estimate based purely on the number of years available. There are N=136 years of annual global temperature data from 1880-2015. However, when the natural correlations between neighboring years are accounted for, the effective size of the sample is a considerably smaller N’~30. That means that warm and cold periods tend to occur in stretches of roughly 4 years at a time. Runs of several cold or warm years are far more likely to happen based on chance alone than one would estimate under the incorrect assumption that natural temperature fluctuations are uncorrelated from one year to the next.
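To make the idea of an effective sample size concrete, here is a minimal sketch in Python. It assumes a simple first-order autoregressive (AR(1)) picture of year-to-year persistence and uses the common rule of thumb N’ ≈ N(1−ρ)/(1+ρ), where ρ is the lag-1 autocorrelation. The article does not say which estimator was actually used, so the function names and the implied value of ρ below are illustrative assumptions, not results from the study.

```python
def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation of a sequence of numbers."""
    n = len(series)
    mean = sum(series) / n
    num = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    den = sum((x - mean) ** 2 for x in series)
    return num / den


def effective_sample_size(n, rho):
    """Rule-of-thumb effective sample size for an AR(1) process (an assumption here)."""
    return n * (1.0 - rho) / (1.0 + rho)


def rho_for_effective_size(n, n_eff):
    """Invert the rule of thumb: the lag-1 correlation implied by n and n_eff."""
    return (n - n_eff) / (n + n_eff)


# Which lag-1 correlation would shrink 136 years to roughly 30 independent samples?
rho = rho_for_effective_size(136, 30)
print(round(rho, 2))                              # 0.64
print(round(effective_sample_size(136, rho)))     # 30
print(round(136 / 30, 1))                         # 4.5 years per independent sample
```

Under this simplified picture, a lag-1 correlation of about 0.64 is enough to reduce 136 years of data to about 30 effectively independent values, which is consistent with warm and cold spells lasting roughly four years.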

One can account for such effects by using a more sophisticated statistical model that faithfully reproduces the characteristics of actual natural climate variability. My co-authors and I used such an approach to more rigorously assess the likelihood of recent runs of record-breaking temperatures. We have now reported our findings in an article just published in the Nature journal Scientific Reports. With the study having come out shortly after New Year, we are able to update the results from the study to include the new record 2015 temperatures.

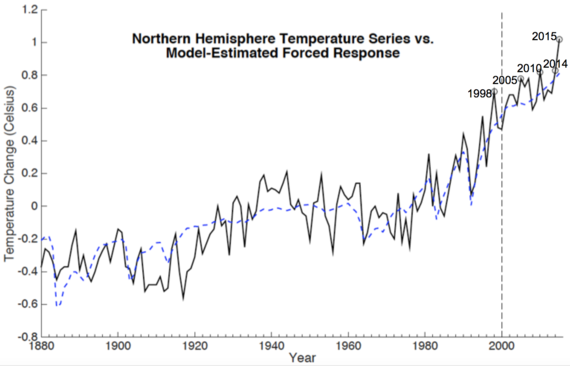

Our approach combines information from the state-of-the-art climate model simulations used in the most recent report of the Intergovernmental Panel on Climate Change (IPCC) with historical observations of global and Northern Hemisphere (NH) average temperature. Averaging over the various model simulations provides an estimate of the ‘forced’ component of temperature change–the component that is driven by external natural (i.e. volcanic and solar) and human (emission of greenhouse gases and pollutants) factors.

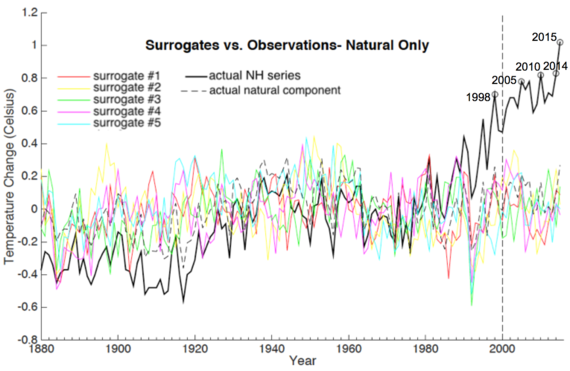

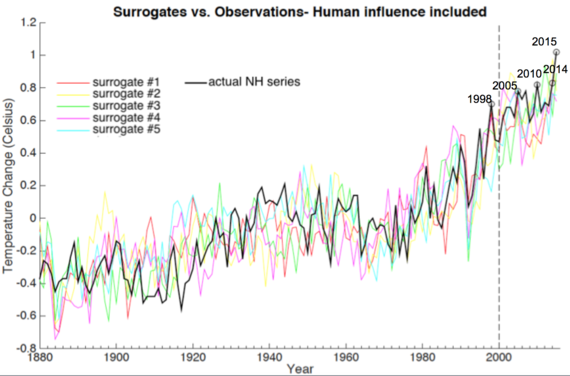

Using the statistical model, we generate a million alternative versions of the original series, called ‘surrogates’, each of which has the same basic statistical properties as the original series, but differs in its historical details, i.e. the magnitude and sequence of individual annual temperature values. Adding the forced component of natural temperature change (due to volcanic and solar impacts) to each of these surrogates yields an ensemble of a million surrogates for the total natural component of temperature variation.
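The surrogate procedure can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses an AR(1) noise model (one of several models the study considered), and the parameter values `rho` and `sigma` and the all-zero `forced_natural` series are hypothetical placeholders rather than values taken from the paper.

```python
import random


def ar1_surrogate(n_years, rho, sigma, rng):
    """One synthetic AR(1) noise series: x[t] = rho * x[t-1] + innovation."""
    # Scale the innovations so the series keeps a standard deviation of sigma.
    innovation_sd = sigma * (1.0 - rho ** 2) ** 0.5
    x = rng.gauss(0.0, sigma)  # start from the stationary distribution
    series = [x]
    for _ in range(n_years - 1):
        x = rho * x + rng.gauss(0.0, innovation_sd)
        series.append(x)
    return series


def natural_surrogates(forced_natural, rho, sigma, n_surrogates, seed=0):
    """Add the natural forced component to each AR(1) noise surrogate."""
    rng = random.Random(seed)
    n_years = len(forced_natural)
    return [
        [f + e for f, e in zip(forced_natural, ar1_surrogate(n_years, rho, sigma, rng))]
        for _ in range(n_surrogates)
    ]


# Hypothetical inputs: 136 years, no natural forcing, rho=0.64, sigma=0.1 C.
forced_natural = [0.0] * 136
surrogates = natural_surrogates(forced_natural, rho=0.64, sigma=0.1, n_surrogates=1000)
print(len(surrogates), len(surrogates[0]))  # 1000 136
```

The sketch draws a thousand surrogates to keep the run time short; the study itself used a million.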

These surrogates can be compared with the estimated natural component of the actual NH series as well as the full NH series itself (Figure 2). Tabulating results from the surrogates, which provide a million alternative, realistic scenarios of natural climate variability, we are able to diagnose how often a given run of record temperatures is likely to have arisen naturally. Our just-published study, having been completed prior to 2015, analyzed the data available through 2014, assessing the likelihood of 9 of the warmest 10 and 13 of the warmest 15 years having each occurred since 2000.
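The tabulation step amounts to counting. The following Python sketch shows one way to do it, assuming each surrogate is a plain list of annual values ending in the most recent year; the function names are hypothetical and this is not the code used in the study.

```python
def warmest_years_in_window(series, n_warmest, window):
    """Count how many of the n_warmest values fall within the final `window` entries."""
    ranked = sorted(range(len(series)), key=lambda i: series[i], reverse=True)
    cutoff = len(series) - window
    return sum(1 for i in ranked[:n_warmest] if i >= cutoff)


def run_frequency(surrogates, n_warmest, window, min_count):
    """Fraction of surrogates with at least min_count of the n_warmest years in the window."""
    hits = sum(
        1 for s in surrogates
        if warmest_years_in_window(s, n_warmest, window) >= min_count
    )
    return hits / len(surrogates)


# Toy check with a steadily rising 136-year series: every one of the
# 16 warmest years falls in the final 16 years.
rising = [0.01 * year for year in range(136)]
print(warmest_years_in_window(rising, n_warmest=16, window=16))  # 16
```

For a record ending in 2015, "since 2000" corresponds to `window=16`, so the question of 14 of the 16 warmest years would be posed as `run_frequency(surrogates, n_warmest=16, window=16, min_count=14)`.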

Updating the analysis to include 2015, we find that the record temperature run is even less likely to have arisen from natural variability. The odds are no greater than 1-in-300,000 that 14 of the 16 warmest years would have occurred since 2000 for the NH. The odds of back-to-back records (something we haven’t seen in several decades), as witnessed with 2014 and 2015, are roughly 1-in-1500.
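The back-to-back record test can be written as a simple check on each series. The Python sketch below assumes a series is a list of annual values whose last two entries stand for 2014 and 2015; the function names are hypothetical.

```python
def sets_record(series, index):
    """True if the value at `index` exceeds every earlier value in the series."""
    return index > 0 and series[index] > max(series[:index])


def back_to_back_records_at_end(series):
    """True if each of the final two years set a new all-time record."""
    n = len(series)
    return n >= 3 and sets_record(series, n - 2) and sets_record(series, n - 1)


print(back_to_back_records_at_end([0.0, 1.0, 0.5, 2.0, 3.0]))  # True
print(back_to_back_records_at_end([0.0, 3.0, 1.0, 2.0, 4.0]))  # False
```

Applying this check to every surrogate and dividing the number of hits by the number of surrogates gives the kind of frequency quoted above.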

We can also use the surrogates to assess the likelihoods of individual annual temperature records, such as those that occurred during 1998, 2005, 2010, 2014 and now 2015. Here we require not only that particular years are warmer than certain previous years, but that they reach a particular threshold of warmth. This is even less likely to happen in the absence of global warming, for reasons that are obvious from Figure 2: the natural temperature series almost never exceeds a maximum value of 0.4C relative to the long-term average, while the warmest actual year–2015–exceeds 1C. For none of the record-setting years–1998, 2005, 2010, 2014, or 2015–do the odds exceed 1-in-a-million for temperatures having reached the levels they did due to chance alone, for either the NH or global mean temperature.

The picture changes when human-caused warming is included in the analysis. Using data through 2014, we estimate a 76% likelihood that 13 of the warmest 15 years would occur since 2000 for the NH. Updating the analysis to include 2015, we find there is a 76% likelihood that 14 of the warmest 16 years would occur since 2000 as well. The likelihood of back-to-back records during the two most recent years 2014 and 2015 is just over 8%, still a bit of a fluke, but hardly out of the question.

As for individual record years, we find that the 1998, 2005, 2010, 2014, and 2015 records had likelihoods of 7%, 18%, 23%, 40% and 7% respectively. So while the 2014 temperature record had nearly even odds of occurring, the 2015 record had relatively long odds.

There is good reason for that. The 2015 temperature didn’t just beat the previous record, but smashed it, coming in nearly 0.2C warmer than 2014. The 2015 warmth was boosted by an unusually large El Niño event–indeed, by some measures, the largest on record. A similar story holds for 1998 which, prior to 2015, was itself the largest El Niño on record. It too boosted 1998 warmth, which beat the previous record (1995) again by a whopping 0.2C. Each of the two monster El Niño events was, in a statistical sense, somewhat of a fluke. And each of them imparted considerably greater large-scale warmth than would have been expected from global warming alone.

That analysis, however, neglects one intriguing possibility. Could it be that human-caused climate change is actually boosting the magnitude of El Niño events themselves, leading to more monster events like the ’98 and ’15 events? That proposition indeed finds some support in the recent peer-reviewed literature. If the hypothesis turns out to be true, then the record warmth of ’98 and ’15 might not have been flukes after all.

To summarize, we find that the various record temperatures and runs of unusually warm years since 2000 are extremely unlikely to have happened in the absence of human-caused climate change, and reasonably likely to have happened when we account for climate change. We can, in this sense, attribute the record warmth to human-caused climate change at a high level of confidence.

Will the onslaught of record-breaking temperatures finally put the discredited “global warming has stopped” talking point to rest? Alas, probably not.

But the next time you hear someone call into question the threat of human-caused climate change, you might explain to them that the likelihood we would be witnessing the recent record warmth in the absence of human-caused climate change is somewhere between one-in-a-thousand and one-in-a-million. You might ask them: Would you really gamble away the future of our planet with those sorts of odds?

Other versions of this commentary have been published at LiveScience and Huffington Post.

In the interest of rhetorical pre-emption could you sketch us a decadal graph chronicling the changes in instrumentation and statistical methods introduced since the 19th century establishment of international magnetic and meteorological networks by Humboldt and Golitsyn?

Oh Russell, you had me reaching for my blood pressure meds at this first comment… then I realized you offer a great insight into climatological ‘pataphysics. Thanks. Expect RC to touch on that soon.

As Mike has learned, any failure to draw a graph invites somebody’s PR firm to draw one of their own.

Pataphysics as proposed by a climatological psychoceramic, I imagine.

Where is this last curve going? If 2016 mirrors 1998 will we see an incredible spike again this summer? So far in Texas this winter El Niño has turned into warm wimpzilla.

Unfortunately, a lot of people play the lottery.

Autoregressive models are notoriously difficult to identify (especially with only 135 data points) and the inference is strongly dependent on identifiability. (i.e. It’s hard to be sure you are using the correct statistical model, and your conclusions change a lot if you aren’t.)

I suspect this is close to the best that can be achieved with purely statistical models and it does illustrate two things:

1) Naive calculation of the odds of the current temperature trend given no forcing will give a false impression of how unlikely the trend is; conversely,

2) Reasonable autoregressive assumptions still indicate the temperature trend is highly unlikely without forcing.

[Response: Thanks for your comment, which is well taken. We did investigate a variety of alternative statistical models (AR1, ARMA, persistent red noise) to explore the sensitivity of the results to the precise time series model used, but there are certainly other approaches that could have been taken and are worth exploring. Think of this study as more a proof-of-concept for how we should think about these sorts of questions. -Mike]

The large font of the first paragraph below figure three camouflages it as part of the figure legend. If it is skipped, the subsequent paragraph doesn’t make sense. …just FYI.

Indeed an excellent question.

Thank you for making the paper publicly available.

Any kind of warming could have produced the results, not just human caused warming.

[Response: Sorry, but that makes no sense. What “any kind of warming” are you talking about? We have accounted for all known natural and anthropogenic forcings that are relevant on these timescales in our analysis. All that’s left then is internal variability. And we show in the study that internal variability is extremely unlikely to have produced anything approaching the observed temperature records. So you’re then left speculating about what? Cosmic Rays? Some other favorite “magic missing factor”? That’s the stuff of denialist lore, not legitimate science. But thanks for stopping by :-) -Mike]

What do you say to Judith Curry’s recent rebuttal with copious comments?

http://judithcurry.com/2016/01/26/on-the-likelihood-of-recent-record-warmth/

[Response: Does anybody serious still listen to anything Judith Curry has to say? I don’t read her incoherent, confused, and misguided blog. But she’s always free to submit a comment for peer review. Here’s what happened last time she did that.

Curry has advocated a fatally-flawed methodology which defines everything left over after linear-detrending as natural variability. We have debunked this 4 times now in the peer-reviewed literature during the past 2 years:

Mann, M.E., Steinman, B.A., Miller, S.K., On Forced Temperature Changes, Internal Variability and the AMO, Geophys. Res. Lett. (“Frontier” article), 41, 3211-3219, doi:10.1002/2014GL059233, 2014.

Steinman, B.A., Mann, M.E., Miller, S.K., Atlantic and Pacific multidecadal oscillations and Northern Hemisphere temperatures, Science, 347, 988-991, 2015.

Steinman, B.A. Frankcombe, L.M., Mann, M.E., Miller, S.K., England, M.H., Response to Comment on “Atlantic and Pacific multidecadal oscillations and Northern Hemisphere temperatures”, Science, 350, 1326, 2015.

Frankcombe, L.M., England, M.H., Mann, M.E., Steinman, B.A., Separating internal variability from the externally forced climate response, J. Climate, 28, 8184-8202, 2015.

Just to be clear: Curry’s erroneous method for defining natural variability fatally compromises pretty much everything she says or does when it comes to the issue of distinguishing between anthropogenic and natural variability. -Mike]

[Response: I might add 3 quick responses to her 3 points of criticism.

1. Discrepancy of surface and satellite records: read Lewandowski on this – the satellite data are irrelevant here since we’re looking at surface temperatures.

2. Alleged model-data mismatch: Look at our Fig. 1 which shows it. We attribute all mismatch to internal natural climate variability. If model error contributes to a mismatch, then we overestimate internal natural climate variability, which would increase the odds that temperature records could happen by chance natural variability. Thus our approach is conservative.

3. Separating out natural from anthropogenic parts unsolved: Indeed there is not one generally agreed and clear-cut way to precisely separate natural from anthropogenic climate variations. That is why we try three different approaches in our paper, including one that extremely generously (to the extent of being unrealistic) provides for internal variability and which we use “as an extreme upper bound estimate on both the amplitude and persistence of climatic noise” (quote from the paper).

So while Curry’s criticism (1) is a red herring, we have not “glossed over” her points (2) and (3) as she claims but rather treated them properly and conservatively in the paper. She is very welcome to provide her own analysis of the probability of records in the peer-reviewed literature, and the community will judge which is more convincing – that is how science works. I look forward to it! -stefan]

Don’t mean to pile on or ftt, but aren’t there lots of signs that this is not “any kind of warming”? Only GHG triggered warming is likely to warm the poles much more than the equator, right? Same with the fact that the winters are warming faster than the summers, that the nights are warming faster than the days, the lower troposphere is warming while the stratosphere (iirc) is cooling slightly. Aren’t all of these (and more) fingerprints of warming that can only be caused by increases in greenhouse gases?

If there is some other “kind of warming” that Mr. DaSilva thinks could account for these patterns, he ought to enlighten us. We’re all ears.

Don’t the ratios of carbon isotopes being added to the atmosphere, biosphere and oceans tell us that it all comes from burning fossil fuels and land use changes?

I thought that C12 to C13 ratios indicate that it’s all coming from what us humans do?

For Pete Best: I copy-pasted your question into the search; this popped right up:

How do we know that recent CO2 increases are due to human activities?

Click the link to get the additional references mentioned

Energy comes in many forms and can be transformed; the form we use now warms the planet in an unregulated way. My idea transforms energy in a way that can be regulated and used to cool Earth at a desired set point. Right now we know that set point is far too high and must be lowered. I need climate scientists to join me in my quest to find that sweet spot in temperature and maintain it there… Ocean Tunnels are the way. Trying to get tunnels computer modeled by a university and to have a working scale model built to show proof of concept. Climate scientists are welcome. Join the Ocean Tunnels group here to help get this done. Don’t just click like… Join: https://www.facebook.com/groups/1548937018758434/

Thanks, Mike, for this research. I think the large increase in ocean heat content makes the succession of warmer years quite likely, and not due to internal variation. We can’t forget conservation of energy. But I hope to pose and get some reaction to a similar question.

What is the likelihood of a certain climate event occurring at least once before a given date? For instance, what is the chance of fatal wet bulb temperatures somewhere by 2050? by 2040? 2030? Or, what is the chance of floods some places and heat waves other places causing a massive global famine one time or more by the same dates?

Are these questions similar to the coinciding birthdays problem? With over 20 people in a room, the chance of two of them having the same birthday is over .5.

[Response: Hi Pete, thanks for your comments. Re heat content, well I would argue that this is implicit in the forced response we are using (from CMIP5 models that have that physics) and in the correlation structure we are estimating from the residuals. But perhaps what you’re getting at is the possibility that the correlation structure of the noise itself is changing as a result of climate change. Were that the case, it would indeed not be incorporated in our analysis. But given only 136 years of data, it would be extremely difficult to estimate a secular trend in the parameters of the statistical model (there is already a fair amount of uncertainty in fitting the statistical model with this short a time series, as one of the commenters noted earlier). As for the other analyses you mention, indeed–these are ALL worthwhile. Would that I had the time and resources to pursue each of those lines of inquiry… -Mike]

I forgot to add that autocorrelations may not have the same effect as in your (Mike’s) problem. Three similar years in a row may make it more likely that drought, say, will overcome agriculture.

Inspired by 12: As an ordinary member of the public, I do not experience (global) warming at my location. Instead over the last 4 or 5 years I am clearly experiencing the greenhouse effect. To build a hot-house, you trap as much sun as you can in a confined space and then hold on to the heat longer. The filter maximises heat input and minimises heat loss. But it is a filter, so the pure sun above the filter may actually be hotter.

5 years ago, the sky was mainly clear, giving 30 to 40 temperatures during the day and 10 to 20 during the night. The last few years, it is often cloudy, causing lower temps during the day and higher during the night. Keeping records of average temperatures does not capture this, cause they average to similar values. Keeping Min and Max does not capture this, cause there are still some days without cloud resulting in very high and very low temperatures.

Most days this summer have been in the mid-20tees, but I just had 3 days of about 38. Not real high, cause I have often had mid 40-tees, even 48, but these 3 days felt even hotter than that. Unbearable, sweat dripping, almost like being in a hot-house :)

I would love to blame human induced climate change for these dismal summers, so I have requested from our Weather Bureau detailed temperature data from a relatively local station (40km). Unlike most other stations, they were automated in 2006 and capture weather data at 1-minute intervals. Surely with the right analysis (help?), I can once and for all prove that Climate Change is wrecking my weather?

On why we know the warming is human-caused:

http://bartonlevenson.com/AnthropogenicCO2.html

Regarding point #6 (Bruce Tabor) above and in part because it’s marginally pertinent anyway, a bunch of expert statisticians and then a dunce (me) looked at the upper-tercile streak of lower-48 states temperatures and tried to calculate the odds under various assumptions. They each developed their own model then compared. The paper (still pay-walled til May) is here:

http://onlinelibrary.wiley.com/doi/10.1002/2013JD021446/full.

The online supplement includes quite a lot of R routines they used that folks may find useful.

Distinct from the present analysis, no climate models were used. But the bottom-line conclusions were very much in the same vein. The US lower-48 series is noisier than the global one and the metric used (tercile strings) is distinct, so they are not directly comparable.

#19–Thanks, Barton. That’s a good summation.

Here’s another one–a little more length, and a little more glitz (though lacking the references), here:

http://hubpages.com/politics/How-Do-We-Know-That-Humans-Are-Responsible-For-Rising-CO2

#15 Patrick – So you want to speed up the heating of the oceans?

#22 Jef: Negative. The concept regulates the ocean temperature based on a given setpoint of a 3-way temperature flow control valve. The temperature of the ocean can be regulated to a lower value over a certain time scale. Sam Carana has a nice write-up on the tunnels here: http://arctic-news.blogspot.com/2015/02/multiple-benefits-of-ocean-tunnels.html or you can go to my Ocean Tunnels FB page to learn more about them here: https://www.facebook.com/groups/1548937018758434/

> Patrick … Sam Carana

Patrick, that’s not a recommendation. You can look him up.

Op. cit.: https://www.realclimate.org/?comments_popup=17328#comment-552429

#24 Ok Hank then I recommend it.

#11, Stefan´s response: “the satellite data are irrelevant here”;

Why only these satellite data and not the CERES or GRACE data for example?

AntonyIndia,

CERES and GRACE are also irrelevant here–and for the same reason. They aren’t measuring temperature. AMSU instruments also are not measuring ground temperature. Try to keep up.

Climate is stats of weather over last 30 years. Shouldn’t your paper have addressed probabilities of 27 of 30 warmest years occurring in the last 30 years rather than 13 of 15 or 9 of 10? (27 of 30 is what I count for GISS LOTI, other records might be different.)

[Response: Thanks for the question Crandles. We did indeed address this point in the article (emphasis added):

-Mike]

I think that it’s interesting that some people still believe that the recent climate change is just due to the Earth’s natural warming. The information in this post says that the odds that the temperatures in the past 15 years have been so high just by chance are between 1-in-27 million and 1-in-650 million. This fact alone clearly supports that global warming is real.

> 1-in-27 million and 1-in-650 million

But people think in terms of their _own_ odds.

Climate risk isn’t the chance any individual takes.

It’s the chance the planet takes.

http://www.npr.org/sections/thetwo-way/2016/01/09/462504101/-900-billion-prize-1-in-292-million-odds-and-a-few-more-lottery-numbers

> 1-in-27 million and 1-in-650 million

Those figures are not credible. See the fourth paragraph of the blog article you are responding to.

[Response: Ummmm. You do realize that our article is about *why* those numbers aren’t credible, and how to do this properly? And by the way, we didn’t respond to any “blog articles”. I cite several media reports. These are not the same thing. Thanks for stopping by. -Mike]

Re. Mike’s response to #31: I was responding to comments immediately preceding mine, in particular Alex’s #29, not to the article itself. I was surprised to see commenters quote the figures rejected in the article’s fourth paragraph as if the article endorsed those figures instead. I apologize for the misunderstanding.

[Response: Oops, my bad. Thanks for the clarification, and sorry for my mis-reading of your comment! -Mike]

Maybe on a pathway to perennial El Niños even?

A potential future world scenario, driven by rapid regional changes http://climatestate.com/2016/02/05/a-potential-future-world-scenario-driven-by-rapid-regional-changes/

#33 Chris Machens

That’s an interesting article on the possibility of imminent rapid changes in the global climate — but I wish the author had asked a language expert to fix his horrible English before posting it. Bad English is not only irritating but also impedes understanding.

Digby Scorgie, you can make a difference, comment below the article and point out what needs to be fixed. This would be very much appreciated. Thanks.

#34 “horrible English” Pretty harsh comment with no good purpose. The author is German if you had bothered to check and not a native English speaker, but even then his text is beyond what the typical US college graduate can manage …so not that far off fluent. While less than perfect English might annoy you, you might also want to consider how many are going to find your type of response..really annoying.

Sorry, Chris. Fixing the bad English in all the website articles I read would be a full-time occupation. At my, ahem, advanced age I don’t have the time.

It is analogous to an Apollo moon shot. Every minute it’s higher than at the previous minute. Of course with the proviso that the O-rings perform properly.

#36 Jim

On the few occasions I ventured something in my second language I made damn sure a native speaker checked it first. And sometimes I’ve returned the favour.

However, this is not the forum to debate the necessity for good language skills, including in science. It should be self-evident. I propose we now drop the subject and annoy the moderators no further.

It is interesting that people don’t believe that the Earth’s climate is changing because of humans. The CO2 gases we put into the atmosphere cause the infrared rays to bounce back onto the Earth’s surface, causing it to rise in heat. Also, just from the article stating that the odds of this warming being coincidental are “anywhere from 1-in-27 million to 1-in-650 million” supports the fact that global warming is actually happening.

I find it intriguing that we have always been told that in 10 or 15 years we will be greatly impacted by climate change, but from this article 10-15 years is going to be an overstatement.

From 1880 to 1920 (at least 1905-1920) it seems that there is also a statistical difference (in reverse form). Black carbon? Both 1905-1920 and 2000-2015 will decrease the probability of “natural only” (?)

“…seeing these sort of streaks of record-breaking temperatures”

It is unclear to me how a climate scientist can then announce

According to Dr. Tamsin Edwards: “Over the past 17 years or so there has been a slowdown — even a pause — in the rate of warming of the atmosphere. ”

https://news.vice.com/article/there-is-some-uncertainty-in-climate-science-and-thats-a-good-thing

And that’s it, no mention of the ocean, the recent record breaking years… most people will just think, oh really no warming …

thus is misleading, and is fodder for people who think there is no warming.

[Response: By any reasonable parsing her statement is simply false. We have argued elsewhere that there was a temporary natural slowdown during the 2000s decade, but it’s fairly clear that this was (a) temporary, (b) has ended and (c) is consistent with what we understand about internal decadal variability. So like much of what I have read of her public commentary, this piece is at best misleading, i.e. it fails the truth-o-meter. -Mike]

[addendum (3/12/16): It has come to my attention that some people may not be familiar with the cultural reference of the “truth-o-meter”. It is something that is used by fact-checking organizations to assess whether or not an assertion is true. See e.g. politifact. It does not, nor has it ever, purported to evaluate the intent or honesty of the individual in question, but only whether or not the statement is true. This is all probably obvious and well-known to most people, but apparently not to some. -Mike]

#43 Chris Machens and Mike’s reply-

You only needed to read one more sentence!

I don’t see this as unreasonable at all. I get that the “pause” is controversial due to definitions and statistical fragility, but Tamsin’s quote is entirely consistent with Mike’s three summary points that most people agree with.

[Response: Sorry Chris, but no. The statement “even a pause” is not supportable. Full stop. -Mike]

#43, #44, with in-lines–Uh, that piece from Dr. Edwards says it’s from September of 2014. So, to be scrupulously fair, it was written *before* those ‘record breaking years.’

Moral: Always check the byline date–if they give it. (And if you’re controlling such things, give the byline date if you reasonably can.)

And why are we talking about it? Pretty forgettable at best, even if you miss the timeline.

That’s an interesting article on the possibility of imminent rapid changes in the global climate — but I wish the author had asked a language expert to fix his horrible English before posting it. Bad English is not only irritating but also impedes understanding.

It’s good that the climate change community is always checking data provided to the public; a well informed public is important because of the skepticism about climate change. People just print data to get views and ratings without making sure the data is accurate; they fail to check other sources to check their data.

Copy-bot infestation detected.

Original:

Digby Scorgie says:

5 Feb 2016 at 7:48 PM

#33 Chris Machens

That’s an interesting article on the possibility of imminent rapid changes in the global climate — but I wish the author had asked a language expert to fix his horrible English before posting it. Bad English is not only irritating but also impedes understanding.

…

Copybot:

46

Donovan Rudolph says:

29 Feb 2016 at 8:26 AM

That’s an interesting article on the possibility of imminent rapid changes in the global climate — but I wish the author had asked a language expert to fix his horrible English before posting it. Bad English is not only irritating but also impedes understanding.