Guest Commentary by Jim Bouldin (UC Davis)

How much additional carbon dioxide will be released to, or removed from, the atmosphere, by the oceans and the biosphere in response to global warming over the next century? That is an important question, and David Frank and his Swiss coworkers at WSL have just published an interesting new approach to answering it. They empirically estimate the distribution of gamma, the temperature-induced carbon dioxide feedback to the climate system, given the current state of the knowledge of reconstructed temperature, and carbon dioxide concentration, over the last millennium. It is a macro-scale approach to constraining this parameter; it does not attempt to refine our knowledge about carbon dioxide flux pathways, rates or mechanisms. Regardless of general approach or specific results, I like studies like this. They bring together results from actually or potentially disparate data inputs and methods, which can be hard to keep track of, into a systematic framework. By organizing, they help to clarify, and for that there is much to be said.

Gamma has units in ppmv per ºC. It is thus the inverse of climate sensitivity, where CO2 is the forcing and T is the response. Carbon dioxide can, of course, act as both a forcing and a (relatively slow) feedback; slow at least when compared to faster feedbacks like water vapor and cloud changes. Estimates of the traditional climate sensitivity, e.g. Charney et al., (1979) are thus not affected by the study. Estimates of more broadly defined sensitivities that include slower feedbacks, (e.g. Lunt et al. (2010), Pagani et al. (2010)), could be however.

Existing estimates of gamma come primarily from analyses of coupled climate-carbon cycle (C4) models (analyzed in Friedlingstein et al., 2006), and a small number of empirical studies. The latter are based on a limited set of assumptions regarding historic temperatures and appropriate methods, while the models display a wide range of sensitivities depending on assumptions inherent to each. Values of gamma are typically positive in these studies (i.e. increased T => increased CO2).

To estimate gamma, the authors use an experimental (“ensemble”) calibration approach, by analyzing the time courses of reconstructed Northern Hemisphere T estimates, and ice core CO2 levels, from 1050 to 1800, AD. This period represents a time when both high resolution T and CO2 estimates exist, and in which the confounding effects of other possible causes of CO2 fluxes are minimized, especially the massive anthropogenic input since 1800. That input could completely swamp the temperature signal; the authors’ choice is thus designed to maximize the likelihood of detecting the T signal on CO2. The T estimates are taken from the recalibration of nine proxy-based studies from the last decade, and the CO2 from 3 Antarctic ice cores. Northern Hemisphere T estimates are used because their proxy sample sizes (largely dendro-based) are far higher than in the Southern Hemisphere. However, the results are considered globally applicable, due to the very strong correlation between hemispheric and global T values in the instrumental record (their Figure S3, r = 0.96, HadCRUT basis), and also of ice core and global mean atmospheric CO2.

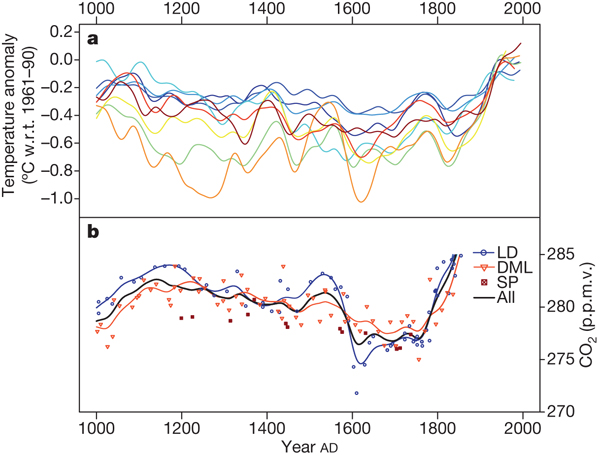

The authors systematically varied both the proxy T data sources and the methodological variables that influence gamma, and then examined the distribution of the nearly 230,000 resulting values. The varying data sources include the nine T reconstructions (Fig 1), while the varying methods include things like the statistical smoothing method, and the time intervals used both to calibrate the proxy T record against the instrumental record and to estimate gamma.

Figure 1. The nine temperature reconstructions (a), and 3 ice core CO2 records (b), used in the study.

Some other variables were fixed, most notably the calibration method relating the proxy and instrumental temperatures (via equalization of the mean and variance for each, over the chosen calibration interval). The authors note that this approach is not only among the mathematically simplest, but also among the best at retaining the full variance (Lee et al, 2008), and hence the amplitude, of the historic T record. This is important, given the inherent uncertainty in obtaining a T signal, even with the above-mentioned considerations regarding the analysis period chosen. They chose the time lag, ranging up to +/- 80 years, which maximized the correlation between T and CO2. This was to account for the inherent uncertainty in the time scale, and even the direction of causation, of the various physical processes involved. They also estimated the results that would be produced from 10 C4 models analyzed by Friedlingstein (2006), over the same range of temperatures (but shorter time periods).
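To make the two steps just described more concrete, here is a minimal Python sketch. It is not the authors' code: the function names, the assumption of annually spaced series, and the use of an ordinary least-squares slope as the gamma estimate are all illustrative choices, and the real analysis also varies smoothing and time windows.

```python
import numpy as np

def calibrate_by_mean_and_variance(proxy, proxy_cal, instrumental_cal):
    """Rescale `proxy` so that, over the calibration interval, its mean and
    standard deviation equal those of the instrumental record."""
    proxy = np.asarray(proxy, dtype=float)
    scale = np.std(instrumental_cal) / np.std(proxy_cal)
    return (proxy - np.mean(proxy_cal)) * scale + np.mean(instrumental_cal)

def align(temperature, co2, lag):
    """Pair T at step i with CO2 at step i + lag (lag > 0: CO2 lags T)."""
    temperature = np.asarray(temperature, dtype=float)
    co2 = np.asarray(co2, dtype=float)
    n = len(temperature)
    if lag >= 0:
        return temperature[: n - lag], co2[lag:]
    return temperature[-lag:], co2[: n + lag]

def best_lag(temperature, co2, max_lag=80):
    """Return the lag in [-max_lag, max_lag] with the highest T-CO2 correlation."""
    lags = range(-max_lag, max_lag + 1)
    return max(lags, key=lambda lag: np.corrcoef(*align(temperature, co2, lag))[0, 1])

def estimate_gamma(temperature, co2, lag):
    """Slope of CO2 (ppm) regressed on T (deg C) at the chosen lag."""
    t, c = align(temperature, co2, lag)
    slope, _intercept = np.polyfit(t, c, 1)
    return slope
```

Repeating something like this over every combination of reconstruction, calibration window, and smoothing choice is what would produce a distribution of gamma values rather than a single number.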

So what did they find?

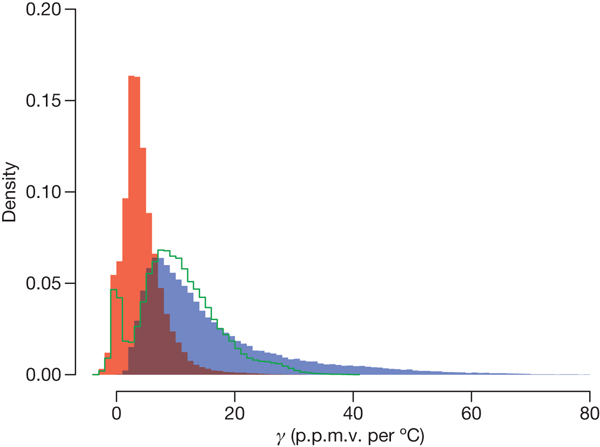

In the highlighted result of the work, the authors estimate the mean and median of gamma to be 10.2 and 7.7 ppm/ºC respectively, but, as indicated by the difference between the two, with a long tail to the right (Fig. 2). The previous empirical estimates, by contrast, come in much higher, at about 40 ppm/degree. The choice of the proxy reconstruction used, and the target time period analyzed, had the largest effect on the estimates. The estimates from the ten C4 models were higher on average; it is about twice as likely that the empirical estimates fall in the model estimates’ lower quartile as in the upper. Still, six of the ten models evaluated produced results very close to the empirical estimates, and the models’ range of estimates does not exclude those from the empirical methods.

Figure 2. Distribution of gamma. Red values are from 1050-1550, blue from 1550-1800.

Are these results cause for optimism regarding the future? Well the problem with knowing the future, to flip the famous Niels Bohr quote, is that it involves prediction.

The question is hard to answer. Empirically oriented studies are inherently limited in applicability to the range of conditions they evaluate. As most of the source reconstructions used in the study show, there is no time period between 1050 and 1800, including the medieval times, which equals the global temperature state we are now in; most of it is not even close. We are in a no-analogue state with respect to mechanistic, global-scale understanding of the inter-relationship of the carbon cycle and temperature, at least for the last two or three million years. And no-analogue states are generally not a real comfortable place to be, either scientifically or societally.

Still, based on these low estimates of gamma, the authors suggest that surprises over the next century may be unlikely. The estimates are supported by the fact that more than half of the C4-based (model) results were quite close (within a couple of ppm) to the median values obtained from the empirical analysis, although the authors clearly state that the shorter time periods that the models were originally run over make apples to apples comparisons with the empirical results tenuous. Still, this result may be evidence that the carbon cycle components of these models have, individually or collectively, captured the essential physics and biology needed to make them useful for predictions into the multi-decadal future. Also, some pre-1800, temperature independent CO2 fluxes could have contributed to the observed CO2 variation in the ice cores, which would tend to exaggerate the empirically-estimated values. The authors did attempt to control for the effects of land use change, but noted that modeled land use estimates going back 1000 years are inherently uncertain. Choosing the time lag that maximizes the T to CO2 correlation could also bias the estimates high.

On the other hand, arguments could also be made that the estimates are low. Figure 2 shows that the authors also performed their empirical analyses within two sub-intervals (1050-1550, and 1550-1800). Not only did the mean and variance differ significantly between the two (mean/s.d. of 4.3/3.5 versus 16.1/12.5 respectively), but the R squared values of the many regressions were generally much higher in the late period than in the early (their Figure S6). Given that the proxy sample size for all temperature reconstructions generally drops fairly drastically over the past millennium, especially before their 1550 dividing line, it seems at least reasonably plausible that the estimates from the later interval are more realistic. The long tail–the possibility of much higher values of gamma–also comes mainly from the later time interval, so values of gamma from say 20 to 60 ppm/ºC (e.g. Cox and Jones, 2008) certainly cannot be excluded.

But this wrangling over likely values may well be somewhat moot, given the real world situation. Even if mean estimates as high as, say, 20 ppm/ºC are more realistic, this feedback rate still does not compare to the rate of increase in CO2 resulting from fossil fuel burning, which at recent rates would exceed that amount in between one and two decades.
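The comparison can be checked with a few lines of Python. This is only a back-of-envelope sketch: the function name is hypothetical, and the emission rate of 1.87 ppm/year is an assumption taken from the 1998 to 2008 Mauna Loa figure quoted in the comment thread, not a number from the paper.

```python
def years_to_match_feedback(gamma_ppm_per_degc, warming_degc, emission_rate_ppm_per_year):
    """Years of direct CO2 growth needed to equal the feedback CO2
    released by `warming_degc` of warming at a given gamma."""
    feedback_ppm = gamma_ppm_per_degc * warming_degc
    return feedback_ppm / emission_rate_ppm_per_year

# Assumed inputs: gamma = 20 ppm/degC, 1 degC of warming, 1.87 ppm/year growth.
years = years_to_match_feedback(20.0, 1.0, 1.87)
print(f"{years:.1f} years")  # roughly 10.7 years
```

With the median gamma of 7.7 ppm/ºC the same calculation gives a much shorter interval, which is the point of the paragraph above.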

I found some other results of this study interesting. One such involved the analysis of time lags. The authors found that in 98.5% of their regressions, CO2 lagged temperature. There will undoubtedly be those who interpret this as evidence that CO2 cannot be a driver of temperature, a common misinterpretation of the ice core record. Rather, these results from the past millennium support the usual interpretation of the ice core record over the later Pleistocene, in which CO2 acts as a feedback to temperature changes initiated by orbital forcings (see e.g. the recent paper by Ganopolski and Roche (2009)).

The study also points up the need, once again, to further constrain the carbon cycle budget. The fact that a pre-1800 time period had to be used to try to detect a signal indicates that this type of analysis is not likely to be sensitive enough to figure out how, or even if, gamma is changing in the future. The only way around that problem is via tighter constraints on the various pools and fluxes of the carbon cycle, especially those related to the terrestrial component. There is much work to be done there.

References

Charney, J.G., et al. Carbon Dioxide and Climate: A Scientific Assessment. National Academy of Sciences, Washington, DC (1979).

Cox, P. & Jones, C. Climate change – illuminating the modern dance of climate and CO2. Science 321, 1642-1644 (2008).

Frank, D. C. et al. Ensemble reconstruction constraints on the global carbon cycle sensitivity to climate. Nature 463, 527-530 (2010).

Friedlingstein, P. et al. Climate-carbon cycle feedback analysis: results from the C4MIP model intercomparison. J. Clim. 19, 3337-3353 (2006).

Ganopolski, A, and D. M. Roche, On the nature of lead-lag relationships during glacial-interglacial climate transitions. Quaternary Science Reviews, 28, 3361-3378 (2009).

Lee, T., Zwiers, F. & Tsao, M. Evaluation of proxy-based millennial reconstruction methods. Clim. Dyn. 31, 263-281 (2008).

Lunt, D.J., A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett. Earth system sensitivity inferred from Pliocene modeling and data. Nature Geosci., 3, 60-64 (2010).

Pagani, M, Z. Liu, J. LaRiviere, and A.C. Ravelo. High Earth-system climate sensitivity determined from Pliocene carbon dioxide concentrations. Nature Geosci., 3, 27-30 (2010).

I’m curious — what does long-term paleo evidence say about gamma? It seems to me that during glacial cycles, global T changes about 5 – 6 deg.C while global CO2 changes about 100 – 120 ppm, implying gamma is about 20 ppm/deg.C. Is this too simplistic?

Can’t you estimate this feedback by looking at the last glacial? Temperatures were about 6°C lower and the CO2 level was 180 ppm. Going into the interglacial, temperature rose and so did CO2 (to 280 ppm). That estimate would give ~15 ppm/°C. Or is this too simple?
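For reference, the back-of-envelope ratio in the two comments above works out as follows. This is a sketch only: the function name is hypothetical, the inputs are the round numbers the commenters quote, and the ratio does not separate the CO2 feedback from the warming that the CO2 itself causes.

```python
def naive_gamma(delta_co2_ppm, delta_t_degc):
    """Naive gamma: CO2 change divided by temperature change (ppm per degC)."""
    return delta_co2_ppm / delta_t_degc

# Glacial-interglacial ranges quoted above: 100-120 ppm over 5-6 degC.
low = naive_gamma(100.0, 6.0)    # smallest CO2 change, largest T change
high = naive_gamma(120.0, 5.0)   # largest CO2 change, smallest T change
print(f"{low:.1f} to {high:.1f} ppm/degC")
```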

Can someone point me to a somewhat nontechnical explanation of gamma? Thanks. I’m also curious about this statement from the article: “Still, based on these low estimates of gamma, the authors suggest that surprises over the next century may be unlikely.” What surprises are they talking about.

The CO2 increase between 1760 and 1850 isn’t correlated with temperature.

But isn’t there already anthropogenic CO2 by then (the beginning of the industrial era)?

So, in consequence, isn’t there a bias, or is it accounted, in this study?

Thank you for the excellent write up, Gavin. May I ask: did the models used to produce AR4 all include interactive carbon? Or, to put this another way: if gamma is indeed 10ppm per degree C, would that have much effect on the ensemble mean temperature projections given in AR4 for 2100?

Longer term feedbacks include melting of glaciers, sea ice, ice shelves, ice caps and changes in vegetation. Each of these have their own characteristic feedback sensitivity and response rates.

During the period 1050 to 1800, there were considerably more ice shelves in the Northern Hemisphere than there are now. So, what ever influence NH ice shelves had on gamma will no longer be a factor in the future.

Likewise, some day (perhaps soon) the same will be said about Northern Hemisphere sea ice.

Hi Gavin

Thanks. When I read this paper, the question that came to mind is that these results are for 275 to 285 ppmV and a narrow range of temperature as well. Would you expect them to be relevant to much higher levels of atmospheric carbon and temperature? Would carbon sources like permafrost and methane hydrates have been affected in some linear way?

best regards

Tony

On another tack I’ll recommend any of the articles at The Oil Drum documenting the status of coal reserves, such as this one, from 2007: COAL – The Roundup

2. The Future of Coal, a study by B. Kavalov and S. D. Peteves of the Institute for Energy (IFE), prepared for European Commission Joint Research Centre

Read the report (PDF, 1.7 MB, 52 pp).

The report identifies three trends:

* Proved reserves are decreasing fast – unlike oil and gas.

* Bulk of coal production is concentrated within a few countries.

* Coal production costs are rising all over the world.

The following observations are also made:

* Hard coal in the EU is largely depleted.

* Six countries (USA, China, India, Russia, South Africa, Australia) hold 84% of world hard coal reserves. Four out of these six (USA, Russia, China, Australia) also account for 78% of world brown coal reserves.

* Growth in consumption has not been matched by increasing reserves leading to falling R/P ratios.

* Poor investment over the past 10-15 years.

* The USA and China, former large net exporters, are gradually turning into large net importers with an enormous potential demand, leaving Australia as the “ultimate global supplier of coal”. However all of Australia’s steam coal exports are equal to only 5% of Chinese steam coal consumption.

Another excellent article was Richard Heinberg’s Coal in the United States, which was later expanded into an excellent book, Blackout, covering the status of coal reserves worldwide. None of this is to say that ample reserves of coal exist to continue extraction for decades, but the more extreme scenarios formulated by the IEA and utilized by the IPCC deserve much closer scrutiny than the eternally optimistic numbers government agencies give them.

Very interesting summary, Jim; thanks.

Can someone who has a subscription to Nature identify the data sets shown in the temperature anomaly chart in Figure 1?

The above says that sensitivity is MUCH higher than previously thought. It is degrees per 10 ppm rather than degrees per doubling. Am I right or did I miss something?

Thanks for this. Two questions:

First, if we use these low values of Gamma, can Earth escape an ice age?

[Response: Good question. Don’t know but I much doubt it. Hope people start discussing that. Jim]

Second, using meatball physics, there should be absolute temperature thresholds at which Gamma takes a step e.g. changes in ocean pH changing the solubility of CO2, letting methane hydrates loose assuming the time resolution here is long enough that the CH4 oxidizes just to name two.

[Response: meatball physics? :)]

Are they only finding the natural amplification of CO2 and thus NOT the sensitivity? I read the abstract at Nature, but I don’t have access to the whole article. Gavin, please clarify.

If they are finding only the amplification, they have omitted the ocean bottom clathrates and the tundra peat bogs. The amplification only applies to a narrow range of temperature and CO2. Am I reading it correctly now?

So how does the article help us predict what comes next in our current situation?

The Death of Global Warming

http://blogs.the-american-interest.com/wrm/2010/02/01/the-death-of-global-warming/

Science is losing the battle…….

With regards to the paragraph

“One such involved the analysis of time lags. The authors found that in 98.5% of their regressions, CO2 lagged temperature… these results from the past millennium support the usual interpretation of the ice core record over the later Pleistocene, in which CO2 acts as a feedback to temperature changes initiated by orbital forcings.”

Based upon analysis of current orbital forcings, can this be substantiated? I mean does the beginning of the current warming fit into the cyclical pattern determined from Milankovitch cycles? If this is the case, then at what point can we really differentiate orbital forcings from CO2 forcings with regards to the most recent warming? Particularly with evidence of a 1000 year warming cycle evidenced in Viau et al. (2006) also being superimposed on this. (Yes, it’s pollen records, but the analysis is quite robust and involves quite a significant number of proxies.)

I have questions :-)

1. Any idea why the “gamma lag” in the last millennium would be 80 years while the gamma lag coming out of a glacial period is 800?

2. Given that 80 years is long relative to the fast temperature feedbacks, does the definition of gamma include those feedbacks? In other words, suppose that over some time period >>80 years, temperature goes up 1C while CO2 goes up 20ppmv … but assuming a sensitivity of 3C and that the 20ppm was from 280 to 300, then the 20ppm was responsible for 0.45C of the warming itself … so is gamma 20, or is gamma 20/.55 = 36? I’m guessing that the definition includes feedbacks (so gamma is 20) because the definition of sensitivity includes feedbacks and taking the feedback out involves more uncertainty.

3. If gamma does include feedbacks, then don’t we already have a pretty well constrained estimate of gamma from the glacial/interglacial ice cores – something like 100ppmv over 6-8C, so gamma is around 14? I realize that’s in a different regime from the present, and the temperature changes include slower feedbacks, but it seems like the logic should hold, unless there is a “phase change” to the behavior of the carbon cycle around current temperatures.

Thanks!

I’m not convinced this article is particularly helpful in considering future CO2 feedbacks:

(i) There’s a little bit of a strawman feel to it. A couple of previous papers suggesting large carbon cycle feedbacks are used as a justification for re-stating what we really knew already, namely that the historical CO2-response to warming is positive but likely less than 20 ppm per °C of earth temperature rise. That’s consistent with the glacial interglacial response (around 90 ppm of CO2 release per 5/6 °C of warming; i.e. ~ 15-18 ppm/°C), and with previous estimates from analysis of the last millennium of a CO2 response of ~ 12 ppm/°C:

Gerber S et al. (2003) Constraining temperature variations over the last millennium by comparing simulated and observed atmospheric CO2 Climate Dynamics 20, 281-299

http://www.meteo.psu.edu/~mann/shared/articles/GerberClimDyn03.pdf

(ii) I can’t find any indication in the paper of the timescales of these feedbacks. Are these equilibrium feedbacks (as expected for the very long term response during glacial-interglacial cycles)? Or are these transient feedbacks that apply on short timescales (decadal; multidecadal?).

(iii) They address the question within a very narrow set of boundary conditions (the period 1050-1800 AD), with slow global temperature variations amounting to around 0.5 °C in total across many centuries. Surely the concern for the future is the carbon cycle response to fast temperature variations encompassing potentially several °C of warming.

Here’s Friedlingstein 2006:

http://eric.exeter.ac.uk/exeter/bitstream/10036/68733/1/Model%20Intercomparison.pdf

These ecological models are all over the place, in other words (giving response factors between 20-200 ppm over one century) – and they are awfully complex, while also lacking key elements – It’s hard to include the nitrogen cycle, for example, which can have impacts on the carbon cycle (due to nutrient limitation issues). The climate models seem far more robust.

What also makes the approach seem somewhat curious is that the CO2 changes over the time period 1080-1800 are very small, as are the temperature changes – so you’re trying to calibrate using conditions that are very different from expected future conditions. Major factors could be ignored – i.e. the models could suffer from systematic biases.

The main issue is that the permafrost was stable over that period, but that’s no longer the case:

Zimov et al. 2006 Permafrost and the Global Carbon Budget Science (pdf)

Look at the accounting – if CO2 went from 360 gigatons at the last glacial maximum, to 560 Gt before 1800, to around 740 Gt today – and if the yedoma permafrost contains about 500 Gt, as estimates go, then clearly even partial transfer of that carbon to the atmosphere could have some large effects.

If these rates are sustained in the long term, as field observations suggest, then most carbon in recently thawed yedoma will be released within a century – a striking contrast to the preservation of carbon for tens of thousands of years when frozen in permafrost.

That seems to give a CO2 sensitivity well above any previous coupled model estimation, doesn’t it? Notice that polar amplification trends will result in temperature responses well above 1.5C /century.

It’s hard to tell from the abstract, but this current Nature paper seems to neglect permafrost emissions. There’s even an additional uncertainty – will permafrost emissions be dominated by methane or by carbon dioxide? That could also be a critical factor.

If they left out the permafrost, it’s likely not a very good prediction.

Most clear, thank you!

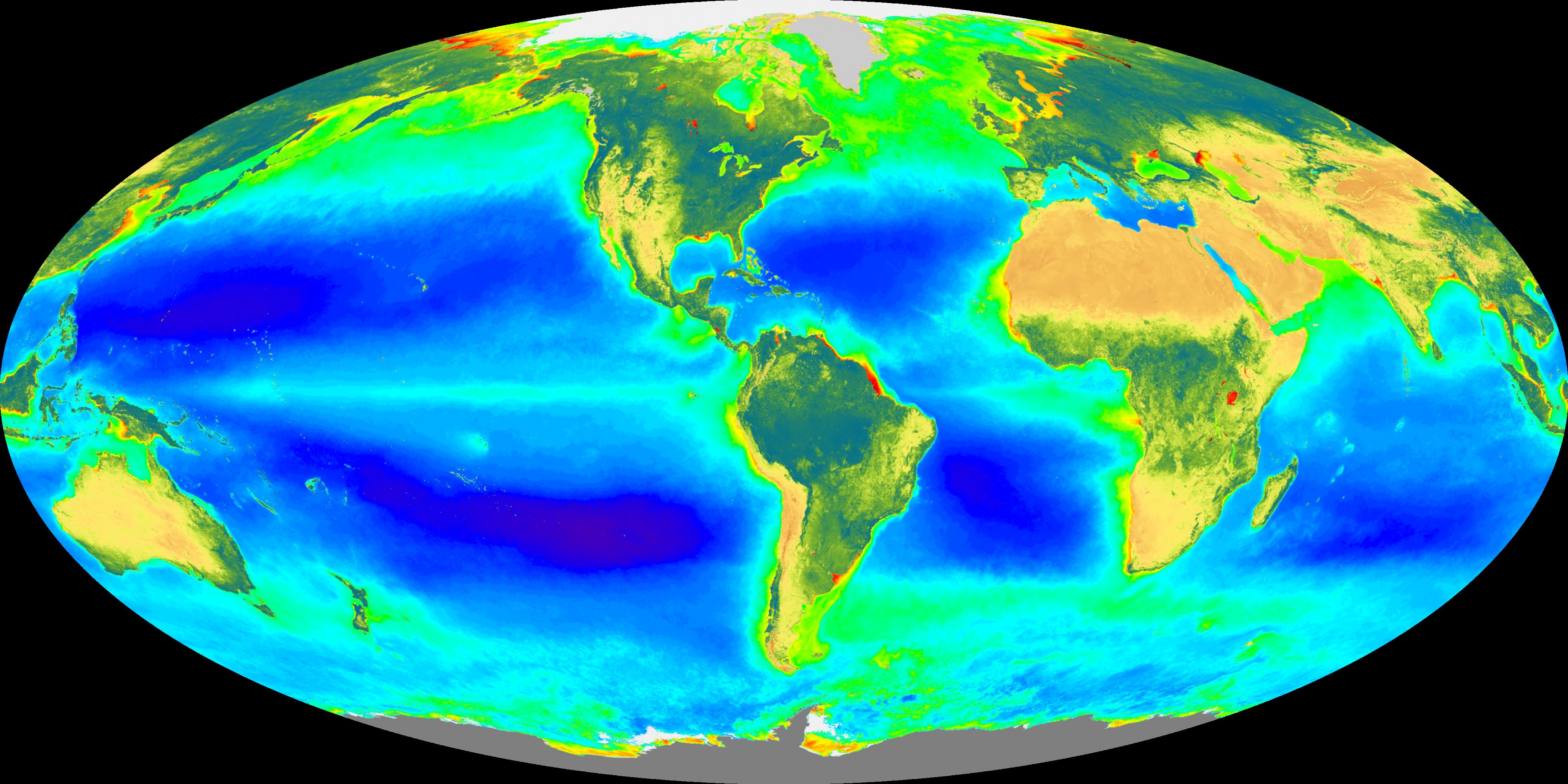

Interesting contribution and caveats in the analysis. The main of the statistical argument seems to assume the biogeochemical cycles of the photosynthetic plankton hold consistent even under the onslaught of the massive pulse of atmospheric carbon; near certain ensuing effects, such as ocean acidification, await. As a specific concern, wouldn’t one encounter (unpredictable) non-linear macro-ecological responses of the Earth carbon cycle to arise at some point if we continue atmospheric carbon release?

What is the state of knowledge of the carbon cycle particularly on the biological side of things? Any studies come out since the latest IPCC report?

17 Ike Solem: What is yedoma?

Is there an explanation for the drop around 1500?

Vendicar, global warming is not dead. Why? Because within the next five years we will see:

1) A new global maximum temperature anomaly,

2) A new minimum Arctic sea ice extent, and

3) A rapid and accelerating disintegration of the Pine Island and Thwaites Glaciers in Antarctica, among other things.

Okay, my judgement, but I would bet money on two out of three.

Edward Greisch (10) — Read again. Gamma is in units of ppm/K. Makes no difference to equilibrium climate sensitivity.

Various commenters —

The long lags of CO2 after temperature seen at the end of LGM do not appear to be the same at the beginning of every interglacial; consider especially the beginning of the one in MIS 11, the prior interglacial most similar in orbital forcing to the Holocene. I, in my amateur way, attribute the long lag measured at the end of the LGM to the expressed CO2 from the warming oceans being snatched up immediately by growing vegetation.

I take the approximately 10 ppm/K as being in fairly decent agreement with the LGM to Holocene transition, once the positive feedback of CO2 upon temperature is taken into account; for that interval of time one has to solve the entire feedback equation.

This estimate of gamma certainly takes almost none of the potential methane releases into account. It does take some because there is evidence of fairly recent and continuing methyl clathrate blowouts which are presumably due to the continued warming of the oceans now that the glacial is over. Remember, all that water takes a long time to warm up.

I have a bunch of questions:

1) You say in paragraph 2:

“Values of gamma are typically positive in these studies (i.e. increased T => increased CO2).”

This is a bit confusing. When you say ‘value is positive’, do you imply they are >0 (zero), or are you referring to absolute values?

For example, in a cooling period, gamma is high, but its ‘sign’ is negative, (i.e. reducing temperature => rapidly falling CO2).

I do understand that if one considers the whole preindustrial millennium, gamma values are positive (>0) overall.

Great post!

Regards

Anand

Minor typo: “methodologicalvariables”.

KLR #8: in Australia, the coal industry with government backing is aiming for a rapid expansion in production and exports, with plans for new mega-mines; one project alone will add more than 10% to Australia’s output. Given the Australian federal and Queensland state governments claim they aren’t climate change deniers, I wonder what they think they actually are.

With contributions like that to the forcing, you wonder how vital it is to fine-tune our understanding of the feedbacks.

“It’s hard to tell from the abstract, but this current Nature paper seems to neglect permafrost emissions.”

It doesn’t consider any sources at all, Ike; it’s not a mechanistic paper (no physics, geology, biology, ecology). It’s a statistical comparison between two groups of published time series data: proxy temperature reconstructions (which they recalibrate) and ice core CO2 concentrations. They actually just divide the second by the first (with an 80 year lag), average the result over a couple of different time intervals and call that their gamma. They crunch the numbers using Monte Carlo simulation, though, oddly, they don’t call it that. Hence the probability distribution output.

RE Don Shor

Don, it turns out that the reconstructions are in the SI, which is freely available. Of course, without the article, you would not have known that. ;-)

Figure S1 shows: Jones 1998, Briffa 2000, MannJones 2003, Moberg 2005, D’Arrigo 2006, Hegerl 2007, Frank 2007, Juckes 2007, Mann 2008.

Excellent analysis, Gavin, and thank you much for focusing on the interpretation of the science (as always) of how it helps us to understand the system, rather than getting caught up in the numbers.

I’m a numbers guy, though, so I tried to put together an equation / graph that would represent what this means, in a quantitative sense, to the climate.

CO2 Feedback Miscalculation Graph

What I basically did was to say, okay, if we see 3C from a doubling in CO2, we started at 280 ppm as the norm, and we’re up to 387.5 ppm now (2010), then we’ve already committed to a temperature increase of log(387.5/280) * 0.996 (last factor is from k[log(2)]=3C, hence k=0.996).

[Response: Something’s not seeming right here. First it should be natural logs, not base 10 logs. The summary forcing equation for CO2 is RF = 5.35*ln(C2/C1), or 3.71 W/sq m for a doubling, where C2 and C1 are the ending and beginning CO2 concentrations respectively. Assuming an equilibrium (fast feedback only) climate sensitivity of 3C, this gives an equilibrium temperature change due to changes so far, of T = (3/3.71)*5.35*ln(387.5/280) = 1.41 deg C. Not all of this has been realized yet of course–Jim

see https://www.realclimate.org/index.php/archives/2007/08/the-co2-problem-in-6-easy-steps/]
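The arithmetic in the response above can be reproduced with a short Python sketch. The function names are hypothetical; the 5.35 coefficient, the 280 ppm baseline, and the 3C per doubling sensitivity are the values quoted in the thread. Because only the ratio of logarithms matters, a base-10 form gives the same answer as long as its constant is 3/log10(2).

```python
import math

def co2_forcing(c_end_ppm, c_start_ppm):
    """Simplified CO2 radiative forcing in W/m^2: RF = 5.35 * ln(C2/C1)."""
    return 5.35 * math.log(c_end_ppm / c_start_ppm)

def equilibrium_warming(c_end_ppm, c_start_ppm=280.0, sensitivity_per_doubling=3.0):
    """Equilibrium (fast feedback only) warming in degC for a CO2 change."""
    forcing_per_doubling = co2_forcing(2.0, 1.0)  # about 3.71 W/m^2
    return sensitivity_per_doubling * co2_forcing(c_end_ppm, c_start_ppm) / forcing_per_doubling

def equilibrium_warming_log10(c_end_ppm, c_start_ppm=280.0, sensitivity_per_doubling=3.0):
    """Same quantity written with base-10 logs: k * log10(C2/C1)."""
    k = sensitivity_per_doubling / math.log10(2.0)
    return k * math.log10(c_end_ppm / c_start_ppm)

print(round(equilibrium_warming(387.5), 2))  # 1.41
```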

I’m presuming that the 3C/doubling number is based on models which already assumed some degree of CO2 feedback (in whatever fashion, either by just including it outright, or by modeling in specific events like the eventual mutation of some percentage of forests into savannas at certain tipping points, or whatever).

[Response: I don’t know that the models do (or don’t, some may now), but the empirical estimates from the end of the Pleistocene almost certainly include some terrestrial and oceanic feedbacks–Jim]

I presumed a constant rate of anthropogenic CO2 increase equal to the average of the last 10 years (1.953 ppm/year).

[Response: 1.87 is what I get, using Mauna Loa from 1998 to 2008, but close enough–Jim]

I also presumed that we have not yet seen any CO2 feedback as a result of warming to date (i.e. that it’s too soon in the cycle, and, as Gavin pointed out in his opening paragraph, CO2 feedback would be relatively slow).

[Response: Possible rub there, see below]

With this, then, we can compute a new ultimate temperature increase, for each year that we continue to add 1.953 ppm CO2, by adding more or less CO2 feedback to the equation than was originally presumed. Note that while the equation appears to be adding or subtracting CO2, that’s not really what’s happening. It’s simply adding/subtracting the warming that would have resulted from changing the CO2 feedback — CO2 itself is not actually being increased or reduced, per se.

I included a case where the models are accurate (pure 3C/doubling) and so there is a 0 ppm/C adjustment (black), where the models underestimated CO2 feedback by 7 ppm/C (blue), and cases where they overestimated CO2 feedback by 7 ppm/C, 14 ppm/C, 21 ppm/C, and 28 ppm/C (cyan, green, orange, red). I had originally graphed lines for having underestimated CO2 feedback by 14 ppm/C, 21 ppm/C and 28 ppm/C, but they were too depressing to look at.

Did I make any mistakes? Is my logic (and end result) sound?

[Response:OK, I see from below that you did come up with 1.4 somehow, just via a different route, so OK there. Everything seems fine except one: your graph lines don’t take into account that there has already been some (unknown) degree of cc feedback contribution. But, even if we take the authors’ mean estimate of 10.2 ppm/degree (or any other value), we don’t know the lag time that the feedback is operating on (only that it’s something 80 or less). If it’s 80, the cc feedback to date is negligible, but if it’s 10, it’s potentially much more substantial. Therefore it’s hard to know whether your graph lines are in the right place, or need to be shifted (to the left). And of course the issue of leaving out the higher possible feedback rates. This points up another reason why I wish the authors had included the details on the observed time lags–important info it seems to me–Jim]

Quick note: The graph does not show actual temperature increases. Obviously we haven’t already warmed the planet by 1.4C as of 2010. The graph shows what the eventual, inevitable increase will be based on the “setting of the CO2 dial,” i.e. based on how much we have already added and may continue to add in anthro CO2. Hence, the intersection of the black line at 1.4C at 2010 isn’t saying we’ve warmed by that much already. It’s just saying that, no matter what we do from here on out, that’s how much we will warm once all of the feedbacks play out (and therefore the label “Committed Temperature Increase”).

Correspondingly, moving forward in time, it suggests that the current 3C/doubling number will see us committed to a 2C increase by 2039… or sooner or later, if the CO2 feedback is more or less than expected.
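A rough way to check the 1.4C figure, as a minimal sketch rather than a reconstruction of the graph: it assumes warming scales with the logarithm of CO2 concentration, a 280 ppm preindustrial baseline, a CO2 level of about 387 ppm around 2010, and no adjustment for CO2 feedback (the black-line case). The function names are made up for illustration. It does not model an emissions trajectory, so it cannot confirm the 2039 date, only the concentration at which a 2C commitment would be reached.

```python
import math

def committed_warming(co2_ppm, baseline_ppm=280.0, sensitivity_per_doubling=3.0):
    """Eventual warming (deg C) implied by a CO2 level, assuming a logarithmic response."""
    return sensitivity_per_doubling * math.log2(co2_ppm / baseline_ppm)

def co2_for_commitment(target_degc, baseline_ppm=280.0, sensitivity_per_doubling=3.0):
    """CO2 level (ppm) at which the committed warming reaches target_degc."""
    return baseline_ppm * 2.0 ** (target_degc / sensitivity_per_doubling)

# Assumed inputs: 387 ppm as the ~2010 concentration, 3C per doubling.
print(round(committed_warming(387.0), 2))   # committed warming at 387 ppm
print(round(co2_for_commitment(2.0), 1))    # ppm needed for a 2C commitment
```

Under these assumptions the commitment at 387 ppm comes out at about 1.4C, and a 2C commitment corresponds to roughly 444 ppm.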

Typo in the previous post, although the graph is correct: k=9.996 (not 0.996).

It’ll be really great to see this technique refined and improved, it seems as though it has some genuine promise for refining “our” predictive abilities, if my woefully amateurish reading of it is somewhat on the mark.

An exceptionally nice writeup by Gavin, by the way. You should get a special medal for phlegmatically plowing forward despite all. Thank you.

Robert W, Based on Milankovitch cycles, we ought to be cooling now. However, such cycles are not the only things that can cause temperature to change. Insolation can change or volcanism can change the amount of light that reaches the surface.

#1, #2

Another possibility is that the value you are using for the temperature change is too low; if delta_T = 10C, one obtains gamma = 10 ppm/deg_C, which is in good agreement with the result described in the post.
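The arithmetic behind that remark can be sketched as follows. This is only an illustration: the 100 ppm CO2 change is an assumption implied by the numbers in the comment (10C at 10 ppm/deg_C), and the 5C alternative is a hypothetical value included to show how strongly gamma depends on the assumed temperature change.

```python
def gamma_ppm_per_degc(delta_co2_ppm, delta_t_degc):
    """Gamma as the ratio of a CO2 change (ppm) to the temperature change (deg C)."""
    return delta_co2_ppm / delta_t_degc

delta_co2 = 100.0  # assumed CO2 change in ppm, implied by the comment's numbers

# Halving the assumed temperature change doubles the implied gamma.
for delta_t in (5.0, 10.0):  # 5.0 is a hypothetical alternative
    print(delta_t, gamma_ppm_per_degc(delta_co2, delta_t))
```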

There seems to be some misunderstanding regarding what the authors did, relative to the time scales of various feedbacks. Perhaps I failed to make this clear.

The authors were trying to provide estimates of gamma over policy relevant timespans–the next several decades to a century. This is clear both from their opening sentence, and from details of their study methods. I assume this is why they limited the evaluation of time lags to +/- 80 years.

Several mentioned the estimates from the Pleistocene. The authors are aware of the use of Pleistocene-based estimates of gamma. But the range of timescales involved there is different, and so there is potential for confounding by multiple, simultaneous variations in other factors–e.g. albedo and ice-free land area for starters. Of course there is potential for confounding in their empirical estimates also, but by fewer factors, and they tried to control for the (likely) most important one: land cover changes.

The main point though, to me, goes along the lines Ike Solem discusses.

Charlie: They mean unexpectedly high increases in atmospheric CO2, in response to high feedback rates.

Edward: You have the sensitivity idea reversed. This is the sensitivity of CO2 to T, not T to CO2. Not sure what you have in mind re amplification vs feedback. The study makes no attempt to discriminate pathways/mechanisms.

Robert: The point on the orbital forcing example was simply that CO2 can lag temperature, not that the current warming is forced in any way by orbital factors (it’s not). Orbital forcings are very predictable.

GFW: (1) policy relevant scales (see above). The 80 years was not a finding of the study, but simply the limits of the explored time lags. (2) Yes, it does include them. It would implicitly include any T feedbacks operating over the scales that the individual regressions were performed over–which ranged up to several centuries. Gamma would be 20 in your example.

Chris: (1) I understand your point but I think the strawman idea is wrong. Testing existing parameter estimates using different methods, and/or over different time or space scales, is a big and important part of validation in science. If their values are in line with Pleistocene-based estimates, that’s important information. Also, a major point of the paper is to account for the uncertainty in the reconstructed T record since 1050–that’s new. (2) No, they are transient–they have to be, given that there’s no way to control an empirical estimate and thus run it out to equilibrium. (3) Yes, I made that point in the article.

Ike: This is exactly the type of discussion I was hoping would result when I talked about no-analogue states and models vs empiricism.

The fact that we do not now have the forest land area buffer–the potential forest area uncovered by ice during deglaciations–is another major difference from the Pleistocene warmings. Nor do we have anywhere near a full handle on the global ecosystem productivity response to current and future temperatures. You mention N, but an even bigger factor is precipitation and humidity patterns.

Jim Redden: A critical question indeed.

I’d expect some genes in the ocean biota remain at low levels from previous times when there have been rapid changes, and may be favored in the current rapid change. Looking:

http://www.google.com/search?q=evolution+coccolithophores

turns up for example

http://www.elsevier.com/wps/find/bookaudience.cws_home/712230/description

particularly Ch. 12, “Origin and evolution of coccolithophores: from coastal hunters to oceanic farmers.”– they’re still out there in the oceans, threatened by pH change, but I’d think with some genetic equipment that will handle rapid changes since they evolved in similar situations.

Wild speculation of course on my part.

Another good one:

http://www.cprm.gov.br/33IGC/1349196.html

Evolution of coccolithophores: Tiny algae tell big tales

“… Coccolithophores, a group of calcifying unicellular algae, constitute a major fraction of oceanic primary productivity, and generate a continuous rain of calcium carbonate (in the form of coccoliths) to the seafloor. Hence, they play an important role in the global carbon cycle, representing natural feedbacks in the climate system. Coccolithophores are a crucial biological group subjected to present-day climate change and ocean acidification due to oceanic uptake of excess atmospheric CO2. It is not known to what extent the natural populations can acclimatize or adapt to these changes, nor how their natural feedback mechanisms will operate in future. However, we can learn valuable lessons from their past and present-day behavior: Coccolithophore evolutionary rates are fast, with a fossil record dating back to 220 million years. Their global and abundant fossil occurrences in deep-sea sediments provide detailed records of plankton evolution that can be readily compared to proxy records of past ocean environmental parameters. In addition, cultivation of extant species of coccolithophore can inform us how modern calcifiers respond to different experimental physico-chemical conditions, and what factors are pivotal to sustain algal growth and biocalcification. Intriguingly, distinct responses between different modern species could relate to variable evolutionary adaptation strategies of their Cenozoic ancestors.”

Guest Commentary by Jim Bouldin (UC Davis):

Excellent explanatory review.

Thanks, Steve.

Yedoma – Wikipedia, the free encyclopedia

Yedoma is an organic-rich (about 2% carbon by mass) Pleistocene-age loess permafrost with ice content of 50–90% by volume. The amount of carbon trapped in …

en.wikipedia.org/wiki/Yedoma

——-

If you invert gamma, you get a sensitivity of at least 1 degree C per 10 ppm, which would have given us 10 degrees C between 280 and 380 ppm. That didn’t happen. I think the take-home message is that Mother Nature doesn’t sit still for tickling, not even a little bit of tickling. If you add a little CO2, Nature is going to react to it somehow; probably by raising the temperature and then adding more CO2, etc. Since Nature has all of the cards, it is not clever for humans to play the game.

Surprises unlikely? I don’t agree. Jim Bouldin is in a “Black Swan” situation. See the book by that name.

http://www.scientificamerican.com/article.cfm?id=defusing-the-methane-time-bomb

Oh, brother, I failed to notice the author of this. Gavin’s articles are -always- great, of course, and Jim Bouldin did a great job with this one. Am I digging fast enough?

Gavin, please go over why there are 2 distributions for 2 time periods. What happened in 1550 that made the distribution change?

The Black Swan: The Impact of the Highly Improbable

By Nassim Nicholas Taleb.

Like the turkey who thinks that the human farmer is benevolent for 1000 days, then comes Thanksgiving; economists have been fooled by their own statistical analysis.

“[T]he authors suggest that surprises over the next century may be unlikely.” Sorry Gavin, I just don’t understand Jim Bouldin’s reasoning on this one. Could you elaborate? I think we have already identified some “black swans,” such as Yedoma, clathrates, tundra peat bogs and melting Arctic ice.

As a naive guess, it seems unlikely that gamma would be linear over large temperature swings simply because of increasing areas of carbon stores becoming ‘unfrozen’ as ice-covered land and sea reduces (eg permafrost / clathrates).

16 Chris: Cites a paper that says: “Simulations with natural forcings only suggest that atmospheric CO2 would have remained around the preindustrial concentration of 280 ppm without anthropogenic emissions.”

THAT is a reasonable conclusion from Jim Bouldin’s paper. Without people burning fossil fuels, the present CO2 concentration of 387 ppm and 455 ppm equivalent would not have happened. It is worth testing the proposition that the extra greenhouse gas is indeed anthropic [human caused]. We can say with greater confidence that humans have caused the 100 ppm increase in CO2 and the increase in the other greenhouse gasses. Nature just would not have done it by herself.

This lower sensitivity could well explain why higher temperatures in the last 3 interglacial periods did not tip the earth into runaway global warming from higher methane or CO2 release in the Arctic and subarctic.

@1, 2

Possibly, carbon/climate feedbacks during the LGM involve longer-term processes (deep oceans, ice sheet dynamics…) which are not relevant to the centennial time-scale of human-induced climate change… ?

It seemed to me that the Cox and Jones estimate of gamma (20-60 ppm/°C) was already a conservative one: they used the Moberg T reconstruction, which has a large variance, thus minimizing the estimation of gamma…

Any comments ?

RE: 19

Hey Jim,

The main interest I would have is to see what would happen if we were to suddenly start growing phytoplankton in large tank farms and porting the organic mass to defunct oil wells and coal mines and pumping a bit of the added carbon back into the earlier geologic epoch.

As to the expectation of a CO2 biologic response that would step up: that would be appropriate if there were fewer humans on the planet, allowing more of the arable land to “go wild” and convert the CO2 to biomass. Instead humans have now converted nearly 82% of the Earth’s arable land to cultivation.

This only leaves the oceans to rise to meet the added CO2 supply, and as you may be aware the theory is that CO2 is not only possibly more biologically damaging but, according to recent measurements, less likely to dissolve in the “warmer” oceans. (This is one of those little confounding bits of discovery many seem to miss…)

This then suggests that the answer to your question is likely a no.

Cheers!

Dave Cooke

CO2 and temperature are interconnected and drive each other when not in equilibrium, as long as the Earth’s ecological systems support them. Tipping points mark these shifts, like falling pieces in a domino game.

“The above says that sensitivity is MUCH higher than previously thought. It is degrees per 10 ppm rather than degrees per doubling. Am I right or did I miss something?

Edward Greisch”

You are thinking about the sensitivity of temperature to CO2. This article refers to the increase of CO2 in response to temperature.
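To make the direction of gamma concrete, here is a minimal sketch that uses the mean estimate of 10.2 ppm/degree quoted in the response above. The function name is made up for illustration, and the calculation ignores the unknown lag time over which the feedback operates, so it gives only the eventual CO2 response, not its timing.

```python
GAMMA_PPM_PER_DEGC = 10.2  # mean estimate quoted earlier in the thread

def co2_feedback_ppm(warming_degc, gamma=GAMMA_PPM_PER_DEGC):
    """Extra atmospheric CO2 (ppm) released in response to a given warming (deg C)."""
    return gamma * warming_degc

# Temperature is the input and CO2 is the output, the reverse of climate sensitivity.
print(co2_feedback_ppm(1.0))
```

On this reading, one degree of warming eventually adds roughly 10 ppm of CO2. It does not mean that 10 ppm of CO2 produces one degree of warming.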