Guest Commentary by Jim Bouldin (UC Davis)

How much additional carbon dioxide will be released to, or removed from, the atmosphere, by the oceans and the biosphere in response to global warming over the next century? That is an important question, and David Frank and his Swiss coworkers at WSL have just published an interesting new approach to answering it. They empirically estimate the distribution of gamma, the temperature-induced carbon dioxide feedback to the climate system, given the current state of the knowledge of reconstructed temperature, and carbon dioxide concentration, over the last millennium. It is a macro-scale approach to constraining this parameter; it does not attempt to refine our knowledge about carbon dioxide flux pathways, rates or mechanisms. Regardless of general approach or specific results, I like studies like this. They bring together results from actually or potentially disparate data inputs and methods, which can be hard to keep track of, into a systematic framework. By organizing, they help to clarify, and for that there is much to be said.

Gamma has units of ppmv per ºC. It is thus, loosely, the inverse of climate sensitivity: in climate sensitivity CO2 is the forcing and T is the response, whereas for gamma T is the driver and CO2 is the response. Carbon dioxide can, of course, act as both a forcing and a (relatively slow) feedback; slow at least when compared to faster feedbacks like water vapor and cloud changes. Estimates of the traditional climate sensitivity, e.g. Charney et al. (1979), are thus not affected by the study. Estimates of more broadly defined sensitivities that include slower feedbacks (e.g. Lunt et al. (2010), Pagani et al. (2010)) could be, however.

Existing estimates of gamma come primarily from analyses of coupled climate-carbon cycle (C4) models (analyzed in Friedlingstein et al., 2006), and a small number of empirical studies. The latter are based on a limited set of assumptions regarding historic temperatures and appropriate methods, while the models display a wide range of sensitivities depending on assumptions inherent to each. Values of gamma are typically positive in these studies (i.e. increased T => increased CO2).

To estimate gamma, the authors use an experimental (“ensemble”) calibration approach, analyzing the time courses of reconstructed Northern Hemisphere T estimates and ice core CO2 levels from 1050 to 1800 AD. This period represents a time when both high resolution T and CO2 estimates exist, and in which the confounding effects of other possible causes of CO2 fluxes are minimized, especially the massive anthropogenic input since 1800. That input could completely swamp the temperature signal; the authors’ choice is thus designed to maximize the likelihood of detecting the T signal on CO2. The T estimates are taken from the recalibration of nine proxy-based studies from the last decade, and the CO2 from three Antarctic ice cores. Northern Hemisphere T estimates are used because their proxy sample sizes (largely dendro-based) are far higher than in the Southern Hemisphere. However, the results are considered globally applicable, due to the very strong correlation between hemispheric and global T values in the instrumental record (their Figure S3, r = 0.96, HadCRUT basis), and also between ice core and global mean atmospheric CO2.

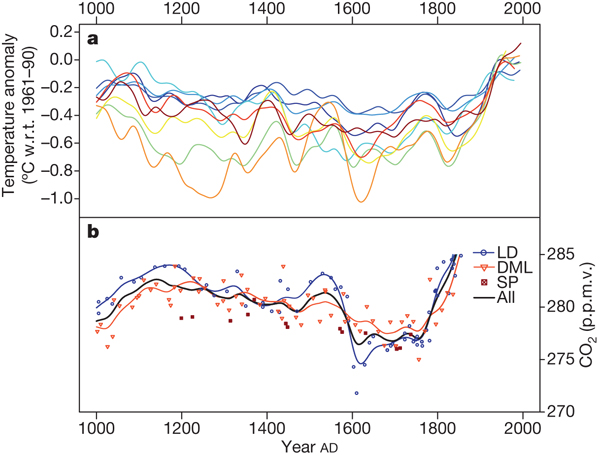

The authors systematically varied both the proxy T data sources and methodological variables that influence gamma, and then examined the distribution of the nearly 230,000 resulting values. The varying data sources include the nine T reconstructions (Fig 1), while the varying methods include things like the statistical smoothing method, and the time intervals used to both calibrate the proxy T record against the instrumental record, and to estimate gamma.

Figure 1. The nine temperature reconstructions (a), and 3 ice core CO2 records (b), used in the study.

Some other variables were fixed, most notably the calibration method relating the proxy and instrumental temperatures (via equalization of the mean and variance for each, over the chosen calibration interval). The authors note that this approach is not only among the mathematically simplest, but also among the best at retaining the full variance (Lee et al., 2008), and hence the amplitude, of the historic T record. This is important, given the inherent uncertainty in obtaining a T signal, even with the above-mentioned considerations regarding the analysis period chosen. They chose the time lag, ranging up to +/- 80 years, which maximized the correlation between T and CO2. This was to account for the inherent uncertainty in the time scale, and even the direction of causation, of the various physical processes involved. They also estimated the results that would be produced from 10 C4 models analyzed by Friedlingstein et al. (2006), over the same range of temperatures (but shorter time periods).
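As a rough illustration of the two steps just described, here is a minimal Python sketch; it is not the authors' code. It rescales a proxy series so that its mean and variance match the instrumental series over a calibration window, then scans a range of lags for the one that maximizes the T to CO2 correlation and reports the regression slope at that lag as a gamma-like number. The function names, the synthetic data and the plain least-squares slope are all assumptions made for illustration, and the smoothing and ensemble details of the paper are left out.

```python
import math
from statistics import mean, pstdev


def match_mean_variance(proxy, cal_proxy, cal_instr):
    """Rescale a proxy series so that, over the calibration window,
    its mean and standard deviation equal those of the instrumental data."""
    p_mean, p_sd = mean(cal_proxy), pstdev(cal_proxy)
    i_mean, i_sd = mean(cal_instr), pstdev(cal_instr)
    return [(x - p_mean) / p_sd * i_sd + i_mean for x in proxy]


def _moments(x, y):
    """Return sums of cross-products and squares about the means."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy, sxx, syy


def best_lag(temp, co2, max_lag):
    """Scan lags from -max_lag to +max_lag (in time steps) and return
    (lag, r, slope) for the lag with the highest correlation.
    A positive lag means CO2 lags temperature."""
    best = None
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            t, c = temp[:len(temp) - lag], co2[lag:]
        else:
            t, c = temp[-lag:], co2[:len(co2) + lag]
        sxy, sxx, syy = _moments(t, c)
        r = sxy / math.sqrt(sxx * syy)
        if best is None or r > best[1]:
            best = (lag, r, sxy / sxx)  # slope of CO2 on T, ppm per degree
    return best


# Synthetic demo (made-up numbers): CO2 follows T by 3 steps at 8 ppm per degree.
temp = [math.sin(0.3 * i) + 0.01 * i for i in range(60)]
co2 = [280.0 + 8.0 * temp[max(i - 3, 0)] for i in range(60)]
demo_lag, demo_r, demo_gamma = best_lag(temp, co2, max_lag=10)
print(demo_lag, round(demo_gamma, 2))
```

Picking the lag with the highest correlation is simple, but it is also a selection step; as noted further down, it could bias the resulting estimates high.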

So what did they find?

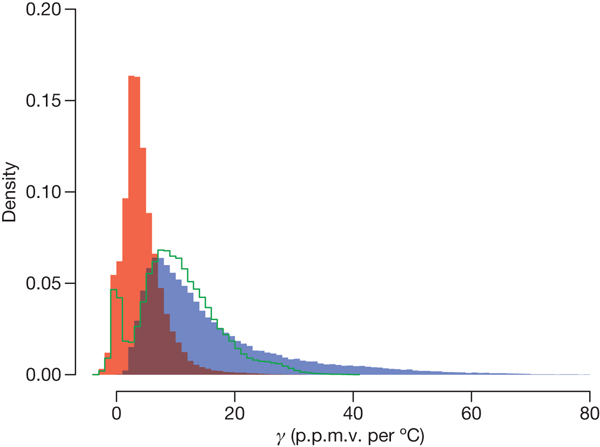

In the highlighted result of the work, the authors estimate the mean and median of gamma to be 10.2 and 7.7 ppm/ºC respectively, but, as indicated by the difference in the two, with a long tail to the right (Fig. 2). The previous empirical estimates, by contrast, come in much higher, at about 40 ppm/degree. The choice of the proxy reconstruction used, and the target time period analyzed, had the largest effect on the estimates. The estimates from the ten C4 models were higher on average; it is about twice as likely that the empirical estimates fall in the model estimates’ lower quartile as in the upper. Still, six of the ten models evaluated produced results very close to the empirical estimates, and the models’ range of estimates does not exclude those from the empirical methods.

Figure 2. Distribution of gamma. Red values are from 1050-1550, blue from 1550-1800.

Are these results cause for optimism regarding the future? Well the problem with knowing the future, to flip the famous Niels Bohr quote, is that it involves prediction.

The question is hard to answer. Empirically oriented studies are inherently limited in applicability to the range of conditions they evaluate. As most of the source reconstructions used in the study show, there is no time period between 1050 and 1800, including the medieval times, which equals the global temperature state we are now in; most of it is not even close. We are in a no-analogue state with respect to mechanistic, global-scale understanding of the inter-relationship of the carbon cycle and temperature, at least for the last two or three million years. And no-analogue states are generally not a real comfortable place to be, either scientifically or societally.

Still, based on these low estimates of gamma, the authors suggest that surprises over the next century may be unlikely. The estimates are supported by the fact that more than half of the C4-based (model) results were quite close (within a couple of ppm) to the median values obtained from the empirical analysis, although the authors clearly state that the shorter time periods that the models were originally run over make apples to apples comparisons with the empirical results tenuous. Still, this result may be evidence that the carbon cycle components of these models have, individually or collectively, captured the essential physics and biology needed to make them useful for predictions into the multi-decadal future. Also, some pre-1800, temperature independent CO2 fluxes could have contributed to the observed CO2 variation in the ice cores, which would tend to exaggerate the empirically-estimated values. The authors did attempt to control for the effects of land use change, but noted that modeled land use estimates going back 1000 years are inherently uncertain. Choosing the time lag that maximizes the T to CO2 correlation could also bias the estimates high.

On the other hand, arguments could also be made that the estimates are low. Figure 2 shows that the authors also performed their empirical analyses within two sub-intervals (1050-1550, and 1550-1800). Not only did the mean and variance differ significantly between the two (mean/s.d. of 4.3/3.5 versus 16.1/12.5 respectively), but the R squared values of the many regressions were generally much higher in the late period than in the early (their Figure S6). Given that the proxy sample size for all temperature reconstructions generally drops fairly drastically over the past millennium, especially before their 1550 dividing line, it seems at least reasonably plausible that the estimates from the later interval are more realistic. The long tail–the possibility of much higher values of gamma–also comes mainly from the later time interval, so values of gamma from say 20 to 60 ppm/ºC (e.g. Cox and Jones, 2008) certainly cannot be excluded.

But this wrangling over likely values may well be somewhat moot, given the real world situation. Even if the mean estimates as high as say 20 ppm/ºC are more realistic, this feedback rate still does not compare to the rate of increase in CO2 resulting from fossil fuel burning, which at recent rates would exceed that amount in between one and two decades.
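To put rough numbers on that comparison, here is a back-of-envelope sketch. The atmospheric CO2 growth rates (1.5 to 2 ppm per year) and the amounts of warming (1 to 1.5 ºC) are illustrative assumptions, not figures taken from the paper.

```python
def years_to_match_feedback(gamma_ppm_per_degc, warming_degc, growth_ppm_per_year):
    """Years of direct CO2 growth needed to equal the feedback CO2
    released by a given amount of warming."""
    return gamma_ppm_per_degc * warming_degc / growth_ppm_per_year


# Assumed inputs: gamma of 20 ppm/degC, growth of 1.5 to 2 ppm/yr.
for warming in (1.0, 1.5):
    for growth in (1.5, 2.0):
        years = years_to_match_feedback(20.0, warming, growth)
        print(warming, growth, round(years, 1))
```

Under these assumed inputs the answer stays in the range of roughly one to two decades.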

I found some other results of this study interesting. One such involved the analysis of time lags. The authors found that in 98.5% of their regressions, CO2 lagged temperature. There will undoubtedly be those who interpret this as evidence that CO2 cannot be a driver of temperature, a common misinterpretation of the ice core record. Rather, these results from the past millennium support the usual interpretation of the ice core record over the later Pleistocene, in which CO2 acts as a feedback to temperature changes initiated by orbital forcings (see e.g. the recent paper by Ganopolski and Roche (2009)).

The study also points up the need, once again, to further constrain the carbon cycle budget. The fact that a pre-1800 time period had to be used to try to detect a signal indicates that this type of analysis is not likely to be sensitive enough to figure out how, or even if, gamma is changing in the future. The only way around that problem is via tighter constraints on the various pools and fluxes of the carbon cycle, especially those related to the terrestrial component. There is much work to be done there.

References

Charney, J.G., et al. Carbon Dioxide and Climate: A Scientific Assessment. National Academy of Sciences, Washington, DC (1979).

Cox, P. & Jones, C. Climate change – illuminating the modern dance of climate and CO2. Science 321, 1642-1644 (2008).

Frank, D. C. et al. Ensemble reconstruction constraints on the global carbon cycle sensitivity to climate. Nature 463, 527-530 (2010).

Friedlingstein, P. et al. Climate-carbon cycle feedback analysis: results from the C4MIP model intercomparison. J. Clim. 19, 3337-3353 (2006).

Ganopolski, A, and D. M. Roche, On the nature of lead-lag relationships during glacial-interglacial climate transitions. Quaternary Science Reviews, 28, 3361-3378 (2009).

Lee, T., Zwiers, F. & Tsao, M. Evaluation of proxy-based millennial reconstruction methods. Clim. Dyn. 31, 263-281 (2008).

Lunt, D.J., A.M. Haywood, G.A. Schmidt, U. Salzmann, P.J. Valdes, and H.J. Dowsett. Earth system sensitivity inferred from Pliocene modeling and data. Nature Geosci., 3, 60-64 (2010).

Pagani, M., Z. Liu, J. LaRiviere, and A.C. Ravelo. High Earth-system climate sensitivity determined from Pliocene carbon dioxide concentrations. Nature Geosci., 3, 27-30 (2010).

LDC: what are you talking to me about that for?

The reason you don’t understand my answers is because you have a thing in your head that requires you to tell me stuff that isn’t news. My answers are based on my best guess as to why you’re asking me or, where I cannot discern that, how you’re lecturing the wrong person.

I mean, if you want to continue, just remember this: dilligaf?

Re: 338. Simon, by Clausius-Clapeyron effect, I just mean that when temperature increases, the equilibrium vapor pressure increases according to that relation, i.e. roughly 6%/degree. Think of it as an equilibrium between the water and vapor phases, which depends on temperature.
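For anyone who wants to check the per-degree figure, here is a small sketch. It uses a Magnus-type approximation for saturation vapor pressure over liquid water; the formula and its commonly quoted coefficients are an assumption brought in for illustration, not something from this thread or the paper.

```python
import math


def saturation_vapor_pressure_hpa(temp_c):
    """Magnus-type approximation (assumed coefficients) for saturation
    vapor pressure over liquid water, in hPa, with temperature in degC."""
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def fractional_increase_per_degree(temp_c):
    """Fractional rise in saturation vapor pressure for a 1 degC warming."""
    warmer = saturation_vapor_pressure_hpa(temp_c + 1.0)
    return warmer / saturation_vapor_pressure_hpa(temp_c) - 1.0


for t in (0.0, 15.0, 30.0):
    print(t, round(100.0 * fractional_increase_per_degree(t), 1), "% per degC")
```

The percentage is not constant: in this approximation it is somewhat larger in cold air and smaller in warm air, so the per-degree figure is best read as a typical value near surface temperatures.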

#352 Jim D Thanks for the clarification Jim. While I’m here could I ask you to comment on CFU’s (#278) mocking question “Did you know that RH is the amount of vapour held in water compared to the maximum amount of water that such vapour could hold?”. It seems a far cry from the usual definition, (loosely) “the amount of water vapour in a given volume of air expressed as a percentage of that required to saturate it”, but maybe his formulation amounts to the same thing though I can’t see it. Please put me straight. I’ll apologize to him if I’m wrong of course. Thanks, simon.

Mr Bouldin.

What I originally said, and talked about again is very simple.

-If we believe in paleontologic reconstructions of the past climate (global temperature anomaly), and

-If these reconstructions extend, in continuity right up to the instrumental period

then,

(A) we shouldn’t have problems accepting values for gamma, derived from the same period.

(B) Even if we say similar values for gamma cannot be accepted as being in play in the present (given all the perturbation), we should at least be ready to accept that, in all likelihood, similar broad rules for gamma apply for the present period.

[Response: No, that is an open question. You can’t conclude that from this study.–Jim]

These rules being:

1) gamma is lower in periods of warming

2) gamma is higher in periods of cooling

3) probability of very high gamma values is diminishingly low, esp in warming periods (from fig 3)

[Response: No again. This study does not show what you state. There was cooling throughout the entire period (1050 to 1800). The causes of the difference in gamma between the two sub periods is unknown. Gamma did not show a dependence on the direction of T change, but rather on the rate of CO2 change (pg 529).–Jim]

I am not confusing proxy calibrations with graphic co-representation of temp and tree-ring proxies. But I believe there is no need to talk about that at all.

When we say that current temperatures are unusually high compared to the previous millennium – we are comparing the recent period to the pre-industrial period. We don’t say:

“we cannot compare temperatures from those two periods because the global conditions were completely different during the two periods”, do we? Would Realclimate hesitate to condemn such imaginative ‘denialism’, if someone were to actually use that logic?

[Response: Not analogous! We have T estimates over both periods. We don’t have gamma estimates, and furthermore, the uncertainty discussion is in regards to likely future feedbacks.–Jim]

Anand, I wonder how your reasoning would look during an interglacial when the ice melted and exposed a large area of former permafrost and peat bogs that were now free to emit their CH4 and CO2 into the atmosphere.

Re: 353. Simon, it seems misphrased. RH is the amount of vapor held in air compared to the maximum amount of vapor that such air could hold.

“So what happens to the rest of the column until it becomes cool enough to change state?” It cools by expansion at the dry adiabatic lapse rate. If it cools enough to become denser than the surrounding air, it stops rising (barring momentum effects, which can be significant in large thunderstorms, but let’s not complicate the discussion).

“What happens to the lapse rate of the total column?” If it’s dry (no condensation), it cools at the dry lapse rate; if it’s wet (contains droplets or ice), it cools at the wet lapse rate. If the column is heterogeneous – parcels with cloud and parcels without – the different parts cool with expansion at different rates; the wet parcels cool more slowly, so they become less dense compared to the adjacent dry parcels over time. The buoyancy difference drives the moist parcels to rise faster than the dry parts. The continuing condensation tends to increase the number/mass of the cloud particles until it starts to rain out, unless mixing & transport to adjacent dry parcels remove enough water vapor to prevent this (happens a lot in real atmospheres). The difference in speed of rise creates turbulence & mixing.

“If globally the air above the Sea Surfaces were to be warmer are you suggesting that the atmospheric AH would not increase? ” As the air becomes warmer, the AH will increase, but not without limit. Even though the supply is huge (the oceans), once you reach 100% RH, any further evaporation turns to clouds then rain. Conceptually, the maximum upper limit under any circumstances is when the troposphere is filled with clouds everywhere. Practically, the earth already has ~60% cloud cover, i.e. at some altitude the RH is 100% and the air is drier above and below the cloud layer. Warming is putting more water vapor into the atmosphere (from the bottom). Convection, tropospheric mixing, lateral transport, and macroscopic air mass movement into different temperature regimes move this moisture into an area where condensation starts. Once cloud particles form, the situation becomes unstable, driving the system towards rain. The particles are denser than air, so they settle gravitationally. If they are really small, the rate will be really slow, but never zero. Surface energy increases as surface curvature increases, so small particles lose mass to larger ones, and larger particles fall faster. When particles collide, they coalesce; larger falling particles traverse a larger volume per unit time, so they collide with more particles than smaller slower moving particles. Precipitation starts at higher cooler levels, so particles falling through moist warmer air increase mass by condensation. All these positive feedbacks result in an average residence time of ~10 days.

“For instance going from 40 Deg. C at 1033mb at 90% humidity with a dew point of what around 37 Deg. C what would the temperature be of the same parcel at say 550mb and again at 250mb.” Let’s say 5km & 10km instead (roughly 540 & 264 mb) so we can do the math in our head. The dry lapse rate is ~10degC/km, so the parcel would cool to 37 deg & start condensation at about 330m altitude, where it would transition to a wet lapse rate of ~5degC/km, reaching 13degC at 5km and -12C at 10 km. If there’s enough volume of 40degC 90%RH air near the ground to sustain inflow and development of a cloud column that extends from ground level to 10 km, with an area sufficient to sustain Coriolis rotation, you will likely get a supercell thunderstorm. See http://ww2010.atmos.uiuc.edu/(Gh)/guides/mtr/svr/type/spr/home.rxml (although the site is about weather, it is a good overview of many of the processes related to the hydrologic part of climatology.)
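The head-math above can be written out as a short sketch. The lapse rates and the condensation height are the rounded values quoted in the comment; the function name and its defaults are hypothetical, and a real moist lapse rate varies with temperature and pressure rather than staying fixed.

```python
def parcel_temperature_c(height_km, surface_temp_c=40.0, condensation_km=0.33,
                         dry_rate=10.0, wet_rate=5.0):
    """Temperature of a rising parcel: dry lapse rate below the condensation
    level, wet lapse rate above it (rates in degC per km)."""
    if height_km <= condensation_km:
        return surface_temp_c - dry_rate * height_km
    temp_at_condensation = surface_temp_c - dry_rate * condensation_km
    return temp_at_condensation - wet_rate * (height_km - condensation_km)


for z in (0.33, 5.0, 10.0):
    print(z, "km:", round(parcel_temperature_c(z), 1), "degC")
```

With these inputs the parcel comes out near 13 degC at 5 km and near -12 degC at 10 km, matching the rounded figures in the comment.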

How do we know that AH increases with temperature? Because gamma alone doesn’t provide enough positive feedback to convert the small change in insolation from Milankovitch cycles into the temperature change recorded in the ice cores over the glacial cycles, and observations show increasing AH with temperature (Wentz et al referenced previously).

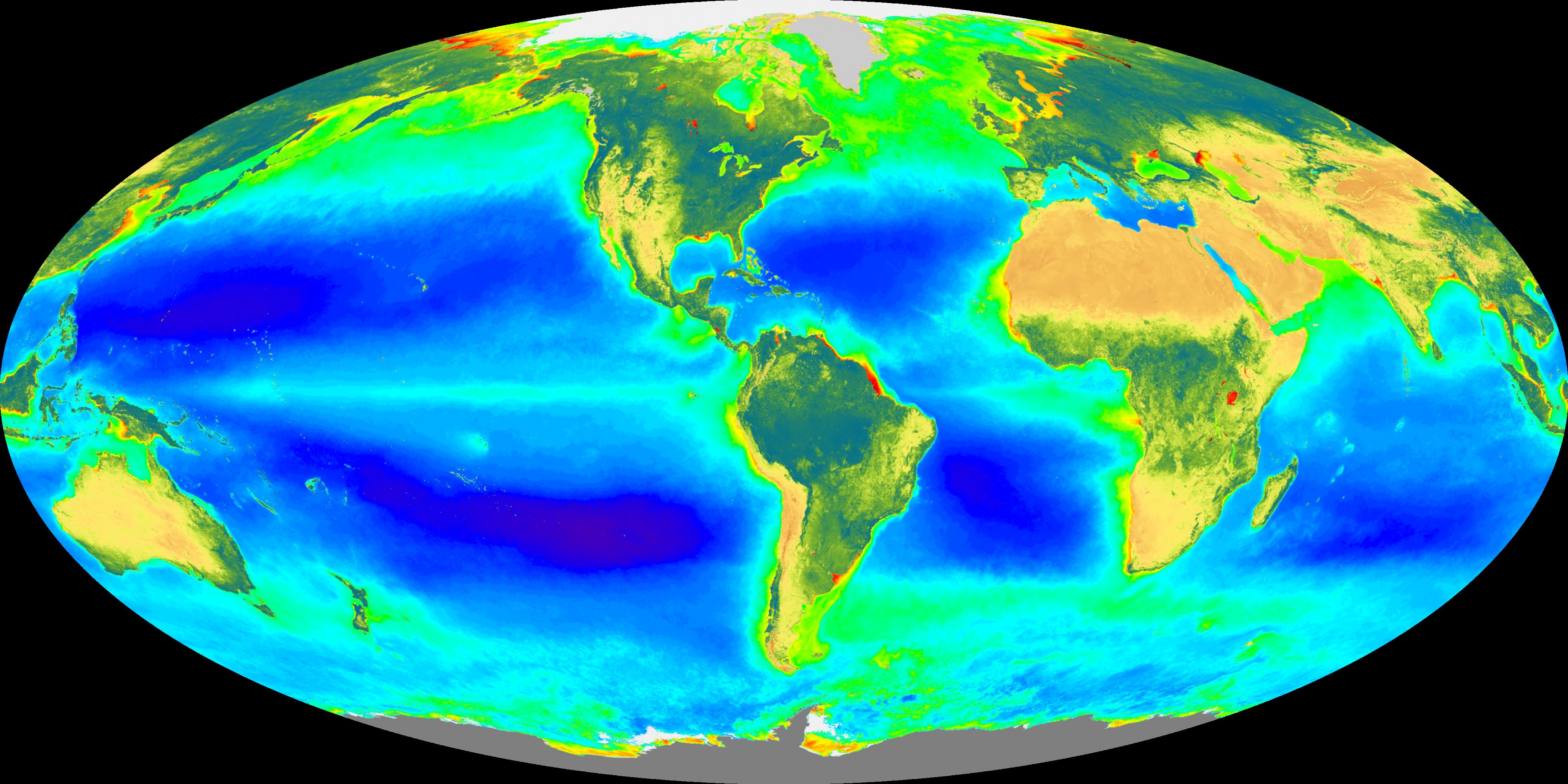

How do we know that the AH greenhouse increase with temperature doesn’t cause a runaway situation (Simon’s original question 13 February 2010 @ 5:58 AM)? Because it didn’t at the PETM, because the water vapor stays in the troposphere, and even there is concentrated in the lower levels (lapse rate – a wool blanket under a down comforter doesn’t have as great a temperature gradient or warming effect), and precipitation provides an effective mechanism to remove water from the atmosphere. If you compare the rainfall pattern from http://www.ipcc.ch/publications_and_data/ar4/wg1/en/figure-8-5.html to the SST image at http://nasascience.nasa.gov/images/oceans-images/amsre-sst.jpg/ you can see that the water vapor/rainfall doesn’t get transported very far northeast from the coasts of Japan & the US from the warm surface pools of water south of Japan and in the Caribbean, where the water is evaporating.

What are the areas where the accuracy of models needs to improve? “Precipitation patterns are intimately linked to atmospheric humidity, evaporation, condensation and transport processes. Good observational estimates of the global pattern of evaporation are not available[1], and condensation and vertical transport of water vapour can often be dominated by sub-grid scale convective processes which are difficult to evaluate globally.”http://www.ipcc.ch/publications_and_data/ar4/wg1/en/ch8s8-3-1-2.html

[1]but the situation is improving –

http://www-calipso.larc.nasa.gov/ “CALIPSO and CloudSat are highly complementary and together provide new, never-before-seen 3-D perspectives of how clouds and aerosols form, evolve, and affect weather and climate. CALIPSO and CloudSat fly in formation with three other satellites in the A-train constellation to enable an even greater understanding of our climate system from the broad array of sensors on these other spacecraft.”.

http://cloudsat.atmos.colostate.edu/overview “Changes in climate that are caused by clouds may in turn give rise to changes in clouds due to climate: a cloud-climate feedback. These feedbacks may be positive (reinforcing the changes) or negative (tending to reduce the net change), depending on the processes involved. These considerations lead scientists to believe that the main uncertainties in climate model simulations are due to the difficulties in adequately representing clouds and their radiative properties.”.

http://aqua.nasa.gov/about/instrument_amsr_science.php “Measurements by AMSR-E of ocean surface roughness can be converted into near- surface wind speeds. These winds are an important determinant of how much water is evaporated from the surface of the ocean. Thus, the winds help to maintain the water vapor content of the atmosphere while precipitation continually removes it.”

OK, I’ve read the main post and all the comments and I still can’t see that this article does much for us. As I understand it, the data was based on the last thousand years (or two?), and that period shows a carbon feedback rate (or gamma?) of 10ppm for every degree of temperature rise.

But we are now seeing (and are about to see) temperature changes that are much larger and faster than anything seen in the last few millennia (at least). Is there any reason to believe that temperature jumps at these new levels and rates are going to have the same effect as the smaller and slower movement of the relatively recent past? And as others pointed out, the global system was a much different, much more resilient one during most of this time–no ocean acidification, no massive die off of forests world wide…

Furthermore, carbon feedbacks are already starting to kick in that have not been seen during this time frame, as far as I understand–

vast melting of permafrost

methane hydrates bubbling to the surface from beneath the ocean (see the many papers by Igor Semiletov and others)

an Arctic Ocean on the verge of being ice free in summer (or nearly so)

These types and levels of feedback, as far as we know, did not happen to this extent in the past thousand years. It seems to me that we are hitting huge discontinuities that make these linear findings from the past centuries essentially worthless as predictive models.

You can’t predict based on observing water heated from 1 to 99 degrees what will happen when you heat it one degree further, yet that is what this paper seems to do. And we do seem to be at that kind of a change-of-state moment, or very, very near it.

Am I missing something here? (I assume I am since much more informed posters than I seem to find this work of great worth.) Is the value that it gives us some kind of baseline to work from?

wili (358) asks “Is the value that it gives us some kind of baseline to work from?” I opine so from my amateur standing. I should think that laboratory experiments could establish values of gamma for various temperatures and near equilibrium concentrations. Alas, that leaves out the role of all the plants, in the oceans, on the land and in the soil; that changes the reality.

I now think Tamino had a good point in his comment #1; the transition from LGM to Holocene, as he points out, gives a much larger value of gamma, but under non-equilibrium conditions. So there is plenty more to puzzle out yet.

RE: 357

Hey Brian,

Okay, now that we have general agreement on, or an understanding of, the pre-GW observational atmospheric physics, I think we may need to do a reset as we seem to be talking past each other. In short, I think we have the necessary model update observations you refer to.

To my question regarding, in a warming world where there is more water vapor in the atmosphere, are you suggesting that the global AH is not increasing? In response to the question you say, “yes; but, not without limit”. Not a problem; however, you did not venture to suggest a limit, I did. My limit rose to a level of 55km meaning that over 80% of the atmospheric density is below the height of PSCs. At this level the AH may have the potential to be nearly 1/3rd greater, what would you suggest this would do to the RH and rainfall potential?

Going further, the point I was trying to make was that the processes you are talking about appear to have changed. Where you are attempting to describe the mechanics of normal rainfall which would occur in the 2-6km range, you are missing that the mechanics have been observed to have changed over the last four years. It appears that we have liquid water vapor being observed at altitudes as high as 10-12km. (That was the reason I asked the question about the estimated temperature of the 40 Deg. C air parcel at altitude. Your values clearly should suggest condensation and frozen precipitate phase change.)

If your wet lapse rate at 10km would suggest a temperature of -12 Deg. C at 10km you would expect the water there to be frozen. (Even though your wet lapse rate might differ from mine (6.3 Deg. C/km)) And yet we have direct measurements of liquid water at this altitude. The point remains that the processes you are describing seem to have broken down in the warming atmosphere, as observed by researchers at Washington State University and USC between 2005-2007. Another confirming observation was offered by the British Arctic Survey in the Canadian Arctic region in 2007.

If this is true then why would you expect more rain if even at 10km and -12 Deg C we have liquid precipitate rather than frozen? (To me it appears you may have missed that most precipitation is actually related to the accretion of frozen ice particles and not condensate. The surface tension of the liquid water seems to affect the tendency for colliding water droplets to repel or bounce off and not become absorbed.)

I understand your point of view, I am only suggesting recent research suggests that the processes appear to have changed. We have seen clear cases in which high altitude aerosols density has prematurely dried out air parcels stopping convection and rainfall. (Droughts observed in the US Eastern seaboard as related high altitude desert dust.) We also have issues of insolation on dark aerosols which seem to delay the transition from the wet lapse rate to the dry lapse rate by nearly 4km. The good folks at UCAR and NCAR have done a lot of research which seems to suggest that there are clearly confounding processes that are changing the normal atmospheric processes you described.

All of these observations appear to suggest that where an increase in rain is possible, it is not happening. The truth is that the AH will have increased which would suggest that the RH potential of the atmosphere should have increased. If this is true then there would be a clear delay in the rain at the normal “limits”.

So where would all of this additional water come from? That is the actual root of my reason for pursuing this subject. It comes from soil moisture, and sea surfaces, as a reflection of a change in gamma, which was the initial point of this discussion. Gamma appears to change in correlation to changes in the Earth’s processes. If radiation is no longer sufficient to reduce the surface temperature and to keep the inter-zonal heat content (specific heat) flow stable, Earth’s processes appear to change. However, it is not so much that they change, as the balance of heat flow through the conduits or atmospheric processes change.

The next question is do the conduits get bigger or does the flow rate increase. Everything I have seen suggests that just like bubbles in a pot about to boil, the conduits increase in size and a large pulse of heat flows to the upper latitudes. The question then is does gamma actually increase or is gamma stable and the Earth’s processes change to re-balance gamma? Then to go full circle is the deviation in the Northern Jet Stream a signal or a forcing of the change in the Earth’s processes?

Cheers!

Dave Cooke

RE: 360

Hey All,

My error; it looks like my memory is failing. Apparently the Washington State University research I referred to should have been in relation to the University of Washington and research stemming from a series of 1998 expeditions ( http://www.arm.gov/campaigns/nsa1998firesheba ). There should be references at the bottom of the page. What I do not see are references to the follow up where they were experimenting with data collection devices.

If I find the reference I will post it. In the meantime my apologies to the University of Washington team. I will also go back and check the USC reference now…

Cheers!

Dave Cooke

RE: 361

Hey All,

This is a link to the follow up of FireIII-ACE super cooled liquid water observation: http://lidar.ssec.wisc.edu/papers/journal/bulletin_mpace.pdf Apparently the new device appears to be called SPLAT, and regards aerosols. From what I understand SPLAT testing was not part of the 2004 M-PACE expedition.

Cheers!

Dave Cooke

RE #67. Thanks, Jim, for your response: “Fertilization is a generally positive effect, as it is a negative carbon cycle feedback.”

This is an early study, and just as the earlier CO2->T studies didn’t include much about long term positive feedbacks, the T->CO2 study may not include all factors. I’m thinking that T at higher values (which it looks like we’re headed toward) may at some point actually end up releasing more CO2 than absorbing thru increased plantlife (nevermind releasing frozen methane).

I’m thinking of how T desiccates soil and plants, holds up more WV in the atmosphere, then releases it as deluges (killing plants), and also T may be causing stronger winds (I know this is less certain), which with dried out vegetation would translate into wildfires. So then we’d get into a +T -> +CO2 -> +T, and so on. There might be some threshold T at which the system flips into a positive feedback syndrome.

[Response: If gamma is positive, this means that feedback is positive. The statement above that CO2 fert. is a negative cc feedback still holds even if gamma is positive–it’s just that other things (like, e.g. what you mention), make the net feedback positive.–Jim]

And I’m still not certain the CO2-plant connection is linear. I’m thinking that perhaps really high CO2 might be bad for plants (sort of like overeating is bad for us) or at least in some log-type relation of decreasing benefit, not linear, esp in a really hot climate.

[Response: You have to get to CO2 levels far beyond what’s likely to occur to get toxic effects.–Jim]

Mr. Bouldin

1) gamma is lower in periods of warming

2) gamma is higher in periods of cooling

3) probability of very high gamma values is diminishingly low, esp in warming periods (from fig 3)

Your response was: “No again. This study does not show what you state. There was cooling throughout the entire period (1050 to 1800).”

Going by the AR4. Chap 6, the medieval warm period was from 950 AD to 1300 AD. There is much hand-wringing about this, but no matter, for we are only interested in the time period for the MWP, not the temperature anomaly of that period. Likewise, the Little Ice Age extends from 1350 to 1770 (source: Chap 6 again). It can be considered 1550-1800, by other accounts.

The paper divides the past millennium into two periods:

1050-1549 &

1550-1800

The time periods for the MWP and LIA, as we can clearly see, roughly correspond to the periods considered in the paper.

[Response: So what?! Your point here was that gamma is lower when temperatures are warming, than when they are cooling. There remains no basis to conclude this from this paper, regardless of these irrelevant statements about time intervals.]

And moreover, I am not the one using the MWP/LIA terminology – I am aware that the IPCC tries to look down upon these terms (or tries to look away). The authors use these terms in the paper.

[Response: Then why did you just introduce those terms into this discussion (???) when they are not related to your point about gamma values in relation to the direction of T change? Very weak.]

Vincentnathan: I don’t believe it is a good idea to answer a study, which uses verifiable data with speculation.

“may at some point actually end up releasing more CO2”

“There might be some threshold T at which the system flips into a positive feedback syndrome.”

For I can simply answer your “may be” and “might be” with a may not be and a might not be. If you do insist that actual studies exist that predict the emergence of conditions you describe, I could answer saying such conditions might have transpired, to varying degrees, in the warmer periods of the past too. If you then insist that there is no geologic/climatologic evidence for such conditions in the past, I could say even then that the positive feedback syndrome you speak of continues to be in the realm of speculation.

Mr wili

“It seems to me that we are hitting huge discontinuities that make these linear finding from the past centuries essentially worthless as predictive models.”

My question again is: if you can infer temperatures from tree rings of the ‘resilient’ period centuries past (remember – it is an inference), you should be able to learn other lessons from models which use the same tree-ring temperatures. If they suddenly become worthless to you, given all the huge discontinuities, then you would surely agree that those who make claims of an unprecedented temperature rise shouldn’t do so either. After all, the vastly different conditions that prevailed past the current discontinuity, form the very basis for the precedence that we speak of.

[Response: I already answered this. You are making an improper analogy, and from this are drawing improper conclusions.

It seems that you want to believe that this paper rules out the possibility of high feedbacks and unpleasant surprises in the future. It doesn’t. Both the authors and I have been quite clear about that.–Jim]

Regards

Anand

Mr Bouldin

“Your point here was that gamma is lower when temperatures are warming, than when they are cooling. There remains no basis to conclude this from this paper, regardless of these irrelevant statements about time intervals.”

How do you say this? I really don’t know what I am missing here.

[Response: Look at Figure 1. The various reconstructions show that there was gradual cooling over almost the entire interval of their study–forget about MWP and LIA etc. The higher gammas in the 1550-1800 interval, compared to the lower ones in the earlier period, do NOT correspond with some difference in the direction of T change (which is what you claimed they did). Rather, the higher gammas in the later period are most strongly associated with the magnitude of the CO2 change, if anything. The authors state this in the paper, on page 529, 1st P. (Plus there are sample size differences–that could have an effect too). You therefore cannot conclude what you concluded. It might have been interesting if the authors had presented results for the magnitude of gamma in relation to warming or cooling temps, but they did not present such, nor even mention it.–Jim]

I am referring to Figure 3, also reproduced in your post above.

And let me quote:

“Values for gamma for the early period (1050-1549) indicate a lower mean (4.3 ppmv per C)…”

“than estimates for 1550-1800 (mean = 16.1)…”

These periods roughly correspond to the MWP and the LIA of the AR4.

Therefore:

1050-1549 – 4.3 – MWP

1550-1800 – 16.1 – LIA

I was hesitant to use the terms ‘MWP’ and ‘LIA’ only because I did not want the issue to get sidetracked into a discussion of whether the MWP or the LIA ever existed at all.

[Response: Yes, I understand and appreciate that.–Jim]

Regards

Anand,

I’m not sure I follow your point. The correlation between rising temperatures and feedback release of additional carbon into the atmosphere is a particular kind of dynamic. That we are likely to see (further) discontinuities in this particular relationship does not seem to me to automatically imply anything particular about other correlations. As Jim notes, you seem to be reaching for an analogy here that isn’t quite apt.

Since google did not cough up anything readily, I would like to ask a stupid question:

What exactly is included in “Charney feedback(s)”?

I get the impression that it (or these) are fast feedbacks, but I’m not sure what exactly they include. I downloaded the 1979 paper, but I’m not sure which of the many topics covered in that interesting piece is now included in “Charney feedback(s).”

Thanks ahead of time for any light you can throw on this or source you can point me to.

Wili: I used Google Scholar for the term; it’s defined here:

Charney J. (1979). Carbon dioxide and climate: a scientific assessment. Washington, DC: National Academy of Sciences Press.

I found it described here: see this article for much more detail:

http://rsta.royalsocietypublishing.org/content/365/1856/1925.full

“… the Charney (1979) definition of climate sensitivity, in which ‘fast feedback’ processes are allowed to operate, but long-lived atmospheric gases, ice sheet area, land area and vegetation cover are fixed forcings. Fast feedbacks include changes of water vapour, clouds, climate-driven aerosols, sea ice and snow cover.”

——-

Aside, I don’t recall seeing this, which may be good news:

http://www.physorg.com/news128613620.html

(about a paper published in Science April 18, 2008)

“… the majority of ocean calcification is carried out by coccolithophores such as Emiliania huxleyi, and the amount of calcium carbonate produced at the ocean surface is known to have a direct influence on levels of atmospheric carbon dioxide.”

The coccolithophore she referred to, E. huxleyi, is like the “lab rat” of coccolithophores, Rehm says. Laboratory experiments where one increased the acidity caused all kinds of problems for E. huxleyi. But in this new work, Iglesias-Rodriguez’s group didn’t just add acid, they bubbled CO2 at increasing levels into the culture. The coccolithophores increased their calcification in response.

Assessing the amount of calcification done by hundreds of thousands of coccolithophores grown in the experiments was the job of the UW researchers using flow through cytometry and techniques refined by Peter von Dassow while a post-doctoral researcher in Armbrust’s group. He’s now at France’s Station Biologique de Roscoff and also a co-author on the paper.

A second group of researchers on the paper, led by Paul Halloran, doctoral student at the University of Oxford, took cores from sediments in the North Atlantic and found that two dominant coccolithophores species had increased in size in the 220 years since the Industrial Revolution, when humans began turning more and more to fossilized fuels for energy

“Our research has also revealed that, over the past 220 years, coccolithophores have increased the mass of calcium carbonate they each produce by around 40 percent,” Halloran says….”

Something newer on coccolithophores and climate change from two of the same authors, and less optimistic:

http://biogeosciences-discuss.net/6/9817/2009/bgd-6-9817-2009.pdf

“Conclusions

Ocean acidification may induce changes in the composition and quality of biogenic particles that impact the formation, as well as the sinking and degradation rate of aggregates. As a consequence, the efficiency of deep export may be reduced in the future ocean, and the shallow remineralisation of organic matter enhanced. Thus, our study provides an example of how the biological pump may transmit the signal of anthropogenic perturbations from the surface into the ocean’s interior.”

wili:

“As Jim notes, you seem to be reaching for an analogy here that isn’t quite apt.”

I am not making an analogy, not quite so, irrespective of what you might claim. I am saying there is a logical fallacy in your position (vis-a-vis the discontinuities) when considered against other positions adopted by proponents of anthropogenic warming, which it contradicts.

Not enough to make Bertrand Russell turn in his grave, but maybe just enough to make his toes curl up. :)

I always choose my words carefully. I do understand your point, but I am not sure of the same from you.

Thanks

Anand

RE: 357

Hey Brian,

Not to beat a dead horse so much as to show independently the cloud processes I failed to identify regarding water vapor condensation and rain drop formation.

According to this article it appears for water to form into a droplet requires what appears to be an RH of up to 120%, (apparently due to surface tension). So earlier posts by others suggesting that at an RH of 100%, water vapor condenses, then coalesces forming larger droplets and voilà, rain…, may need to be revised in light of this article.

http://weather.cod.edu/sirvatka/bergeron.html

I hope this helps laymen like myself here to gain a little more understanding of the micro-physics occurring in clouds regarding raindrop formation. Hope you have a wonderful day!

Cheers!

Dave Cooke

@24 [who argued that Global Warming isn’t dead because we will see new signs of it in the next few years]:

I just read a remark by Pier Vellinga, professor of Earth sciences and climate change: “Much will depend on the melting of the ice caps. That’s a strange realization: the trust people have in us now depends on the short-term aberrations in local climate, while the essence of our message is about risks for the global climate in a 30-100 year time frame. If there is ice on the IJsselmeer now, we are blamed.”

[from Resource, the weekly for students and staff of Wageningen UR, the Netherlands, translation mine]

“According to this article it appears for water to form into a droplet requires what appears to be an RH of up to 120%, (apparently due to surface tension)”

It can go somewhat above that RH if the air is devoid of nucleation sites.

Similarly, water doesn’t freeze definitely until about -30C.

http://en.wikipedia.org/wiki/Supercooling

Again, if there aren’t any nucleation sites or regular sites to form on.

So in light of this, does your fridge need recalibrating?

Feedback from carbon release does seem to still be a concern for these folks:

Atmospheric levels of methane, the greenhouse gas which is much more powerful than carbon dioxide, have risen significantly for the last three years running, scientists will disclose today – leading to fears that a major global-warming “feedback” is beginning to kick in.

For some time there has been concern that the vast amounts of methane, or “natural gas”, locked up in the frozen tundra of the Arctic could be released as the permafrost is melted by global warming. This would give a huge further impetus to climate change, an effect sometimes referred to as “the methane time bomb”.

http://www.independent.co.uk/environment/climate-change/methane-levels-may-see-runaway-rise-scientists-warn-1906484.html

[Response: Reasonable story except for the headline and the ‘runaway feedback’ paragraph which implies that this happened at the end of the last ice age. Positive feedback does happen and is likely in the future (though of uncertain magnitude) but that isn’t the same as ‘runaway effects’. – gavin]

Thanks for the distinction. Help me with my maths. What value of gamma moves a feedback over from non-runaway into runaway territory?

[Response: Given the various uncertainties it seems to me doubtful that such a value could be identified very specifically.–Jim]

RE: 373

Hey CFU,

That is for Pure Water, this only goes to show for what is in the ice you may put in your tea… Add in a bit of dirt, bacteria, salt, metals or oxides and you have plenty of nucleation sites…

Cheers!

Dave Cooke

I’ve been taking a more detailed look into Earth System Sensitivity. These recent papers seem very interesting:

1. In Pagani et al “for the early and middle Pliocene, when temperatures were about 3–4 C warmer than preindustrial values… CO2 levels at peak temperatures were between about 365 and 415ppm”. So if CO2 roughly the same as today led to 3-4 degrees of warming in the early Pliocene, that would mean an ESS of about 6-8 degrees for CO2 doubling. However, we must be careful, because CO2 is not the only relevant forcing.

2. Lunt et al estimate Earth System Sensitivity about 30–50% greater than Charney sensitivity, because they also analyse orography in the mid-Pliocene as a forcing separate from CO2 or any of its feedbacks. This summary seems to have the main points. At mid-Pliocene ~3 million years ago, CO2 is modelled at 400ppm. Overall temperature is about 3.4 degrees above pre-industrial, with ESS due to the 400ppm CO2 contributing about 2.3 degrees.

3. However Tripati et al argue that CO2 hasn’t been as high as today since the Miocene. Their reconstructed peak level for the mid-Pliocene is only 350ppm: see the graph at the bottom right of the last page here. Even allowing for the additional orography forcings, and some forcings by other GHGs, ESS for doubled CO2 would be in the ~6 C range again.
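
The back-of-envelope arithmetic behind the first two estimates can be sketched as follows. This is only an illustration under stated assumptions: a pre-industrial baseline of 280 ppm, warming that scales with the logarithm of CO2, and all of the stated warming attributed to CO2. The function name and the 390 ppm value used for "roughly the same as today" are assumptions made for the example, not figures from the papers.

```python
import math

PREINDUSTRIAL_PPM = 280.0  # assumed baseline, not taken from the papers


def implied_sensitivity(warming_c, co2_ppm, baseline_ppm=PREINDUSTRIAL_PPM):
    """Warming per CO2 doubling implied by a given warming at a given CO2 level.

    Assumes warming scales with log2(CO2 / baseline) and that CO2 is the
    only forcing, which the comment above already flags as too simple.
    """
    doublings = math.log2(co2_ppm / baseline_ppm)
    return warming_c / doublings


if __name__ == "__main__":
    # Item 1: 3-4 C of warming at roughly present-day CO2 (390 ppm assumed).
    for warming in (3.0, 4.0):
        value = implied_sensitivity(warming, 390.0)
        print(f"{warming} C at 390 ppm -> {value:.1f} C per doubling")
    # Item 2: about 2.3 C attributed to 400 ppm CO2.
    value = implied_sensitivity(2.3, 400.0)
    print(f"2.3 C at 400 ppm -> {value:.1f} C per doubling")
```

Under these assumptions item 1 lands in the 6-8 C range quoted, and item 2 comes out noticeably lower, since part of the Pliocene warming is assigned to orography rather than CO2.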

So it’s bad news, then a bit better news, but then more bad news, returning us to the ballpark of Hansen et al’s original estimates.

On top of that, we need to add carbon cycle feedbacks, as discussed above and calculated here. There is maybe another degree or two from naturally-released CO2, even after we’ve stopped adding our own. Plus there will be other GHG feedbacks (methane, N2O), but it seems those are rather constrained by Paleoclimate data. For instance Hansen et al have methane and N2O moving in synch with CO2 between Ice Age cycles, with CO2+CH4+N2O forcing about 25% bigger than forcing from CO2 alone. A crude approximation would be to combine these GHGs into a “CO2-equivalent”. Then construct a “gamma-equivalent” which also reflects the changes of these other GHGs in response to temperature, and so is about 25% bigger than gamma by itself. For example a gamma of ~16ppm per degree C would turn into a “gamma-equivalent” of ~20ppm per degree C (which is still convergent, fortunately). This is very rough and ready, and the error bars on gamma are big enough to swallow it anyway.
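
One rough way to see what "convergent" means here is a linearised loop-gain calculation. The sketch below is a toy model, not anything from the paper: it assumes warming follows S * log2(C / C0), treats the CO2-equivalent as if it were CO2, and uses an illustrative sensitivity of 3 C per doubling at 390 ppm. All function names and input values are hypothetical.

```python
import math


def gamma_equivalent(gamma_ppm_per_c, other_ghg_fraction=0.25):
    """Scale gamma up to stand in for CH4 and N2O responses as well."""
    return gamma_ppm_per_c * (1.0 + other_ghg_fraction)


def loop_gain(gamma_ppm_per_c, sensitivity_c_per_doubling, co2_ppm):
    """Linearised gain of the T -> CO2 -> T loop around a given CO2 level.

    For T = S * log2(C / C0), dT/dC is S / (C * ln 2); multiplying by
    gamma (dC/dT) gives the fraction of each degree that is fed back.
    """
    warming_per_ppm = sensitivity_c_per_doubling / (co2_ppm * math.log(2.0))
    return gamma_ppm_per_c * warming_per_ppm


def amplification(gain):
    """Sum of the series 1 + g + g^2 + ..., which converges only for |g| < 1."""
    if abs(gain) >= 1.0:
        raise ValueError("|gain| >= 1: the series does not converge")
    return 1.0 / (1.0 - gain)


if __name__ == "__main__":
    gamma_eq = gamma_equivalent(16.0)        # 16 ppm/C scaled up by 25%
    gain = loop_gain(gamma_eq, 3.0, 390.0)   # assumed S = 3 C, C = 390 ppm
    print(f"gamma-equivalent: {gamma_eq:.0f} ppm per degree C")
    print(f"loop gain: {gain:.2f}, amplification: {amplification(gain):.2f}")
```

With these assumed inputs the gain is well below 1, which is the sense in which the feedback converges. The linearisation says nothing about thresholds or nonlinear behaviour, so it cannot pin down a runaway value of gamma.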

But is that it? Unless I’ve missed anything, we may be close to totting up all the feedbacks (i.e. seeing all the bad news at once).

There’s another kicker in Tripati’s paper. If we do manage to melt down the Greenland and West Antarctic ice-sheets, we would have to reduce BELOW pre-industrial CO2 to get them back again. “Lower levels were necessary for the growth of large ice masses on West Antarctica (~250 to 300 ppmv) and Greenland (~220 to 260ppmv)”. Should there be a 250.org ???

“Hey CFU,

That is for Pure Water,”

No, it can handle non pure water as long as the impurities are liquid at that temperature too, and therefore will not act as nucleation sites.

[edit]

[Response: –just stick to the science and drop the insults and everyone will be better off.–Jim]