France has a per capita carbon emission of 1.64 tonnes, compared to 2.67 tonnes for the U.K. and 5.61 tonnes for the U.S. So, if anybody has earned the right to rest on their laurels and pontificate to the rest of the developed world about what they should be doing, you’d think it would be France. Far from it: under the leadership of Nicolas Sarkozy, France has embarked on an ambitious program of deeper carbon reductions. In introducing the measures, Sarkozy said "The guiding principle is that the cost to the climate, the carbon cost, will be integrated into planning of all major public projects, and into all deliberations affecting the public." These measures include a commitment that all new buildings will be net energy producers by 2020, a ban on incandescent lighting by 2010, subsidies for buyers of efficient vehicles, penalties for drivers of inefficient vehicles, and a severe curtailment of road construction in favor of expanded rail travel using state-of-the-art French TGV technology. A carbon tax is also being seriously contemplated. These proposals are the result of an intensive months-long series of discussions with scientists and stakeholders such as environmental nongovernmental organizations, industry representatives, and labor union representatives. The process, known as Le Grenelle de l’Environnement, was described here by Nature (subscription required) and a summary of some of Sarkozy’s proposed actions was reported in the press here.

All the same, there has been some pushback from a vocal pair of highly decorated French academicians, Claude Allègre being the more prominent and noisier of the two. In recent years, Vincent Courtillot has emerged as a reliable sidekick to Allègre (a Dupont to his Dupond), helping to propagate Allègre’s claims and adding a few of his own. Both are members of the Académie des Sciences, and Allègre has been awarded both the Crafoord Prize and the Bowie Medal. Allègre has an impressive list of publications relating to the Earth’s interior, and besides that was Minister for Education, Research and Technology in the Jospin government. Courtillot, currently director of the Institut de Physique du Globe de Paris (IPGP), has had a distinguished record of research on fundamental aspects of geomagnetism and is currently President of the Geomagnetism and Paleomagnetism Section of the American Geophysical Union. Their views were amply (some would say more than amply) represented at a symposium on the IPCC report, held last spring at the Académie (see the issue "Evolution des Climats" of La Lettre de l’Académie des sciences, and press reports in Le Figaro, Le Monde, and Libération). What does all this mean? Are the opinions of Allègre and Courtillot founded on some special profound insight that has escaped the notice of the community of scientists who have devoted entire careers to studying climate? Let’s take a look.

When an active scientist of the distinction of Allègre or Courtillot speaks out, the voice has a special claim on our attention, no matter how implausible the claims may seem. It would be a mistake, however, to accept the proclamations of such luminaries on the basis of authority; one must examine the arguments on their merits. Allègre does not publish his arguments on climate in the peer-reviewed scientific literature, so we have to turn to his popular writings and public statements to get a glimpse of what these arguments are. A treasure trove of Allegrisms (Allegrories? Allegrations?) can be conveniently found in a little opus humbly entitled Ma vérité sur la planète (Plon/Fayard: Paris 2007). Many of the things said here merely parrot standard discredited skeptics’ arguments without adding anything new. For example, Allègre at several junctures repeats the old fallacy of confusing the unpredictability of weather with the problem of determining how climate responds to changes in radiative forcing: "I have difficulty believing that one could predict with precision the temperatures that will occur a century from now, when we can’t even predict what they will be one week from now." (p.89) He also repeats the fallacy that the lead-lag relation between CO2 and temperature in Antarctic ice cores proves that temperature causes CO2 variations rather than vice versa, a tired and thoroughly discredited argument (look here for a summary of the rebuttals). There is little more to say about such arguments, save that Allègre’s willingness to repeat them shows either a remarkable gullibility or a disturbing lack of scientific integrity.

Elsewhere, though, Allègre breaks new ground with regard to passing off nonsense as scientific argument. Here are a few examples.

- Allègre claims that the disappearance of the glaciers on Kilimanjaro is due to moisture supply changes arising from tectonic uplift, and has nothing to do with global warming. This claim appeared over a year ago in Allègre’s column in L’Express, and was discussed in an earlier RealClimate post on Allègre. Essentially, Allègre failed to understand that the tectonic events referred to in the Science paper he cited affected the African climate millions of years ago, whereas the present Kilimanjaro glacier didn’t even come into existence until around 10,000 years ago. The erroneous claim about Kilimanjaro is repeated in Ma vérité sur la planète (p.120), despite ample time and opportunity to correct the mistake. So much for vérité ("his" or otherwise).

- Allègre says that "common sense" casts doubt on the idea that CO2 could have such a controlling effect on climate, because its concentration is only 300 parts per million (p.104). This "common sense" flies in the face of over a century of meticulous physics going back to the time of Tyndall, which shows precisely why certain trace gases have such a strong influence on infrared absorption by the Earth’s atmosphere.

- Allègre says we "know nothing" (p.109) about the Dansgaard-Oeschger events and other forms of millennial variability appearing in the ice-core record. From this statement, I think you are supposed to infer that since we "know nothing" about the events it could well be that the current warming is just the latest warm phase of such a thing. To be sure, there’s a great deal still to be learned about millennial variability, but the phenomenon has been the subject of several hundred peer-reviewed scientific papers, dozens of conferences, and a major study by the US National Academy of Sciences. We know enough about the pattern of such events and the mechanisms involved to essentially rule out the possibility that the recent warming is a related form of natural variability. We also know enough to worry about the possibility that climate change due to greenhouse gas increases could trigger massive ocean circulation shifts of the sort that were involved in past millennial variability.

- Allègre says that under an increase of CO2 there would be no warming at the equator, whereas the predicted warming at the equator is in fact only somewhat below the global mean warming. He states correctly that the warming is strongest at the poles, but states without support that a 10ºC warming would be no big deal (p.122). This is quite a startling statement, given that a much more moderate warming has already caused substantial loss of Arctic sea ice. Part of his misconception may come from the fact that he thinks that the temperatures at "the poles" range from "-30 to -60ºC." (p.122) If that were really true, there would be no open water in the Arctic at the time of the sea ice minimum. It is easily verified that this is not the case, and indeed the Arctic commonly gets up to 0ºC in the summer, and sometimes more.

- Ignoring the numerous independent studies of the instrumental record of the past century, he says the Phil Jones analysis of this data has been "put seriously in doubt" (p.100). And by what means? A comparison of Jones’ global mean data with an unpublished analysis of the average of a limited number of hand-picked European stations — presented as the epitome of the Geophysicists’ incomparable expertise at time-series analysis! More on this when we come to discuss Courtillot.

- Helpfully, Allègre advises modellers that "It is necessary to avoid basing predictions of future climate on a global mean whose status is vague." (p.106) Evidently he is unaware that general circulation models have been simulating geographical variations of future wind and temperature changes at least since the 1970s, and that maps of such changes have been included in every IPCC report going back to the very first. Oh, but I forget. Elsewhere Allègre states that "nobody reads" the IPCC reports (p.115). Evidently, this statement applies to at least one person.

- Continuing his display of ignorance of the modelling enterprise, Allègre wonders why modellers put CO2 in their models, and concludes that it is only because they happen to know how it has varied over the centuries. Could a century of meticulous laboratory and field work documenting the radiative effect of CO2 perhaps have something to do with modellers’ preoccupation with this gas? Evidently not in Allègre’s universe. But there’s more: "Because one doesn’t well understand how clouds form, one neglects them! Because one has not mastered the role of aerosols and dust, one neglects them!" (p.104) This is not at all true. Clouds, aerosols and dust (as well as solar irradiance variations and volcanic eruptions) are all included in modern models. Models that leave out the influence of the CO2 rise fail to reproduce the warming of the past 30 years, and it is precisely for this reason that CO2 is confirmed as the prime culprit in global warming.

- Allègre makes a number of false or misleading statements concerning the contents of the IPCC Fourth Assessment Report. He claims that this report (contrary to French media coverage) "considerably toned down" its conclusions compared to previous reports (p.119). By way of evidence, Allègre states "For a doubling of CO2 emissions the temperature of the globe will rise between 2 and 4.5ºC in a century. The previous report said between 1.5 and 6ºC." (p.119) First of all, the IPCC statements on climate sensitivity refer to a doubling of CO2 concentration, not CO2 emissions, but let’s give Allègre the benefit of the doubt and assume this is just another instance of sloppy writing rather than true misunderstanding. Even so, Allègre is mixing up his apples with his pommes de terre in this statement. Though the climate sensitivity range narrowed from 1.5-4.5ºC previously to 2-4.5ºC now, reducing the likelihood of low climate sensitivity, the range of predictions for 2100 remains largely unchanged (and the ranges are moreover not strictly comparable between the reports, given changes in the way "likelihood" is estimated). In a similar vein, Allègre claims that the IPCC reduced its forecast of sea level rise, which is not the case.

- And there’s more. He says that the IPCC has "modified, though not completely abandoned, its argument concerning 20th century temperature rise." (p.119) This evidently refers to Allègre’s belief that one of the IPCC’s main arguments has been that CO2 must be responsible for temperature rise because (ben voilà!) they both go up! It’s hard for the IPCC to abandon an argument it never made, and in any event the Fourth Assessment Report probably devotes more space to discussing the twentieth century temperature record, using more techniques, than any earlier report. And yes it does (pan to shot of Galileo here, speaking through clenched teeth as he bows before the Inquisition) still go up (and by essentially the same amount as previously estimated). Continuing the theme of abandonment, Allègre says that the IPCC has "abandoned" its argument regarding the implications of ice-core CO2 and temperature variations. Actually, not. There is no change in the way the IPCC interpreted the Vostok isotope and CO2 curve, which appears in both the 2001 and 2007 reports (the latter with EPICA extensions into earlier times). The discussion appears in Chapter 6 of the Fourth Assessment Report (p. 444, Fig. 6.3), but how could Allègre be expected to know that? Nobody reads the IPCC reports, right?

Misconceptions and misrepresentations of the sort given above are liberally supplemented with the usual arsenal of innuendo and quote-mining. Because Christopher Landsea (extravagantly compared to Galileo!) chose to make a scene by resigning from the IPCC, the whole process is deemed to not allow dissent — conveniently ignoring that Lindzen happily stayed on the IPCC Third Assessment Report. An entirely reasonable and uncontroversial statement by Dennis Hartmann on modelling uncertainties is twisted to imply that modellers think we can’t simulate anything with sufficient precision to draw conclusions about future warming (p.105). Quotes on the possible necessity of adaptation measures from MIT’s Ron Prinn and Columbia’s Wally Broecker are used to imply that these two notables favor adaptation over CO2 emissions reduction (p.126). And on the subject of adaptation vs. mitigation, some of Allègre’s statements are downright bizarre: He says that we have nothing to fear from global warming. After all, we adapted to the ozone hole, didn’t we? We adapted to acid rain, didn’t we? (p.127) Well, no actually, we did nothing of the sort. We "adapted" to the ozone hole by passing the Montreal Protocol to control CFC emissions. We "adapted" to acid rain by passing pollution control measures which reduced sulfate emissions. If this is "adaptation," I guess I can just say: ‘d’accord!’ Let’s just "adapt" to global warming by reducing CO2 emissions!

What is to be said of such claims? I couldn’t put it better than Allègre himself: "… une imposture intellectuelle, une escroquerie!" (p.107)

Whatever agenda Allègre is pursuing in his public pronouncements on global warming, it would seem to be very little informed by his scientific expertise. Through his litany of errors, misconceptions and misrepresentations, he has abdicated any claim to be taken seriously as a scientist when he speaks about climate change. And lest Lomborg and similar eco-Pollyannas draw too much comfort from Allègre’s support, let us note that, at the end of the day, Allègre still calls for a 20% reduction in CO2 emissions over the next 20 years. Many of us who wouldn’t touch Allègre’s arguments with a 10-foot baguette would be quite happy if such a plan were enacted in the United States, at least as a first step towards ultimate deeper reductions.

So much for Allègre. Now what of M. Courtillot? Fortunately, we need not go into nearly so much detail, since almost all of the arguments presented in the Académie debate (see his article in La Lettre de l’Académie des sciences) mirror those given in Allègre’s book. Still, the man manages to add a few wrinkles of his own. For example, he confidently declares that the glacial-interglacial CO2 variations are "simply" explained by the effects of temperature on CO2 solubility. He is evidently unaware that this simple mechanism was in fact quite simply evaluated years ago by Wally Broecker (like Allègre, a Crafoord Prize winner) and found to be woefully insufficient (see Martin, Archer and Lea, Paleoceanography 2005, for a recent treatment of the subject).

Remember the graph of European temperature in Ma vérité which was supposed to put Phil Jones’ analysis of the instrumental record "seriously in doubt"? Well, it reappears in Courtillot’s article, amply decorated with a lot of new verbiage: climate scientists spend all their time modelling and hardly any looking at data; geophysicists are uniquely qualified to look at time series because they do it all the time and anyway they invented most of this stuff in the first place; nobody ever cross-checks or verifies Phil Jones’ work. And patati, and patata, none of which holds a glimmer of truth. But, having declared all this, the brave geophysicists of the IPGP decide to take a look for themselves by averaging together a few tens of European weather stations (with a few distant ones from the Urals thrown in for good measure) and ben voilà, how Courtillot is "astonished" that the curve doesn’t look at all like what they were taught it should look like! (Courtillot is evidently a man easily astonished, and equally easily surprised, since these words appear with stunning regularity in his article.)

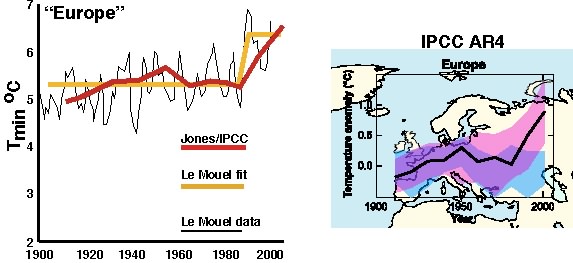

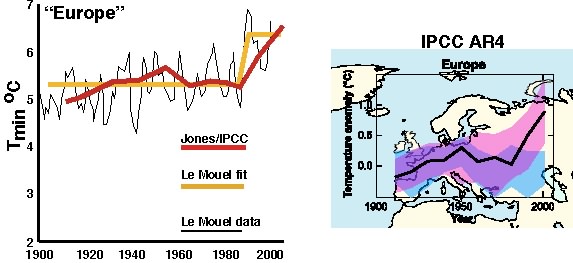

The analysis which evidently shocked Courtillot like a coup de foudre was presented at the Académie debate by Le Mouël (himself an Academician, and holder of the Fleming Medal). A video of his talk is here. Dear reader, I urge you to take a look at this video for yourself and see if you can make any more sense of it than I could, amidst all the mislabeled graphs, bizarre choices of what to compare to what, and missing information about crucial aspects of the data handling. I have done my best to convey what I think is the essence of the argument Le Mouël is trying to make, but it isn’t easy. In the left panel I reproduce the only graph in which Le Mouël attempts a direct comparison between his data and the Phil Jones analysis which appeared in the IPCC report; it was redrawn by tracing over a freeze-frame of Le Mouël’s presentation. The graph is labeled "European Average" in the presentation, but the data (thin black line) which Le Mouël compares to Jones’ European analysis (red line) are actually from Denmark. Moreover, Le Mouël’s data seem to be monthly (or maybe daily) minima. Why one would want to compare Danish temperature minima with all-Europe temperature means is beyond me, but in the end what Le Mouël is making a big noise about is his claim that the yellow curve fit describes the data better than Phil Jones’ curve. Given the variability, there is really no objective reason to prefer one over the other, but the distinction between the two fits is largely immaterial. What you can take home from Le Mouël’s analysis is that, in Europe, a marked temperature rise does not set in until the 1980s. Sound familiar? It should, because that is more or less what the IPCC says, pointing out further that natural variability cannot explain the recent warming. This can be seen well in the right panel, taken from the Fourth Assessment Report. The blue shaded region is the ensemble of simulations forced by natural variability alone, while the pink shaded region includes anthropogenic forcing. Only the latter reproduces the rise at the end of the record.

The point of a lot of this fiddling with temperature curves is that the Sun must be doing something to control all these fluctuations. That brings us to radiative forcing, and Courtillot and company have had some problems with this issue, since they have a hard time making it look like CO2 is a small forcing and solar variability is a big forcing. One botched attempt at this was to claim that cloud fluctuations swamp CO2; Courtillot claims that clouds cause 80 Watts per square meter of radiative forcing, so that a mere 3% change in cloud cover would cause 2.4 Watts per square meter radiative forcing, which would be comparable to greenhouse gas forcing changes to date. To get this figure, though, Courtillot evidently assumed that all the Earth’s albedo is due to clouds, and moreover neglected the cloud greenhouse effect. When properly calculated, the net cloud radiative forcing is more like 20 Watts per square meter, so a 3% change gives you only 0.6 Watts per square meter, well below the greenhouse gas radiative forcing to date, to say nothing of what is in store for the future.
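The arithmetic behind these two figures is easy to check. The following is only a minimal sketch: the function name is hypothetical, the 80 and 20 Watts per square meter values and the 3% change are the numbers quoted above, and the linear scaling of forcing with cloud cover is a simplifying assumption made purely for illustration.

```python
def forcing_change(cloud_forcing_w_m2: float, fractional_change: float) -> float:
    """Change in radiative forcing (W/m^2) for a given fractional change in
    cloud cover, ASSUMING forcing scales linearly with cloud cover."""
    return cloud_forcing_w_m2 * fractional_change


# Courtillot's figure: all of the Earth's albedo attributed to clouds,
# cloud greenhouse effect neglected (80 W/m^2, as quoted in the text).
courtillot_estimate = forcing_change(80.0, 0.03)

# Net cloud radiative forcing when properly calculated (about 20 W/m^2,
# as quoted in the text).
net_estimate = forcing_change(20.0, 0.03)

print(f"3% of 80 W/m^2: {courtillot_estimate:.1f} W/m^2")
print(f"3% of 20 W/m^2: {net_estimate:.1f} W/m^2")
```

The factor of four between the two results comes entirely from the starting estimate of cloud forcing, not from the size of the assumed change in cloud cover.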

This flub is nothing compared to the trouble Courtillot’s collaborator Le Mouël got into during the debates, when he was trying to show that the 1 Watt per square meter variation in the Solar irradiance over the solar cycle is fully half the greenhouse gas forcing. Well, there is the little matter that Le Mouël forgot to take into account the sphericity of the Earth (which means divide the solar irradiance by 4) or its reflectivity (which means take 70% of the result). As the Le Monde reporter archly noted, Le Mouël’s calculation assumes a black flat Earth, but, "Hélas! La Terre est ronde" (zut alors!). Le Mouël seems eager to follow in Allègre’s geometrically-challenged footsteps: In a 1988 book (12 clés pour la géologie, Belin:Paris), Allègre confidently stated that the pole to equator temperature gradient was due to snow albedo and atmospheric absorption, making no mention of the role of the Earth’s spherical geometry, which is far and away the dominant factor (and the reason there’s ice at the poles to make a high albedo). Messieurs, here’s a little hint: What does the "G" stand for in "IPGP?"
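The round-Earth correction described above can be sketched in a few lines. This is an illustration under stated assumptions, not Le Mouël's or anyone else's actual calculation: the function name is hypothetical, the factor of 4 is the ratio of a sphere's surface area to the area of the disc that intercepts sunlight, and the 70% absorbed fraction is the figure quoted in the text.

```python
import math


def global_mean_solar_forcing(delta_irradiance_w_m2: float,
                              absorbed_fraction: float = 0.70) -> float:
    """Convert a change in solar irradiance (W/m^2, measured facing the Sun)
    into a globally averaged absorbed forcing (W/m^2).

    Sunlight is intercepted by a disc of area pi*r^2 but spread over a
    sphere of area 4*pi*r^2, hence the division by 4. Only the
    non-reflected fraction (70% in the text) is absorbed.
    """
    radius = 1.0  # arbitrary: the ratio does not depend on the radius
    disc_area = math.pi * radius ** 2
    sphere_area = 4.0 * math.pi * radius ** 2
    geometric_factor = disc_area / sphere_area  # = 1/4
    return delta_irradiance_w_m2 * geometric_factor * absorbed_fraction


flat_black_earth = 1.0  # W/m^2, the uncorrected solar-cycle variation
round_reflective_earth = global_mean_solar_forcing(flat_black_earth)

print(f"Uncorrected: {flat_black_earth:.3f} W/m^2")
print(f"Corrected:   {round_reflective_earth:.3f} W/m^2")
```

With both corrections applied, the 1 Watt per square meter solar-cycle variation shrinks to well under a fifth of a Watt per square meter.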

The round Earth having robbed him of his 1 Watt per square meter (which in any event is mostly averaged out over the relatively short solar cycle, leaving a minuscule tenth of a Watt variation between cycles), Courtillot grasps at the possibility of some unknown and unquantified nonlinear mechanism for turning the very high frequency solar variability into a century-scale trend.

There is also a bit of nattering about Moberg’s take on the Hockey stick, the supposed considerable warmth of the Medieval Warm Period, and some supposed millennial solar variability which supposedly accounts for why the present warming sort of looks like Moberg’s take on the Medieval Warm. Even leaving aside evidence that Moberg’s method exaggerates variability (see Mann, Rutherford, Wahl and Ammann 2005, available here), the "blame the Sun" mantra falls apart because neither the Sun nor cosmic rays have been exhibiting any trend that could conceivably account for the recent warming, as we have discussed in many places on RealClimate (most recently here).

With regard to climate, Courtillot’s main claim to fame is not found in his article in La Lettre. For that we have to look to a paper recently published in EPSL, which claims that climate variations are closely tied to the geomagnetic field. How convincing is this work? That will be the subject of Part II.

Quand un scientifique actif aussi distingué qu’Allègre ou Courtillot s’exprime, sa parole capte notre attention, quelle que soit sa pertinence. Ce serait néanmoins une erreur d’accepter les affirmations de tels phares uniquement à cause de leur renommée; on doit contrôler les arguments sur leurs mérites. Allègre ne publie pas ses idées sur le climat dans la littérature scientifique, de sorte que nous devons nous contenter de ses écrits de vulgarisation et déclarations publiques afin d’avoir un aperçu de ces arguments. Un trésor de ces allègreries (allègritudes ?, allègrations ?) est facilement accessible dans un petit ouvrage humblement intitulé Ma vérité sur la planète (Plon/Fayard, Paris, 2007). Beaucoup de choses que l’on y trouve ne sont que rabâchage d’arguments standard de sceptiques, arguments complétement discrédités auxquels il n’apporte rien de neuf. Par exemple : il répète à plusieurs endroits l’erreur classique de confondre le caractère imprévisible de la météorologie avec la détermination de la réponse du climat au forçage radiatif : « J’ai peine à croire qu’on puisse prédire avec précision le temps qu’il fera dans un siècle alors qu’on ne peut pas prévoir celui qu’il fera dans une semaine » (p.89). Il répète également le raisonnement faux que les relations de phase entre CO2 et température mesurés dans les carottes de glace de l’Antarctique prouvent que c’est la température qui est responsable des variations de CO2 plutôt que l’inverse – un raisonnement éculé et largement discrédité (lire ici un résumé des contre-arguments). Il y a peu de choses à ajouter sur ces arguments, sauf que la capacité d’Allègre à les répéter indique soit une remaquable crédulité, soit un inquiétant manque d’intégrité scientifique.

Ailleurs, pourtant, Allègre excelle dans l’art de servir des balivernes comme arguments scientifiques. En voici quelques exemples.

- Allègre affirme que la disparition des glaciers du Kilimandjaro est due à un changement des apports de vapeur d’eau causé par le soulèvement tectonique, et n’a rien à voir avec le réchauffement global. Cette affirmation est apparue il y a un peu plus d’un an dans la chronique d’Allègre dans l’Express, et a été discutée dans un article précédent de RealClimate au sujet d’Allègre. Pour l’essentiel, Allègre passe à côté du fait que les événements tectoniques dontil est question dans l’article cité de Science ont modifié le climat africain il y a plusieurs millions d’années, alors même que le glacier actuel du kilimandjaro est apparu il y a seulement 10 000 ans. Cette affirmation erronée au sujet du Kilimandjaro est répétée dans Ma vérité sur la planète (p.120), bien qu’il ait eu suffisamment de temps et d’opportunités pour corriger cette erreur. Autant pour la ‘vérité‘ (la sienne ou une autre).

- Allègre soutient que le ‘bon sens’ permet de mettre en doute l’idée que le CO2 puisse autant contrôler le climat parce que sa concentration est seulement de 300 parties par million (p.104). Ce ‘bon sens’ bouscule plus d’un siècle de physique méticuleuse qui remonte à l’époque de Tyndall et qui montre précisément pourquoi certains gaz à l’état de trace influencent si fortement l’absorption des infra-rouges par l’atmosphère.

- Allègre dit qu’on ne « sait rien » (p.109) sur les événements de Dansgaard-Oeschger et autre type de variabilité millénaire existant dans les carottes de glace. De cette affirmation, je suppose que nous sommes censés déduire que, comme on ne « sait rien » sur ces événements, il se pourrait bien que le réchauffement actuel soit juste la dernière phase de l’un d’eux. Bien sûr, il y a encore beaucoup de choses à apprendre sur la variabilité millénaire, mais ce phénomène a fait l’objet de plusieurs centaines de publications scientifiques, douzaines de conférences, ainsi qu’une synthèse majeure par l’Académie des Sciences américaine. Nous en savons assez sur la structure de ces événements et leurs mécanismes pour tout écarter la possibilité que le réchauffement récent provienne simplement de ce type de variabilité naturelle. Nous en savons également assez pour nous inquiéter de la possibilité que le changement climatique dû à l’augmentation des gaz à effet de serre puisse déclencher un de ces changements majeurs de la circulation océanique qui ont participé à la variabilité millénaire dans le passé.

- Allègre annonce qu’avec une augmentation du CO2 il ne devrait pas y avoir de réchauffement à l’équateur, alors que le réchauffement prédit à l’équateur n’est que légèrement inférieur à la moyenne globale. Il affirme correctement que le réchauffement est plus fort aux pôles, mais aussi, et sans justification, qu’un réchauffement de 10ºC serait sans importance (p.122). C’est une affirmation plutôt surprenante puisqu’un réchauffement bien plus faible est déjà responsable d’une disparition notable de la glace de mer en Arctique. Cette conception erronée pourrait provenir en partie du fait qu’il pense que la température « aux pôles » varie entre « -30 et -60ºC » (p.122). Si c’était vrai, il n’y aurait pas d’eau libre en Arctique pendant le minimum de couverture de glace de mer. Il est facile de vérifier que ce n’est pas le cas, et en fait l’Arctique monte souvent jusqu’à 0ºC, et parfois au-delà.

- Ignorant les nombreuses études indépendantes des mesures sur le dernier siècle, il soutient que l’analyse de Phil Jones de ces données a été « fortement mise en doute » (p.100). Et par quel moyen ? Par une comparaison entre les données globales de Phil Jones et une analyse non publiée des moyennes d’un petit nombre de stations européennes – présentée comme l’archétype de l’expertise incomparable des Géophysiciens en analyse de séries temporelles ! Nous y reviendrons à propos de Courtillot.

- Très obligeamment, Allègre conseille aux modélisateurs : « Il faut donc éviter de fonder les prédictions du climat futur sur une moyenne mondiale dont la situation est floue. » (p.106). Visiblement il n’est pas au courant que, depuis au moins les années 70, les modèles de circulation générale simulent des champs spatialisés des prévisions de vent et de température, et que des cartes de ces changements ont été incluses dans tous les rapports du GIEC depuis le premier. Oh, mais j’oubliais. Ailleurs, Allègre assène que « personne ne lit » (p.115) les rapports du GIEC. Visiblement, cette déclaration s’applique au moins à une personne.

- Continuant d’étaler son ignorance de la modélisation, Allègre se demande pourquoi les modélisateurs ont inclus le CO2 dans leurs modèles, et en conclut que c’est uniquement parce qu’ils connaissent ses variations sur les derniers siècles. Est-ce-qu’un siècle de travail méticuleux en laboratoire et sur le terrain passé à documenter l’effet radiatif du CO2 aurait peut-être à voir avec l’attrait des modélisateurs pour ce gaz ? Visiblement pas dans l’univers d’Allègre. Mais il y a mieux : « Comme on ne sait pas bien comment se forment les nuages, on les néglige ! Comme on maîtrise mal le rôle des aérosols et des poussières, on les néglige ! » (p.104) C’est complètement faux. Nuages, aérosols et poussières (comme variations de l’irradiance solaire et éruptions volcaniques) sont tous pris en compte par les modèles actuels. Les modèles qui négligent l’influence de l’augmentation du CO2 n’arrivent pas à reproduire le réchauffement des derniers trente ans, et c’est précisément pour cette raison que le CO2 a été confirmé comme le responsable principal du réchauffement global.

- Allègre makes a number of false or misleading statements about the contents of the IPCC Fourth Assessment Report. He asserts, contrary to the French media, that this report “is much more moderate than the previous ones.” (p.119) Thus, Allègre asserts that “For a doubling of CO2 emissions, global temperature would rise by 2 to 4.5ºC in a century. The previous report said between 1.5 and 6ºC.” (p.119) First, the IPCC’s statements on climate sensitivity refer to a doubling of CO2 concentration, not of emissions, but let us give Allègre the benefit of the doubt and suppose this is just another typo rather than a genuine misunderstanding. All the same, Allègre is mixing apples and French fries here. Although the range of climate sensitivity has been narrowed, from 1.5 to 4.5ºC down to 2 to 4.5ºC, thereby reducing the likelihood of a low sensitivity, the range of predictions for the year 2100 has hardly changed (moreover, the probabilistic definition of that range differs between the reports, so the two are not directly comparable). In the same vein, Allègre asserts that the IPCC has lowered its predictions of sea level rise, which is not the case (see here).

- But it gets better. He says that the IPCC “is toning down, without yet abandoning, the argument from the evolution of temperature since the 19th century.” (p.119) This evidently reflects Allègre’s belief that one of the IPCC’s main arguments is that CO2 must be responsible for the temperature increase because (voilà!) both are going up! It is hard for the IPCC to abandon an argument it never made, and in any case the Fourth Assessment Report probably devotes more space to discussing the 20th-century temperature record, using more techniques, than any previous report. And yet (one thinks here of Galileo, muttering through clenched teeth while bowing before the Inquisition) it is rising (and pretty much in line with predictions). Continuing on the theme of the IPCC’s supposed backing down, Allègre asserts that it has “abandoned” its argument based on the CO2 and temperature variations recorded in ice cores. Nothing of the sort. There has been no change in the IPCC’s interpretation of the Vostok isotope and CO2 curves, an interpretation that appears in both the 2001 and 2007 reports (with, in the latter, the EPICA extension to earlier periods). This discussion is in Chapter 6 of the Fourth Assessment Report (p.444, Figure 6.3), but how could one expect Allègre to know that, since nobody reads the IPCC report, right?

Misconceptions and misrepresentations such as those set out above are liberally accompanied by the usual arsenal of innuendo and quote-mining. Because Christopher Landsea (extravagantly compared to Galileo!) chose to make a scene of his resignation from the IPCC, the process as a whole is judged hostile to all dissent, conveniently ignoring that Lindzen, for his part, quietly stayed on throughout the IPCC Third Assessment Report. A statement by Dennis Hartmann on modeling uncertainties, entirely justified and uncontroversial, is twisted to insinuate that modelers do not believe it possible to achieve enough accuracy to draw conclusions about future warming (p.105). Quotes about the possible need for adaptation measures, from Ron Prinn of MIT and Wally Broecker of Columbia, are used to insinuate that these two luminaries favor adaptation over reducing CO2 emissions (p.126). And on the subject of adaptation versus mitigation, some of Allègre’s claims are downright preposterous: he maintains that we have nothing to fear from global warming. After all, we adapted to the ozone hole, didn’t we? We adapted to acid rain, didn’t we? (p.127) Well, no, actually, we did nothing of the kind. We ‘adapted’ to the ozone hole by adopting the Montreal Protocol to control CFC emissions. We ‘adapted’ to acid rain by adopting controls on sulfur emissions. If that is ‘adapting’, I think I can only say: “Fine! Let’s ‘adapt’ to global warming by reducing CO2 emissions!”

What can one say about all these claims? I could not put it better than Allègre himself: “…an intellectual fraud, a swindle!” (p.107)

Whatever Allègre’s agenda in his public pronouncements, they seem to have little grounding in his scientific expertise. With his litany of errors, misconceptions and misrepresentations, he has forfeited any claim to be taken seriously as a scientist when he speaks about climate change. And even though Lomborg and other eco-Pollyannas get far too much support from Allègre, let us note that, in the end, Allègre nonetheless calls for a 20% reduction in CO2 emissions over the next twenty years. Many of us who would not touch Allègre’s arguments even with a 3-meter pole would be very happy if such a plan were implemented in the United States, at least as a first step toward deeper reductions.

So much for Allègre. Now what about Mr. Courtillot? Fortunately we need not go into so much detail, since practically all the arguments presented at the Académie debate (see his article in La Lettre de l’Académie des sciences) mirror those in Allègre’s book. Nevertheless, our man manages to add a few touches of his own. For example, he confidently states that the glacial-interglacial variations of CO2 are “simply” due to the effect of temperature on CO2 solubility. He is thus unaware that this basic mechanism was evaluated many years ago by Wally Broecker (like Allègre, a Crafoord Prize winner) and found to be grossly insufficient (see Martin, Archer and Lea, Paleoceanography 2005, for a recent review of the subject).

Remember the graph of European temperature in Ma Vérité, which was supposed to cast “strong doubt” on Phil Jones’s analysis of the temperature record? Well, it reappears with Courtillot, much embellished with new verbiage: climate scientists spend all their time modeling and hardly any observing; geophysicists are the only ones qualified to study time series because they do it all the time, and anyway they invented practically everything in the field first; nobody has ever checked or verified Phil Jones’s work. And so on and so forth, none of it containing an ounce of truth. But, having declared all this, the worthy geophysicists of the IPGP decided to look for themselves by averaging a few dozen European weather stations (with a few far-flung stations in the Urals thrown in for good measure), and voilà, Courtillot is “astonished” that the curve does not look like what they had been taught! (Courtillot is evidently someone who is easily astonished, and just as easily surprised, since these words appear with stupefying regularity in his article.)

This analysis, which struck Courtillot like a veritable ‘coup de foudre’, was presented at the Académie debate by Le Mouël (himself an academician, and a holder of the Fleming Medal). A video of the presentation is here. Dear reader, I urge you to watch this video and see whether you can make more sense of it than I could, amid all the poorly labeled graphs, the odd choices of comparison, and the missing information on crucial aspects of the data processing. I have done my best to present what I think is the essence of Le Mouël’s argument, but it is not easy. On the left of the image below I have reproduced the only graph in which Le Mouël attempts a direct comparison between his data and the Phil Jones analysis that appeared in the IPCC report; this graph was traced from a frame of the video of the presentation. The graph is titled “Moyenne de l’Europe” (“European average”) in the presentation, but the data (black curve) that Le Mouël compares to Phil Jones’s European analysis (red line) are in fact from Denmark. Moreover, Le Mouël’s data appear to be monthly (or perhaps daily) minima. Why anyone would want to compare temperature minima in Denmark with the mean temperature of all of Europe is beyond me, but in the end what Le Mouël makes a great fuss about is the claim that the yellow curve is a better fit to the data than Phil Jones’s curve. Given the variability, there is really no objective reason to prefer one over the other; the distinction between the two fits is completely illusory. The message of Le Mouël’s analysis is that in Europe a marked rise in temperature does not appear until the 1980s. Heard that somewhere before?
You should have, because this is more or less what the IPCC says, and the IPCC further concludes that natural variability cannot explain the recent warming. This is clearly visible in the right-hand graph, taken from the IPCC Fourth Assessment Report. The blue-shaded area corresponds to an ensemble of simulations forced by natural variability, while the pink one also includes anthropogenic forcing. Only the latter reproduces the rise at the end of the record. Far from overturning convention, Le Mouël has in fact shown that a simple average of a limited data set largely confirms Phil Jones’s analysis, which is quite a ‘feat’ when one realizes that over a region as small as Europe, the anthropogenic trend is much harder to distinguish from natural variability due to circulation.

The point of all this fiddling with temperature curves is that the Sun must have something to do with these fluctuations. Which brings us to radiative forcing, where Courtillot and company have had some trouble, because they have found it very hard to pass off CO2 as a minor forcing and solar variability as a major one. One botched attempt in this direction was to claim that cloud variations swamp CO2: Courtillot claims that clouds are responsible for a radiative forcing of 80 watts per square meter, so that a change as small as 3% in cloud cover would produce a radiative forcing of 2.4 watts per square meter, comparable to that of greenhouse gases today. But to get this figure, Courtillot evidently assumed that the Earth’s albedo is entirely due to clouds, and moreover he neglected the greenhouse effect of clouds. Calculated correctly, the net radiative forcing of clouds is more like 20 watts per square meter, so that a 3% variation gives only 0.6 watts per square meter, well below the present radiative forcing of greenhouse gases, to say nothing of what lies ahead.
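The arithmetic behind the two cloud figures is easy to check. The following is a minimal sketch that uses only the numbers quoted above (80 and 20 watts per square meter, and a 3% change in cloud cover); the function name `forcing_change` is made up for illustration, and the calculation is the same simple proportional scaling used in the argument, not a radiative transfer calculation.

```python
def forcing_change(cloud_forcing_wm2: float, fractional_change: float) -> float:
    """Scale a cloud radiative forcing (W/m^2) by a fractional change in cloud cover."""
    return cloud_forcing_wm2 * fractional_change


# Figures quoted in the text; nothing here is independently derived.
albedo_only = forcing_change(80.0, 0.03)  # Courtillot's figure: reflection only
net_effect = forcing_change(20.0, 0.03)   # net figure: reflection minus cloud greenhouse effect

print(f"3% of 80 W/m^2: {albedo_only:.1f} W/m^2")
print(f"3% of 20 W/m^2: {net_effect:.1f} W/m^2")
```

The factor of four between the two results comes entirely from the choice of starting figure, which is why the omission of the cloud greenhouse effect matters so much.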

This gaffe is nothing compared with the difficulty that Le Mouël, who collaborates with Courtillot, ran into during the debate when he tried to show that the 1 watt per square meter variation in solar irradiance over a solar cycle really amounts to half the greenhouse gas forcing. Well, there is a detail that Le Mouël forgets to take into account: the sphericity of the Earth (which means dividing the solar irradiance by 4), or its reflectance (which means taking 70% of the result). As the Le Monde reporter mischievously pointed out, Le Mouël’s calculation assumes a flat, black Earth, but “Hélas! La Terre est ronde” (“Alas! The Earth is round”; zut alors!). Le Mouël thus seems to follow Allègre fervently in his geometric missteps: in a 1988 book (12 clés pour la géologie, Belin/Paris), Allègre confidently states that the temperature gradient between the poles and the equator is due to snow albedo and atmospheric absorption, making no mention of the role of the Earth’s spherical geometry, which is by far the dominant factor (and the reason there is high-albedo ice at the poles). Gentlemen, here is a hint: what does the ‘G’ in ‘IPGP’ stand for?
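The round-Earth correction can be sketched in a few lines. This assumes only the two steps named above (divide the irradiance change by 4, then keep 70% of the result); the function name `global_mean_forcing` and its parameter names are hypothetical, chosen for illustration.

```python
def global_mean_forcing(delta_irradiance_wm2: float,
                        absorbed_fraction: float = 0.7) -> float:
    """Convert a change in solar irradiance into a global-mean absorbed flux change.

    A sphere intercepts sunlight over its cross-section (pi * r**2) but has a
    surface area of 4 * pi * r**2, hence the division by 4. Only the
    non-reflected fraction (70% in the text) is absorbed.
    """
    spread_over_sphere = delta_irradiance_wm2 / 4.0
    return spread_over_sphere * absorbed_fraction


flat_black_earth = 1.0                    # W/m^2, the figure used without correction
round_earth = global_mean_forcing(1.0)    # W/m^2, after both corrections

print(f"flat, black Earth: {flat_black_earth:.3f} W/m^2")
print(f"round, reflective Earth: {round_earth:.3f} W/m^2")
```

With both corrections, the 1 watt per square meter shrinks to a little under a fifth of a watt per square meter, before even considering that it largely averages out over a solar cycle.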

With the roundness of the Earth having robbed him of his 1 watt per square meter (which in any case largely averages out over a solar cycle, leaving only a tenth of a watt per square meter between cycles), Courtillot fell back on the possibility of an unknown and unquantified nonlinear mechanism for turning high-frequency solar variability into a century-scale trend.

There is also some chatter about Moberg’s take on the ‘hockey stick’, the supposedly very warm Medieval Warm Period, and a supposed millennial solar variability that is meant to explain why the recent warming more or less matches Moberg’s account of the Medieval Warm Period. Leaving aside some indications that the method used by Moberg overestimates variability (see Mann, Rutherford, Wahl and Ammann 2005, available here), the “It’s the Sun’s fault” mantra collapses because neither the Sun nor cosmic rays show any trend that could explain the warming of recent decades, as we have discussed several times on RealClimate (most recently here).

When it comes to climate, Courtillot’s claim to fame is not to be found in his article in La Lettre de l’Académie. For that we must turn to a paper recently published in EPSL, which claims that climate change is closely linked to the geomagnetic field. Is this work convincing? That will be the subject of Part II.

Andrew Dodds (42): Both nuclear and hydro require massive amounts of concrete. Cement production produces massive amounts of CO2, estimated to be 5% of the total yearly anthropogenic addition.

Of course, there is also the 7% you are using less of. :-)

Re nuclear, the InterAcademy Council report, Lighting the Way: Toward a Sustainable Energy Future, recommends:

So it would be good if we all push for this report, and help publicize the results.

I know little authoritative about French waste, though we all know that Greenpeace requires more than a bucket of salt. I posted on links to American analysis of Yucca Mountain, but learned afterwards that 260 mrem is a tad less than the yearly exposure due to a one cigarette/day habit (not pack/day).

Also see National Research Council Disposition of High-Level Waste and Spent Nuclear Fuel: The Continuing Societal and Technical Challenges (2001)

Re France, Areva and Mitsubishi have created Atmea to build advanced Gen III reactors, see here and here. A Physics Today article says the French are building a 1600 MW PWR (2012 due date) and that Chirac announced plans for a pebble bed reactor by 2020. Note, any 2006 report on the future of nuclear power is dated. I read elsewhere that AREVA plans to take a stake in PBMR development.

From David Bodansky’s Nuclear Energy, 2nd edition on French nuclear waste plans:

Re #42, if you use less nuclear + hydro to light your bulb, more of that power is available to sell to Germany.

Re #12: [Could it be because we have no idea what to do with the extremely toxic & long-lived waste products?]

You do realize that statement applies equally well, if not more so, to CO2 produced by fossil fuel power plants, don’t you? Extremely toxic (in mass quantities), long-lived, but dumped into the atmosphere regardless.

> Jim Cripwell

Search for +arctic +”multi-year ice”

Here for example:

http://www.arctic.noaa.gov/essay_wadhams.html

“Ice which has survived one or more summer seasons of partial melt is called multi-year ice. In the Arctic, sea ice commonly takes several years to either make a circuit within the closed Beaufort Gyre surface current system (7-10 years) or else be transported across the Arctic Basin and expelled in the East Greenland Current (3-4 years). More than half of the ice in the Arctic is therefore multi-year ice. Growth continues from year to year until the ice thickness reaches a maximum of about 3 metres, at which point summer melt matches winter growth and the thickness oscillates through an annual cycle. This old, multi-year ice is much fresher than first-year ice; it has a lower conductivity and a rougher surface. The low salinity of multi-year ice makes it much stronger than first-year ice and a formidable barrier to icebreakers.”

> Jim Cripwell

Also remember to do your search and select “images”

That will find this, for example:

http://www.socc.ca/seaice/seaice_current_e.cfm

“As of November 1, Environment Canada analysis indicates sea ice over the Northern Hemisphere is recovering rapidly from its spectacular collapse in the summer and, except for the Chukchi Sea and Barents Sea, ice cover is near normal. However, much of the thick multi-year ice in the eastern and even central Arctic Ocean has been lost and is now covered with thin newly formed ice….”

I’ve given examples of how to search, you can work out other search terms, narrow the time covered, and as Coby reminds us there’s no “wisdom” button — you have to read.

Remember also to do the same searches in Google Scholar of course.

Re 32

Look at http://psc.apl.washington.edu/zhang/IDAO/summer2007_arctic_seaice.gif very carefully and you will see that some of the sea ice area recovery in Oct 2007 was the result of thick ice breaking up into thin ice. Thus, even after freeze-up started and the sea ice area was increasing, some sea ice continued to thin. This continued thinning will affect next summer’s melt.

Note too that the Antarctic ice is back to near normal, after having been slightly above normal for the last couple of months.

RE #52 (Karen Street) “we all know that Greenpeace requires more than a bucket of salt.” Evidence for that claim, please?

Timo,

Your link is apparently incorrect. I’ve included the correct one below. However, a quick perusal of the article will reveal that what they are doing is using the lasers as an accelerator–kind of a laser wakefield approach. This is not going to be energetically feasible on a large scale. The article you posted was from 2003. They’ve had 4 years to make this work if it was in fact feasible. The fact that we still have tons of nuclear waste and that no government is seriously pursuing this option argues that it is not in fact energetically feasible. No free lunches.

http://www.iop.org/EJ/article/0022-3727/36/18/L01/d3_18_L01.pdf?request-id=ppCsos2W3BG0ZZEg3Ai7Kg

James, You needn’t stop with coal-fired plants. Semiconductor fabs on the scale needed to make solar power economical will likely produce quite a bit of toxic waste as well. Wind and solar both require energy storage–and most storage technologies–from batteries to reservoirs–have adverse environmental consequences. No matter what option we choose, we will have to mitigate adverse consequences.

Karen, borosilicate glasses are quite hard, durable and inert, but do they stay that way? Radiation damage over time could change the structure considerably, and in some cases the thermal effects are sufficiently great to cause contact metamorphism in the surrounding rock. And all of this does not take into account seismic disturbances, changes in precipitation… It is a daunting problem.

France, Germany, and the U.K. are relatively minor contributors to GHGs, compared to the U.S. and, soon, India and China, as Pete says in comment #30, but they are going in the right direction. They’re also making themselves more independent of the unstable (to put it mildly) oil supplies coming from the Middle East. In addition, by developing alternative energy supplies these countries are contributing to new technologies in these areas to diminish drawbacks and make them more economical. As things stand now, China has more stringent fuel efficiency standards than the U.S.

As far as these European nations are concerned, they’re apparently adhering to the old saw that it’s better to light one candle than to curse the darkness. Much better. Our own policy from on high, here in the U.S., is apparently to let the inmates run the asylum.

> 260 mrem is a tad less than the yearly exposure due to a

> one cigarette/day habit (not pack/day)

False comparison; radioactivity in cigarette smoke is a minor part of the total risk, http://www.lungusa.org/site/pp.asp?c=dvLUK9O0E&b=35422

Adding 260 mrem is adding it to existing background.

But transuranics don’t behave the same as natural elements.

People trivializing the serious, thoughtful effort being made to contain and manage fission plant waste really insult the people who are trying to do that competently and professionally. We can argue over whether it’s smart to cause the problem. But arguing over whether the problem is real is a complete waste of time. It’s real.

It’s hard work being done with great effort. Outcome yet unknown.

To concur with commenter #27:

On Allegre and Co, yes, this will not do much damage in France. Allegre has sold lots of his books. He is a kind of institution here. With a bunch of other “new reactionaries” he is holding a position in the media as “oppressed by the conformist scientists in the line of IPCC”. This does work with less literate press writers and their bosses. But it has little impact on policy and none on climate science.

There are no funny “creationist” factions here but we have “extreme rationalists” such as Allegre and Petit. The French Academy of Science is full of this breed. It recently issued a report by Allegre-like old guys explaining that no cancer comes from the environment (only at the end did it say that most cancers come from unknown causes). The same bunch regularly recall that low radioactive doses have no impact, and they even said once that dioxin was never a problem.

Part of the explanation for these strange reports comes from their defective governance, where consultants to the chemical industry can at the same time chair subgroups that issue this crap. When George W. Bush was rebuffed by the US Academy of Science in his first term, we kept on thinking this could not happen in France where this institution is not very respectable as such (many individual members are nice people and good scientists).

More recently, another “Academicien” of similar lines, Mr Petit (who was involved in the TAR), wrote (‘Quelle bonne méthode contre le réchauffement climatique’, M. Petit, La Tribune, November 5th, 2007) that it had never been scientifically proven that energy efficiency saves carbon emissions, and that he would prefer more nuclear reactors and fewer wind turbines. But his text also showed that he felt betrayed by the part of Sarkozy’s speech defending a 40% decrease in energy bills for French households in 2020, and other radical statements on the side of energy savings. As for real-world policy towards efficiency, one may have a doubt or two as to whether our President will deliver.

As a former chair of CAN-France and a reviewer in FAR WG III, I feel entitled to give some ideas on “how we did it” and “where we are going”.

[Response: I can’t speak to the issue of whether Allegre is in a position to influence policy. I’m quite aware that nobody in climate science in France takes him seriously or is taken in by him. This post wasn’t written for those. It was written for scientists (and I’ve met a few) outside the climate area, who have heard that Allegre has been casting doubt on anthropogenic global warming, and — knowing of his general reputation — think there must be something to it. This post is just to provide a convenient place to point such people to, in order to learn why Allegre and company shouldn’t be taken seriously. There have also been rumors that Allegre was being considered for a cabinet-level position in the Sarkozy government. Maybe these rumors have mainly been spread by Allegre himself; I don’t really know. Nonetheless, even the possibility means that any effort to get out the word on the utter intellectual bankruptcy of Allegre’s stance on climate is worthwhile. Besides that, Allegre is often held out among the US denialist crowd as a prime example of a prominent scientist who changed his mind on global warming. Some people — the sorts who get their information from Fox News or from the Heartland Institute — will not be swayed no matter what, but I think there are a certain number (perhaps a large number) who have been misled by Allegre’s reputation but who are nonetheless amenable to persuasion. Any help the readers can provide in making this post known to such people would be much appreciated. –raypierre]

Re 58

In the waning days of the Fast Flux Test Facility (at Hanford), some of the research engineers in the facility performed some ad hoc experiments whereby high-level waste from plutonium production was “burned”. The result was a material that had no half-lives longer than 300 years. These were pretty smart guys, and they said the concept had real potential for radioactive waste treatment. However, US DOE middle management felt that, at that time, there was no political appetite for new reactors, and they did not even want to spend the money to keep the FFTF open for another year for additional research. No request for funding was made to senior DOE management, thus no request for funding was made to Congress. The FFTF was closed on schedule.

When I left Hanford (1996), the *only* copy of those research reports was in the US DOE-RL reading room.

There are no free lunches, but if you are open to options, you can have a nice healthy lunch for the same price as the greasy burger and fries that will eventually give you a heart attack. I have had lunch with some DOE middle managers. Often, we ended up in restaurants that offered unlimited “free” seconds on greasy fries.

Re #47 Martin:

I’ve got two questions regarding the atmospheric carbon drawdown scheme: “Conclusion: if we would plant 10% (more) of the Earth’s surface with forest, and let it grow for 30 years, it would suck all the carbon out of the atmosphere!”

The first is what percentage of the Earth’s surface is desert, and the second is whether you think it would be possible to reforest the entire Sahara?

Many thanks for all the comments and suggestions. Apparently no-one wants to make a wager on the surface area of arctic sea ice in March and September 2008. Probably because, like me, they are certain that the 2007 extreme ice minimum in the arctic was a one of a kind anomaly, which, like the hurricane season in the North Atlantic in 2005, is unlikely to ever be repeated. It was not a “tipping point”.

Burying vitrified nuclear waste in salt deposits, well below the waterline in Arizona would solve all but the political problem.

Pollyanna. That’s me.

[Response: “Pollyanna-ism,” now there was a case where there was no adequate translation into French. I don’t even know if the novel is at all widely read in French. I toyed with something involving Dr. Pangloss, but the idea of Hayley Mills (who played Pollyanna in the Disney flick) as Pangloss was just beyond bearing. But for the record Pollyanna-ism doesn’t refer to optimism in general. It refers to unreasonable or unrealistic optimism that flies in the face of evidence that optimism isn’t warranted. It amounts to putting an optimistic or favorable light on whatever is coming at you, regardless of what the data actually say. That’s why I think Lomborg (esp. in “Skeptical Environmentalist”) is well described by the term. There are other cases in which optimism is quite justified. –raypierre]

I agree with growing more trees. Rather than trying to transfer carbon credits, why don’t we grow more trees? It’s what the planet needs.

Re#65, I’d be inclined to suggest that the maximum sea ice as shown on Cryosphere today is unlikely to exceed 13 million sq km and the minimum to be around 3 million sq km, perhaps less.

Notable has been the loss of multiyear ice over the last decade. Although the area will go back up to 13 million, most of this will be single-winter ice, unlike, say, 15 years ago; consequently it will disappear rather quickly in the summer, leaving us with the multiyear residue by September. The result is more solar absorption by the ocean and more heat storage.

Check out the following particularly the figure on the Disappearance of multiyear ice.

http://nsidc.org/news/press/2007_seaiceminimum/20070810_index.html

Re #61: [False comparison; radioactivity in cigarette smoke is a minor part of the total risk…]

I missed seeing anything at that link about radioactivity levels in tobacco smoke. I do realize that there are other risks from tobacco smoke that greatly outweigh the risk from radioactivity, but that might apply elsewhere as well.

[People trivializing the serious, thoughtful effort being made to contain and manage fission plant waste really insult the people who are trying to do that competently and professionally.]

One problem is that the criteria for containment are primarily political. There may be people that trivialize the problem, but there are as many or more that greatly exaggerate both the level of containment needed, and the difficulty of attaining any given level. Demonstrate that wastes could safely be contained for a billion years, and they’d immediately start demanding two billion.

The second problem is the difference in the way nuclear & fossil fuel wastes are regarded. A high level of safe waste disposal is demanded of nuclear, while fossil fuel plants simply get to dump their waste into the atmosphere, despite the problems that those wastes are creating.

Looking at nuclear in isolation is not the way to get reasonable answers. We need to look at the amount of waste created in the process of generating some unit of power, quantify the harm that might be caused by that waste (with various risk scenarios &c), and figure in the cost of adequately disposing of that waste. As it is, too many of those factors are arbitrarily set to either infinity or zero, so we never get a meaningful answer.

Re growing more trees to mop up carbon, there are indeed companies who are planting trees and selling the carbon credits — Planktos, for example, is planting forests in Hungary.

Unfortunately, it’s not entirely clear that this is an effective mitigation strategy. There was a recent study that showed the amount of carbon sequestered in a new forest varies greatly depending on inputs like nutrients and water. Afforestation at high latitudes can actually cause increased warming by contributing to “albedo flip.”

For my money, the biggest bang for the buck is to reforest tropical zones. Healthy tropical forests will cause clouds to appear above them, increasing albedo.

Re Jim Cripwell’s remarkable claim that the 2007 arctic sea ice minimum was a “one of a kind anomaly, which…is unlikely to ever be repeated”:

I invite you to explain the “Northern Hemisphere Sea Ice Anomaly” graph at Cryosphere Today:

http://arctic.atmos.uiuc.edu/cryosphere/IMAGES/current.anom.jpg

Is your claim that this curve will reverse its trend and start heading back to 0 next summer?

Reply to 61 Hank Roberts:

1. Nobody is trivializing the hard work you do.

2. From Wikipedia, the free encyclopedia

http://en.wikipedia.org/wiki/Background_radiation

The exposure for an average person is about 360 millirems/year, 80 percent of which comes from natural sources of radiation. The remaining 20 percent results from exposure to artificial radiation sources, such as medical X-rays and a small fraction from nuclear weapons tests.

Re #47:

I’d just like to point out that reforestation is not the inverse of deforestation. When a native forest is stripped from the landscape (as is tragically occurring here in Australia at an alarming rate in North-west Tasmania – see here) you lose an incredibly complex and diverse ecosystem and all the associated flora and fauna that are dependent on it. You also release huge amounts of CO2 into the atmosphere, much of it stored in the soils and in peaty deposits. When companies replant forests you generally get monocultures, often in rows. Often these are effectively biological deserts from the point of view of the flora and fauna that once lived there. And it is likely to take hundreds or even thousands of years for the amount of carbon stored in soils and peat deposits to approach the pre-clearance levels. Even if an area is clear-felled and immediately allowed to regrow from the natural seedbank in the soil, the resultant forest will be a pale shadow of its former self.

Edward, re 72, I’m not doing any hard work!

I’m just reading along here.

I was referring to the work being done by the people working on solving the problem of storing waste from fission piles – a real problem now, that has to have real solutions that stand up over a very long period.

re 52

“I know little authoritative about French waste, though we all know that Greenpeace requires more than a bucket of salt. ”

I made the comment originally re appropriate amount of salt because Greenpeace does have an obvious bias that is going to color what they have to say.

That said, “we all know” is a bit of a jump. Like them or dislike them, Greenpeace has been in many ways the little yappy dog that has often caused people to pay attention to issues revolving around the preservation of what remains of the biosphere.

I’m not particularly fond of some of their methods; I often think they are their own worst enemy. But that is a far cry from suggesting they deserve to be hand-waved off in such a dismissive fashion.

[Response: Greenpeace does occasionally tend toward stating extreme consequences without all the necessary qualifications as to chance of occurrence, but when it comes to climate, they stay much closer to real science than organizations like Heartland Institute which occupy the right of the political spectrum. At a talk a Heartland “climate science advisor” gave to the U. of C Republicans last week, it was confidently stated that most of the IPCC were Greenpeace members, and that solar variability accounted for global warming. These aren’t exaggerations or minor bendings of the truth; they’re outright lies. –raypierre]

Exactly! Nuclear power is the wave of the future as France has long known and practiced.

Re: #48

Timo,

This is essentially how I look at nuclear waste disposal. It needs to be unmade. #58 Ray objects that this is not energetically feasible, and I do agree that we need to look for the lowest energy methods. There have been some interesting low energy nuclear reaction experiments in Japan that appear to transmute Cs and not too long ago there was a report that half-life could be reduced in very cold metals. Those things are a little far out, but hopeful. But, for now, I think of nuclear energy as energy that must be paid back. The recent article in Philosophical Transactions by Hansen et al. makes me think that part of our fossil fuel energy use may need to be paid back as well.

On the other hand, I am not nearly as pessimistic as the IPCC about how much that might cost.

Chris

Regarding #65 quote: “Apparently no-one wants to make a wager on the surface area of arctic sea ice in March and September 2008. Probably because, like me, they are certain that the 2007 extreme ice minimum in the arctic was a one of a kind anomaly,…”

Not really, Jim. It’s because it’s not one occurrence that counts; it’s the trend over a number of years. Every summer for the past several years, the sea ice in the arctic has been declining. Maybe it will rebound some in the next year or the year after. It’s like losing weight: the trend isn’t straight down but moves in a wavy pattern on its way down. Basing a conclusion on what happens in any one year is inconclusive in itself.

Re tamino (#44): “In large part I agree; the people who are still listening to the turkeys are those whose disbelief is ideological, and no amount of reason will persuade them.”

Unfortunately this is not entirely true. My brother (who is a smart guy not entirely driven by ideology) has come to believe the McIntyre stuff. He knows that I have a background in this. So he is always poking me about the latest ClimateAudit wondering whether I have seen the light yet…. I’m sure it’s not generally interesting but perhaps amusing for people here to know that my homework this week is showing him (with code included) that red noise does not make hockey sticks (yes, we return again to hockey sticks….). It’s like going back to grad school except with politics and family added.

Or, more likely, people understand that volume, not area, is the most important statistic.

Great site!

I offer some ideas about why people find it hard to accept the science behind climate change here: http://sanseverything.wordpress.com/2007/11/18/climate-change-vs-human-inertia-part-1/

I’d be interested in any thoughts – cheers.

Re: #79 (Andrew)

Indeed!

Good luck, it sounds like you’ve got quite a challenge. Unfortunately, denialists make it *easy* for their adherents; you really don’t have to study hard, think hard, or know anything, to buy into their arguments. In some cases you actually have to be *ignorant* (like believing that red noise makes hockey sticks). It’s all too easy and comfortable to accept their drivel.

Trying to be brief on several issues:

Carbon is permanently sequestered as: fossil fuel or limestone or dolomite? During the last 3 million years CO2 has been unusually low because of sequestration due to unusual ??? I think I got the wrong answer last time on this one.

In 1968 I almost got a job at a company named Numec at Apollo PA near Pittsburgh. The job was designing nuclear batteries for heart pacemakers. The batteries used nuclear “waste” to generate heat. Numec was in the nuclear fuel reprocessing business. Numec “lost” half a ton of nuclear fuel that it was reprocessing. The lost fuel somehow found its way to Israel. Numec went out of business, and the US quit reprocessing nuclear fuel. The real issue is not waste but keeping Israel and other countries from stealing the “spent” fuel to reprocess back into fuel. You can skip the expensive centrifuging step that way. My solution is to do the reprocessing in government owned government operated [GOGO] plants. Free enterprise can be too free. Yucca mountain must be guarded because the so-called “waste” is very valuable.

Coal-fired power plants, besides burning 4 million tons of carbon per 1000 megawatts per year, also put out 4 tons of uranium with each 4 million tons of carbon. Average coal contains 1 part per million uranium. Illinois coal contains up to 103 parts per million uranium. We could fuel our nuclear power plants from coal smoke and cinders. Thorium from coal can be bred into uranium. Industrial grade Chinese coal contains so much arsenic that families who steal it for cooking die of arsenic poisoning. Coal contains so much of so many elements that coal smoke and cinders should be considered ore and perhaps nuclear “waste.” We don’t treat coal waste that way because familiarity breeds contempt? Coal is almost pure carbon, except for the URANIUM, ARSENIC, LEAD, MERCURY, THORIUM, Antimony, Cobalt, Nickel, Copper, Selenium, Barium, Fluorine, Silver, Beryllium, Iron, Sulfur, Boron, Titanium, Cadmium, Magnesium, Calcium, Manganese, Vanadium, Chlorine, Aluminum, Chromium, Molybdenum, Zinc and so on that are coal’s impurities. See:

http://www.ornl.gov/ORNLReview/rev26-34/text/coalmain.html
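The uranium figure above follows directly from the parts-per-million concentration, and a rough sketch makes the check easy to repeat. It assumes the 4 million tons burned per year can be treated as the mass the ppm figures apply to, which is a simplification; the function name `uranium_tons` is invented for illustration.

```python
# Inputs are the figures quoted in the comment above. Treating the burned
# tonnage as the mass that the ppm concentration applies to is an assumption.
COAL_TONS_PER_YEAR = 4_000_000  # per 1000 megawatts of capacity, as quoted


def uranium_tons(coal_tons, uranium_ppm):
    """Tons of uranium contained in coal_tons of coal at uranium_ppm."""
    return coal_tons * uranium_ppm / 1_000_000


print(uranium_tons(COAL_TONS_PER_YEAR, 1))    # average coal, 1 ppm
print(uranium_tons(COAL_TONS_PER_YEAR, 103))  # upper Illinois figure, 103 ppm
```

At 1 ppm this reproduces the 4 tons per year quoted above. At the 103 ppm upper figure for Illinois coal, the same arithmetic gives about 412 tons per year, a number implied by the quoted figures rather than stated in the comment.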

Natural background radiation is 1000 times what you get from a nuclear power plant or 10 times what you get from a coal fired power plant of the same capacity. Radiation is a “Greenpeace” or propaganda or phony or Allègre/Courtillot issue. We date ancient mummies with the radioactive carbon they ate thousands of years ago. NOW can we get back to the climate?

Raypierre,

Your writing style is superb. I wish your posting was not so infrequent.

Jim Cripwell, I’m very happy to take your money on 2008 arctic sea ice area. Sorry not to respond earlier but work pressure has prevented me from keeping up with RC threads lately.

As I have mentioned in other threads in the past, the early November re-freeze this year was predicted by the sea ice experts. I had an email exchange with Bill Chapman at CT in October on this exact subject, in which he said (26th October): “I fully expect the extreme anomaly to reverse course very rapidly any day now. During the severe melt back this summer, I was expecting the anomaly to really get severe this autumn as the climatological ice growth season kicked in and the excess heat absorbed by the ocean delayed the refreezing. So far, it has played out just as expected.” I hope he doesn’t mind my posting this small quote from private email.

To run quickly over the physics of this: the arctic winter is very cold and very long, because of the earth’s axial tilt. Any open water in the arctic which is not warmed by currents will freeze during the winter. Last winter, next winter, every winter; the axial tilt isn’t changing. The only way this could be prevented would be for the water to start the winter so warm that six months of arctic night is insufficient to freeze it. The arctic ocean is very far from being this warm yet: we will enter the 2008 melt season with the arctic ocean entirely frozen, as always (although total arctic sea ice is likely to be lower than the 1979-2000 average, because there will be less ice on the arctic periphery – in the North Atlantic, North Pacific, Greenland Sea, etc – partly because of warmer water, partly because there has been less ice in the arctic ocean to drift into peripheral waters).

However, the arctic climate is strongly warming, and arctic weather (wind patterns) and decadal climate systems (the AO) have also been in a warming state. Models provide good evidence that the overall warming trend of the arctic is CO2 driven. Whether or not the weather patterns and AO are also driven by CO2 is (as I understand it) an open question. Regardless, the most dramatic effect of all these warming systems is the reduction of the summer ice area minimum, and the opening up of a huge anomaly at and after the minimum. Any effect on the winter ice area maximum is bound to be smaller, because of the simple physics of the system, as explained above.

Various posters have said that volume is more important than area. Volume is very important, because of the latent heat, and the steady loss of ice thickness over several decades is striking, as is the recent loss of multi-year ice, to which 2007 adds a huge contribution. But area is also important, because the loss of sea ice area drives the albedo feedback, and for a number of other reasons (wave-mixing, sub-ice systems, etc). One could reasonably say that volume is important in the winter (when the whole ocean is frozen anyway, and albedo is less relevant, and the increase in volume of ice shows the latent heat flow), but that area is important in the summer (when albedo is critical).

Now, on to the important business at hand. I’m not a rich man, and I have many other things to do with my money, so shall we say 50 Euros? Odds complicate things, so let us construct a bet which we are both happy to take at even odds. For the reasons outlined above, I don’t think a bet on the 2008 maximum area is an interesting one. So let us bet on the 2008 minimum area.

The 1979-2000 average minimum is a little over 5 million square kilometres. 2005 was a record low of 4.01 million square kilometres; 2006 was not quite so low; 2007 was around 3 million square kilometres. I propose a cut-off number of 4.01 million square kilometres: the 2005 number. That is, I’ll put money on the 2008 minimum being lower than any previous record except 2007.

If Cryosphere Today reports a 2008 minimum area of more than 4.01 million square kilometres, I’ll pay you 50 euros. If Cryosphere Today reports a 2008 minimum area of less than 4.01 million square kilometres, you’ll pay me 50 euros.

Do we have a bet?

PS to Jim: I would also be prepared to bet 1 euro per ten thousand square kilometres either side of 4.01 million, up to a maximum of 50 euros (e.g. if it’s 4.45 million, I pay you 44 euros, if it’s 3.85 million, you pay me 16 euros).
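To make the terms unambiguous, here is a small sketch of both versions of the bet as described above. The function names and the sign convention (positive means the proposer pays Jim, negative means Jim pays the proposer) are my own choices for illustration. An exact tie at the cut-off is not covered by the flat bet as stated, so the sketch returns 0 in that case.

```python
CUTOFF_MKM2 = 4.01  # the 2005 minimum, in million square kilometres
MAX_STAKE = 50      # euros


def flat_bet(min_area_mkm2):
    """Even-odds bet: +50 if the minimum is above the cut-off, -50 if below."""
    if min_area_mkm2 > CUTOFF_MKM2:
        return MAX_STAKE
    if min_area_mkm2 < CUTOFF_MKM2:
        return -MAX_STAKE
    return 0  # tie: not specified in the bet, assumed to be a push


def sliding_bet(min_area_mkm2):
    """1 euro per 10,000 square km either side of the cut-off, capped at 50."""
    # 1 million square km = 100 units of 10,000 square km; round() absorbs
    # floating-point noise in the subtraction.
    units = round((min_area_mkm2 - CUTOFF_MKM2) * 100)
    amount = min(abs(units), MAX_STAKE)
    return amount if units > 0 else -amount


print(sliding_bet(4.45))  # proposer pays Jim
print(sliding_bet(3.85))  # Jim pays the proposer
```

The two calls reproduce the examples in the PS: 44 euros one way for 4.45 million, and 16 euros the other way for 3.85 million. A repeat of the 2007 figure of around 3 million would hit the 50 euro cap.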

@ Edward Greisch

Natural background radiation is 1000 times what you get from a nuclear power plant or 10 times what you get from a coal fired power plant of the same capacity. Radiation is a “Greenpeace” or propaganda or phony or Allègre/Courtillot issue.

I take it you mean that a nuclear power plant delivers 1000 times the natural background radiation and a coal plant 10x?

Apart from that – yes, familiarity breeds obliviousness, not contempt. Owners of coal fired plants would like us to not think of the byproducts being released, I think.

Looks like the key question on nuclear energy is: Is there sufficient economic fuel supply? For Uranium there is a study that answers with NO:

http://energiekrise.de/uran/docs2006/REO-Uranium_5-12-2006.pdf

Next question: Is there any other economic reactor type?