In January, we presented Lesson 1 in model-data comparison: if you are comparing noisy data to a model trend, make sure you have enough data for them to show a statistically significant trend. This was in response to a graph by Roger Pielke Jr. presented in the New York Times Tierney Lab Blog that compared observations to IPCC projections over an 8-year period. We showed that this period is too short for a meaningful trend comparison.
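To make Lesson 1 concrete, here is a minimal sketch of how the uncertainty of a fitted linear trend shrinks as the record gets longer. It assumes independent year-to-year noise, which ignores autocorrelation and so is optimistic. The noise level and trend value are illustrative assumptions, not numbers taken from the data discussed here.

```python
import math

def slope_standard_error(n_years: int, noise_sd: float) -> float:
    """Standard error of a least-squares trend fitted to n_years of annual
    values with independent noise of standard deviation noise_sd."""
    # Sum of squared deviations of the years 0..n-1 from their mean
    sxx = n_years * (n_years**2 - 1) / 12.0
    return noise_sd / math.sqrt(sxx)

# Illustrative assumptions (hypothetical, not values from the post)
NOISE_SD = 0.1   # deg C of interannual scatter
TREND = 0.02     # deg C per year

for n in (8, 17):
    se = slope_standard_error(n, NOISE_SD)
    # Call the trend detectable if it exceeds twice its standard error
    detectable = TREND > 2 * se
    print(f"{n:2d} years: trend s.e. = {se:.4f} C/yr, detectable at 2 sigma: {detectable}")
```

Under these assumptions the 8-year trend is not distinguishable from zero at two standard errors, while the 17-year trend is.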

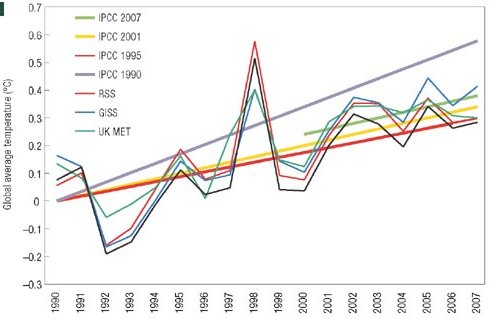

This week, the story has taken a curious new twist. In a letter published in Nature Geoscience, Pielke presents such a comparison for a longer period, 1990-2007 (see Figure). Lesson 1 learned – 17 years is sufficient. In fact, the very first figure of last year’s IPCC report presents almost the same comparison (see second Figure).

Pielke’s comparison of temperature scenarios of the four IPCC reports with data

There is a crucial difference, though, and this brings us to Lesson 2. The IPCC has always published ranges of future scenarios, rather than a single one, to cover uncertainties both in future climate forcing and in climate response. This is reflected in the IPCC graph below, and likewise in the earlier comparison by Rahmstorf et al. 2007 in Science.

IPCC Figure 1.1 – comparison of temperature scenarios of three IPCC reports with data

Any meaningful validation of a model with data must account for this stated uncertainty. If a theoretical model predicts that the acceleration of gravity in a given location should be 9.84 ± 0.05 m/s², then the observed value of g = 9.81 m/s² would support this model. However, a model predicting g = 9.84 ± 0.01 would be falsified by the observation. The difference is all in the stated uncertainty. A model predicting g = 9.84, without any stated uncertainty, could neither be supported nor falsified by the observation, and the comparison would not be meaningful.
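The gravity example can be written down as a small sketch. The function name `check_prediction` is hypothetical, and treating the stated range as a hard bound is a simplifying assumption; a probabilistic reading of the error bars would give a graded answer rather than a yes or no.

```python
from typing import Optional

def check_prediction(predicted: float, uncertainty: Optional[float], observed: float) -> str:
    """Compare an observation with a prediction and its stated uncertainty."""
    if uncertainty is None:
        # Without a stated uncertainty the comparison carries no meaning
        return "not testable"
    if abs(observed - predicted) <= uncertainty:
        return "supported"
    return "falsified"

observed_g = 9.81  # m/s^2, the observed value from the example

print(check_prediction(9.84, 0.05, observed_g))  # wide stated range
print(check_prediction(9.84, 0.01, observed_g))  # narrow stated range
print(check_prediction(9.84, None, observed_g))  # no stated range
```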

Pielke compares single scenarios of IPCC, without mentioning the uncertainty range. He describes the scenarios he selected as IPCC’s “best estimate for the realised emissions scenario”. However, even given a particular emission scenario, IPCC has always allowed for a wide uncertainty range. Likewise for sea level (not shown here), Pielke just shows a single line for each scenario, as if there wasn’t a large uncertainty in sea level projections. Over the short time scales considered, the model uncertainty is larger than the uncertainty coming from the choice of emission scenario; for sea level it completely dominates the uncertainty (see e.g. the graphs in our Science paper). A comparison just with the “best estimate” without uncertainty range is not useful for “forecast verification”, the stated goal of Pielke’s letter. This is Lesson 2.

In addition, it is unclear what Pielke means by “realised emissions scenario” for the first IPCC report, which included only greenhouse gases and not aerosols in the forcing. Is such a “greenhouse gas only” scenario one that has been “realised” in the real world, and thus can be compared to data? A scenario only illustrates the climatic effect of the specified forcing – this is why it is called a scenario, not a forecast. To be sure, the first IPCC report did talk about “prediction” – in many respects the first report was not nearly as sophisticated as the more recent ones, including in its terminology. But this is no excuse for Pielke, almost twenty years down the track, to talk about “forecast” and “prediction” when he is referring to scenarios. A scenario tells us something like: “emitting this much CO2 would cause that much warming by 2050”. If in the 2040s the Earth gets hit by a meteorite shower and dramatically cools, or if humanity has installed mirrors in space to prevent the warming, then the above scenario was not wrong (the calculations may have been perfectly accurate). It has merely become obsolete, and it cannot be verified or falsified by observed data, because the observed data have become dominated by other effects not included in the scenario. In the same way, a “greenhouse gas only” scenario cannot be verified by observed data, because the real climate system has evolved under both greenhouse gas and aerosol forcing.

Pielke concludes: “Once published, projections should not be forgotten but should be rigorously compared with evolving observations.” We fully agree with that, and IPCC last year presented a more convincing (though not perfect) comparison than Pielke.

To sum up the three main points of this post:

1. IPCC already showed a very similar comparison as Pielke does, but including uncertainty ranges.

2. If a model-data comparison is done, it has to account for the uncertainty ranges – both in the data (that was Lesson 1 re noisy data) and in the model (that’s Lesson 2).

3. One should not mix up a scenario with a forecast – I cannot easily compare a scenario for the effects of greenhouse gases alone with observed data, because I cannot easily isolate the effect of the greenhouse gases in these data, given that other forcings are also at play in the real world.

Thanks for this discussion. Full text of the letter can be found here:

http://sciencepolicy.colorado.edu/admin/publication_files/resource-2592-2008.07.pdf

1. IPCC already showed a very similar comparison as Pielke does, but including uncertainty ranges.

RESPONSE: Indeed, and including the uncertainty ranges would not change my conclusion that:

“Temperature observations fall at the low end of the 1990 IPCC forecast range and the high end of the 2001 range. Similarly, the 1990 best estimate sea level rise projection overstated the resulting increase, whereas the 2001 projection understated that rise.”

2. If a model-data comparison is done, it has to account for the uncertainty ranges – both in the data (that was Lesson 1 re noisy data) and in the model (that’s Lesson 2).

RESPONSE: I did not do a “model-data comparison”. One should be done, though, I agree.

3. One should not mix up a scenario with a forecast – I cannot easily compare a scenario for the effects of greenhouse gases alone with observed data, because I cannot easily isolate the effect of the greenhouse gases in these data, given that other forcings are also at play in the real world.

RESPONSE: Indeed. However, I made no claims about attribution, so this is not really relevant to my letter.

Thanks again, and I’ll be happy to follow the discussion.

I’m glad to see someone write about these points. There appears to be – this is not a comment on Pielke’s letter specifically – some real misunderstanding about how the modelling part of the IPCC assessments happens. Many critiques of the IPCC results read as if there is either one climate model or one set of climate models run by the lead authors of the IPCC. The letter to Nature Geoscience, though written by someone very familiar with the IPCC process, is troubling because it employs language as well as analysis that suggests, intentionally or not, that the IPCC only created one future projection. Again, intentional or not, the language diminishes the IPCC effort from a broad, comprehensive international review and assessment to a singular piece of research conducted by an individual body of scientists.

Some observations.

Isn’t this a case of the more scenarios you have, the more likely you are to get one that is correct, purely by chance?

Doing a quick count of the number of points within each prediction’s error bounds, compared to the number of points outside the error bounds, none of the predictions has been particularly good.

So the little black dots on the IPCC are the points that Pielke connects, but Pielke leaves out the trend line?

It seems like the data from 2008 is not included in the observed data – how come?

[Response: That’s easy. This is annual data, and 2008 isn’t done yet. – gavin]

I thought meteorite showers would add to the warming.

The only reason that I can imagine why the Nature editors would have published this is that they simply didn’t know that IPCC had published virtually the same analysis (well, better) a year earlier. There is nothing new at all in this letter. Are these letters peer-reviewed at Nature Geoscience? How could this have slipped through?

I wonder what is going on at Nature? First the embarrassing Pielke-flop at the start of their new climate blog last year, now this right at the start of their new geoscience journal. Are they doing this deliberately, to get PR through RealClimate?

Perish the thought that anyone should question you great gurus.

Seriously though – I am not a climate scientist, I have an electrical engineering degree and have worked in the IT industry for over 20 years, including in a university environment.

The arrogance I have seen over the years coming from universities, and which I am afraid I see regularly on this blog, only tends to confirm the mild but increasing scepticism I have developed towards Climate Change – aka global warming.

I say this particularly in reference to (7) above.

Chuckle. And Scientific American’s blog just published Milloy’s fear-the-fluorescent press release debunked by Pharyngula long ago, as news. It’s an election year. Support your local coal company ….

Re #3: Some observations.

Isn’t this a case of the more scenarios you have, the more likely you are to get one that is correct, purely by chance?

Doing a quick count of the number of points within each prediction’s error bounds, compared to the number of points outside the error bounds, none of the predictions has been particularly good.

To some extent, that’s true. But it depends on the directions of the trend lines as to whether or not the result is purely chance or, well, better guessing than purely chance ;)

What bothers me about people who rebut IPCC projections / models / forecasts / educated guesses (pick one, depending on your own personal ideology …) is that they do not provide charts / graphs / projections from their own pet theories. Reading articles such as this does not make me feel particularly warm and fuzzy about climate trends, but gee, it would be nice if people who are inclined towards such things at least provided solar aa-index data on top of their latest kvetching about the IPCC. How hard can it be to drop this graph on top of the recent global temperature trend and see which fits better: the “the more scenarios you have, the more likely you are to get one that is correct, purely by chance?” collection of scenarios (etc) or one’s own pet theory?

Just wondering, does anyone know whether, in that Hansen 2006 paper where he compares his 1988 projections to observations, uncertainty is displayed or referred to? I can’t seem to find the paper anywhere in pubs.giss.nasa.gov but do recall a graph showing Scenarios A, B, C but no uncertainty range.

Joc #9, I suggest that the primary source of your skepticism is the fact that you are ignorant of the science. No shame in that. You were never trained as a scientist, just as I am not trained in IT (and so would not presume to question you on a matter of IT). Ignorance is 100% curable. Willful ignorance is mortal.

Re 9

Well, as another engineer, I am embarrassed that you have decided to be skeptical about global warming because you do not like the attitude of climate scientists. Good grief! Where on earth did you get your engineering training?

In my (singular) experience, a Correspondence is sent to the authors whose work is commented on to see if any differences can be resolved and if not, and Nature wants to publish, the affected authors can respond. It is important to recognize that these are not Letters and are less formal. Roger, was this your experience? I recall a post saying that model runs are being collected in a database now. I would think that this covers Roger’s point 1) and I would think that the place for comparison with other work would be directly in the peer reviewed literature rather than in the IPCC report which surveys what is available. There are different scenarios (projections) from assessment to assessment because different literature is surveyed as time progresses. So, Roger’s point 2) is really up to the diligence of the student. The latest report certainly highlights areas where work has advanced.

Through observation, electrical engineers seem to have the highest sceptical element of any discipline I’ve seen to date, to the point of militancy. I don’t understand it.

I’m an electrical engineer and have accepted the reality of AGW for many many years. Our trade magazine, IEEE Spectrum, published an extensive article about AGW around 1999 (although they certainly caught a bit of flak over it).

Recognize that many electrical engineers populate the power companies and some may have a built-in bias. One of my professors in school also worked for the local Edison and announced to our class, that PCBs were “no more dangerous than a peanut butter and jelly sandwich.” On a related note, the Atlantic Monthly just ran an article of why so many suicidal terrorists are also educated as engineers.

Anyway, I’m just thankful that I work in a multi-disciplinary environment with scientists, engineers, and others, where everyone is expected to contribute and work together. I also happen to believe the health threat of PCB contamination is real, and am decidedly not a terrorist.

Be careful,

“However, a model predicting g = 9.84 ± 0.01 would be falsified by the observation.”

Assuming the error distribution in the model is Gaussian, what you mean is that there would be roughly only 1 chance in 300 that the model would be right. Now if your model had predicted the gravity at, let’s say, 3000 points, then you would expect to find such errors at about 10 of those points. If not, there would be something wrong with your error estimates.

In fact, one way to check if researchers have not “massaged” their data is to check for this kind of stuff. For example, when a straight line fit is presented only about 68% of the points should have error bars touching the best fit.
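The figures in this comment can be checked with a short sketch, assuming Gaussian errors and reading the stated uncertainty as one standard deviation (so that 9.81 against 9.84 ± 0.01 is a three-sigma miss). The function name `two_sided_tail` is hypothetical.

```python
import math

def two_sided_tail(n_sigma: float) -> float:
    """Probability that a Gaussian error exceeds n_sigma in absolute value."""
    return math.erfc(n_sigma / math.sqrt(2.0))

p3 = two_sided_tail(3.0)
print(f"chance of a 3-sigma miss: {p3:.4f} (about 1 in {1 / p3:.0f})")
print(f"expected 3-sigma misses among 3000 points: {3000 * p3:.1f}")

# Fraction of points whose one-sigma error bars should touch the best fit
within_one_sigma = 1.0 - two_sided_tail(1.0)
print(f"fraction within 1 sigma: {within_one_sigma:.3f}")
```

With these definitions the three-sigma tail comes out near 0.27%, or about 1 in 370, so roughly 8 of 3000 points, a little fewer than the rounded figures above. The one-sigma fraction is close to the 68% quoted.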

Can we please not go into the engineer thing again – I am extremely unimpressed by anecdotes that seem no better than “we had a cold winter here, so AGW is false.”

If someone has actually done a proper survey of AGW-scepticism by highest degree, current jobs, ages, etc, and controlled for population sizes, ages, etc … then please point at it.

[Not an EE].

Re 3:

It seems to me that the ‘predictions’, even with error bounds, must represent a smoothed trend line – the noise, year to year, is pretty substantial; the error bounds seem to represent, not year to year variability, but a range of underlying trends that a smoothed trend line, over some reasonable period of time, would be expected to follow. But then I’m just a philosopher…corrections/explanations would be welcome.

[Response: Correct. The spread of the data points is interannual variability (see our Lesson 1 for more); it has nothing to do with the uncertainty range of the future scenarios (which applies to the long-term trend, not individual years). -stefan]

Last night I prepared my next Nature publication! I took one of my favorite graphs from the IPCC report, simplified it a bit for the Nature readership, and off we go. I will let you know how it fares.

joc said in #9:

How does arrogance or any other personal attitudinal trait on anyone’s part affect the scientific evidence for global warming due to increasing atmospheric concentrations of IR-excitable gases like CO2? Or the evidence tying that increase to fossil fuel use, production of cement and other human activities?

Dismissing real evidence as a reaction to real or perceived arrogance is not worthy of the name skepticism.

joc says:

I saw that coming.

So you decide whether scientific theories are true or not based on the demeanor or conduct of those holding the theory, rather than based on whether the evidence points that way or not?

#22 – It might not be the scientific ideal, but judging what people say on the basis of how they act is a well-established part of “human nature”.

If you want to do anything other than occupy the moral high ground you have to accept and work with this knowledge by treating people with respect and the benefit of the doubt. One of the things that happens a bit too much on the internet is that the extremes of each debate shout past each other and the people in the middle just ignore it.

Re. #20, good point. I wonder if we could apply bootstrap or similar methods to the ensemble forecasts, and thereby put some uncertainty bounds/confidence intervals in the graphs to distinguish between the confidence in the mean trend lines, and confidence in the noise affecting the trends from one year to the next. Probably seems a bit counter-intuitive, but you can have a confidence interval around a confidence interval (hope that makes sense).
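As a rough illustration of the bootstrap idea, the sketch below resamples a small ensemble of model trends to put a confidence interval on the ensemble mean. The ensemble values are made up for illustration, and the percentile bootstrap is only one of several variants; nothing here reflects how the IPCC ranges were actually derived.

```python
import random
import statistics

def bootstrap_mean_ci(values, n_resamples=2000, level=0.95, seed=0):
    """Percentile bootstrap confidence interval for the mean of values."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(values)
    # Resample with replacement and record the mean of each resample
    means = sorted(
        statistics.fmean(rng.choices(values, k=n)) for _ in range(n_resamples)
    )
    lo_idx = int((1 - level) / 2 * n_resamples)
    hi_idx = int((1 + level) / 2 * n_resamples) - 1
    return means[lo_idx], means[hi_idx]

# Hypothetical ensemble of model trends in deg C per decade (made-up numbers)
ensemble_trends = [0.15, 0.18, 0.20, 0.21, 0.22, 0.24, 0.25, 0.27, 0.30]

low, high = bootstrap_mean_ci(ensemble_trends)
print(f"ensemble mean trend: {statistics.fmean(ensemble_trends):.3f}")
print(f"95% bootstrap interval for the mean: [{low:.3f}, {high:.3f}]")
```

The interval describes confidence in the mean trend only; the year-to-year noise around that trend would need a separate estimate.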

Re:22 Meltwater’s comment:

“How does arrogance or any other personal attitudinal trait on anyone’s part affect the scientific evidence for global warming due to increasing atmospheric concentrations of IR-excitable gases like CO2? Or the evidence tying that increase to fossil fuel use, production of cement and other human activities?

“Dismissing real evidence as a reaction to real or perceived arrogance is not worthy of the name skepticism.”

Amen. Attitudes won’t change the fact that the Earth has already warmed about 0.7C over the past century and mostly in the last few decades, and the warming has been accelerating. Attitudes won’t change the fact that glaciers and sea ice have been diminishing and that Greenland glaciers and Arctic sea ice in particular are losing ice more rapidly than predicted only a few years ago. It’s about data and evidence, not attitude.

I am also an electrical engineer. I recognize “skepticism” as always healthy where scientific conclusions are concerned, just as it is in engineering. What is clearly not healthy are the nuts who conclude, based on a few years’ data, that global warming is fictional (careful – your political agenda might be showing). Clearly, if one only looked at temperature data from 1998 until 2007, one could be easily misled into thinking that the world’s climate is not getting warmer. Even 1990 to 2007 is a very short time frame on which to base conclusions. One could go back to the 1930s warm period and also decide that global warming is fictional. However, they ignore much data other than year-to-year temperature readings, and they ignore basic physics (carbon dioxide affects solar radiation how?). Glaciers recede, and atmospheric carbon dioxide and sea level continue their long upward trend. Biological observations continue to suggest changing climate. We can argue over the extent to which human activity is the cause of all this – we don’t actually know with a high level of accuracy how much human activity is affecting climate. However, simple logic says that we do affect climate – how could we not? This, also, is basic physics, and basic logic. Skepticism is healthy; drawing conclusions based on political ideology is not.

“A scenario only illustrates the climatic effect of the specified forcing – this is why it is called a scenario, not a forecast.”

I think this is the crux of the matter for me. Even dealing solely with climate change and all its ramifications, we’re missing the holistic picture. There are all the other environmental problems, including those caused by the very actions that cause GW (e.g. burning fossil fuels). And there are non-environmental problems, as well. Many of these are interrelated or interactive.

To be fair there are also good things happening (innovations, new determinations followed by effective actions to “do good,” etc), but it seems with all the negative consequences (for humans and other living things), an honest forecast bringing in everything conceivable would probably be much much worse than a climate change scenario…..

Many of the various impacts might not be additive (or subtractive), but multiplicative. For example, if smoking increases lung cancer risk, say, 5 times, and work exposure to asbestos, say, 10 times, then a smoker exposed to asbestos might have not a 15 times greater risk, but a 50 times greater risk.

So if we can imagine a total earth-human system (including solar input and other extraterrestrial impacts), then climate change itself might be considered a “forcing” on a very sensitive system that wreaks extreme havoc, whereas CC alone would have only caused considerable harm.

I agree that uncertainty must be stated.

However if the uncertainty is of the same order as the signal in the forecast (scenario if you will) then the meaning of the forecast is pretty much nil. Which is unfortunately the case with double CO2 scenario/forecast.

Dear RC,

I can’t seem to get into the body of this article, but I can get to see the comments. Would this be a problem your end or mine?

Many thanks.

Regards

Abi

[Response: Click on the title of the post, or on the “(more…)” link. – gavin]

I can’t stop thinking about the question of whether Pielke would have known that IPCC had published the same kind of analysis already. If he did – would he really have tried to sell this to Nature as new information? But if he didn’t – how could he have overlooked the very first graph in the IPCC report? It beats me.

re #3 and #11

“Isn’t this a case of the more scenarios you have, the more likely you are to get one that is correct, purely by chance?”

No. Each scenario starts with a set of assumptions and results in an outcome. When evaluating scenarios for accuracy you pick the one where the assumptions match what happened and then see if the outcome matches. You don’t find a scenario where the outcome matches observations and say “we were right”. You find the scenario where the assumptions are closest to observations, and then see if the outcome matches.

For example, Scenarios may start with assumptions on how much CO2 man will dump in the environment each year, how many volcanoes will blow up, etc…. To verify the model, you take the scenario where the amount of CO2 dumped, volcanic activity, etc… is closest to observations.
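The procedure described in this comment can be sketched in two steps: first pick the scenario whose assumptions are closest to what actually happened, then check whether that scenario's outcome range contains the observation. The `Scenario` class, the scenario names and all numbers are hypothetical placeholders, not IPCC values.

```python
from dataclasses import dataclass

@dataclass
class Scenario:
    name: str
    assumed_emissions: float  # hypothetical units
    warming_low: float        # lower bound of projected warming, deg C
    warming_high: float       # upper bound of projected warming, deg C

def closest_scenario(scenarios, observed_emissions):
    """Step 1: select by assumptions, never by how well the outcome fits."""
    return min(scenarios, key=lambda s: abs(s.assumed_emissions - observed_emissions))

def outcome_matches(scenario, observed_warming):
    """Step 2: check the observation against the selected scenario's range."""
    return scenario.warming_low <= observed_warming <= scenario.warming_high

# Made-up scenarios for illustration only
scenarios = [
    Scenario("low", 6.0, 0.10, 0.30),
    Scenario("medium", 8.0, 0.20, 0.45),
    Scenario("high", 10.0, 0.35, 0.70),
]

chosen = closest_scenario(scenarios, observed_emissions=8.3)
print(chosen.name, outcome_matches(chosen, observed_warming=0.33))
```

The order of the two steps is the point: selecting on the outcome first would be exactly the "correct purely by chance" problem raised earlier in the thread.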

Thanks, Gavin. I already tried both your suggestions, with no luck. I’m a regular visitor to your site and I’ve never had this problem. The links in the post work, but not the “more…” or the title ones. I’ll assume the problem is with my equipment.

Thanks

Abi

Climate Science has published a weblog “Real Climate’s Agreement That The IPCC Multi-Decadal Projections Are Actually Sensitivity Model Runs” [http://climatesci.org/2008/04/11/real-climates-agreement-that-the-ipcc-multi-decadal-projections-are-actually-sensitivity-model-runs/] on one aspect of this posting from Real Climate.

Regarding the “arrogance” discussion – Laypeople don’t have the time or education to evaluate scientific claims based on the scientific data, especially in a field as complex as climate science. We have to rely on the judgment and expertise of scientists who attempt to explain complex analyses in simplified ways we can understand. Therefore it is essential for laypeople to judge the character and motivations of a scientist making a claim, in order to establish in their mind whether this person is a trustworthy authority. When someone making a claim displays arrogance, or an unwillingness to objectively consider or respond to criticism (especially by other scientists), a layperson might reasonably consider this as an indication that the scientist is not being objective, whether or not others consider that to be true.

If a goal of realclimate is to inform laypeople of issues in climate science, then arrogance in this forum will only appeal to its most unquestioning adherents while driving away those who prefer to keep an open mind about a complex and dynamic field. Skepticism is a requirement of scientific thought, and should be applied to claims made by both sides.

Incidentally, Roger Pielke Jr. was lead author of a recent Nature (Nature period, not Nature Geoscience) article on IPCC underestimation of technological challenges. It seems like a good piece of work from what I’m able to read about it in Science Daily.

http://www.sciencedaily.com/releases/2008/04/080402131140.htm

I’d be very interested if the RC crew had any thoughts about this one. I haven’t heard of anyone else focusing on imminent technical problems in this manner.

RE the IPCC, my sense is that even with various scenarios and confidence intervals it might be underestimating CC. That’s just a gut feeling, plus I heard some scientist who contributed to the IPCC and was aware of the process and politics make that claim (but I can’t remember where I heard or read that). The point made was something to the effect that the underlying science was good, but that government officials ratcheted the reports down and diluted them (perhaps he was only talking about the summaries).

But there are 2 other points. The one I made above, about all the other problems. While the IPCC’s purview is only climate change and all its specific ramifications, and cannot be held at fault for not addressing ALL problems besetting us, the problem is that people looking at the report might forget or not keep in mind all those other problems. (It’s the same criticism I give future planners who fail to take climate change and its effects into consideration.)

The other point is that so far, it seems to me that the progression of assessment reports and climate science studies in general seems to keep indicating “it’s worse than we thought.” That’s not only from better, more sophisticated models, but from new evidence and data, new variables (or the new ability to include them in calculations), new approaches, and new theoretical insights coming in. I know we aren’t going into a permanent runaway scenario, but I’m wondering when (ten years, 100, 1000, 100,000 years from now?) we’ll start getting reports indicating “it’s better than we thought.”

So maybe Pielke is correct — scientists might not be giving us accurate scenarios. They might be underestimating the problem. Maybe the actuality lies above the confidence intervals or error bars. I suppose we could wait and find out…..Or here’s an idea, why don’t we reduce our GHGs down to nearly nil and just call it quits on this experiment (assuming we haven’t already pushed it past the tipping point).

Re Abi @ 33: Try deleting your cookies.

RE: 37 “I’m wondering when we’ll start getting reports indicating “it’s better than we thought.”

I haven’t seen it, but I heard a director of “The 11th Hour” reiterating the theme of the film: In nature, there is no waste. Throughout natural systems, what’s waste for one creature is nourishment for another… with the obvious exception of Homo sapiens.

To reply to your question, I would expect things to start improving some decades or centuries after the point where we acknowledge that the underlying assumptions of industrial civilization are deeply flawed. We haven’t yet suffered enough to grasp the necessity of such a fundamental turnaround in consciousness, most probably.

Here is another new study suggesting that the current CO2 based models don’t fit historical temperatures.

http://www.sciencedaily.com/releases/2008/04/080410140531.htm

[Response: i) GCMs are not ‘CO2 based’ – hypotheses for climate change might or might not be, ii) the Eocene is not historical (it is pre-historical), iii) this is an interesting paper since it uses a little more imagination than usual. – gavin]

Daniel C. Goodwin (36) — See Climate Progress, linked under the Other Opinions section of the sidebar, for critically negative commentary on that Nature article by the blog owner, Dr. Joseph Romm.

There’s also a new effort by Roger Pielke Jr. to claim that there is “opposition to adaptation to climate change.” In this case, if “adaptation” means “governments should do nothing”, then yes, there is opposition. If we define “Adaptation to climate change” as ending the use of fossil fuels and quickly building renewable energy infrastructure, then yes, there is opposition – from established fossil fuel interests, mostly. Thus, regardless of how you define “adaptation”, Roger Pielke Jr. is correct on that one.

As far as his letter goes, any realistic examination of predictions and observations made by climate scientists would have to consider a far longer time period – for predictions, a good beginning would be the period shown here: 1990-2100. For observations, the choice would be the period from 1860-present.

There are some valid criticisms of the IPCC process, however. It would be more useful if they projected atmospheric composition scenarios directly, rather than use such fuzzy concepts as “high technology adaptation” or “low population growth” in their scenarios. Also, the future doesn’t end 100 years from now, does it? How about a 250-year forecast, instead? Finally, they’re only now starting to try and make carbon-cycle forecasts.

I don’t know why Nature would publish such a letter, but then I don’t know why Nature would so openly promote a bogus concept like “clean coal”:

Putting the carbon back: The hundred billion tonne challenge, Aug 2006

Coal-to-gas: part of a low-emissions future? Feb 2008, Nature

Liquid fuel synthesis: Making it up as you go along, Dec 2006

Coal-fired power plant to bury issue of emissions, Mar 2003, Nature

Carbon storage deep down under, July 2007

All those stories promote the idea that sequestration of all the carbon produced by the combustion of coal is a plausible notion. They use qualifiers, but if you read them they all have the same rosy tone about what is an extremely implausible plan, on both scientific and economic grounds.

To their credit, Nature has covered the fact that the current funding for renewable energy is miserably low, and is being sabotaged by a push to fund clean coal technology: Nature Jobs: Renewable-energy funds threatened, 2001.

> maybe Pielke is correct — scientists might not be giving us

> accurate scenarios

Scenarios aren’t, by definition, “accurate” — definition:

“A plausible description of how the future may develop, based on a coherent and internally consistent set of assumptions about key relationships and driving forces (eg, rate of technology changes, prices). Note that scenarios are neither predictions nor forecasts.”

http://www.gcrio.org/ipcc/techrepI/appendixe.html

Matthew Brunker, I agree that skepticism is essential to science, but skepticism does not consist of rejecting the evidence. To simply reject evidence and clothe it in the guise of scientific objectivity is an affront to the scientific method and all those who care about it.

John Cook,

Here is the 2006 paper, and

here is the updated graph, which you can find at gistemp bottom of the page.

And, of course, here is the original 1988 paper.

Re #32:

My comment about whether or not the scenarios were “pure chance” was not to say that they are “pure chance”, but that when someone presents a number of forecasts it becomes more difficult to determine if the forecast was “correct” or a “lucky guess”.

While my position on climate change is based on the economics of creating the environmental disaster needed to reach some value, such as 450ppm, I must confess that the climate changes in response to SC24 and the trend in the solar aa-index may (or may not) change my thoughts about AGW. Which is to say, I fall into the camp called “We’d better stop emitting CO2 or else we all go broke.” If that prevents global warming, great. But CO2 sequestration is, in my opinion, a long term economic disaster, even if it somehow saves the whales in the process.

The temperature data over the past several decades look to most people like an upward trend leavened by statistical fluctuation. Not everyone is convinced of course, but I wonder if graphing dew point temperatures would show something more definite? It seems that humidity should rise with more evaporation in a warming world. I don’t see much about that in casual perusal of presentation and argument about GW. I wish I could find charts showing dew points over the years, does anyone have data or links or references to same?

So if I understand things correctly, the IPCC “scenarios” show a range of global temperature values due to the uncertainties of future forcings. And then Pielke treated these uncertainties as model uncertainties; i.e. the variance one would expect in multiple runs of a model that employed some stochastic equations. Is this correct? If so, then Pielke is seeing trends (older models predicted temps that were too high and newer models predicted temps that were too low) where they don’t exist. Am I totally out to lunch? Or are Nature’s editors?

On my blog, I published a fairly lengthy rebuttal of a piece in The Australian and had several fairly robust, impolite responses from an anonymous denialist (or several of similar attitude). For those who don’t like the attitude they think they are seeing here, look at the responses to my article.

One of the allegations in comments on the article was that none of the models have any predictive power, and that all the “refinements” are at best attempts at retrofitting as new data become available. To test this claim, I took a few examples from Hansen’s 1988 paper and found that, while the fit was not exact, the temperature trend was within the error bars of subsequent measurement, and the temperature distribution map, while out by a fair amount, was not totally wrong. In other words, if you had used the 1988 paper to predict the next 20 years, you would not have been far out on the temperature trend, though you would not have done so well if, e.g., you had based long-term agriculture policy, or anything else that required knowing the exact location of the warming, on the paper. Not bad, I thought, for an early study with some significant simplifications.
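A check of the kind described above, whether a predicted trend falls within the error bars of the observed one, can be sketched roughly as follows. This is only an illustration under stated assumptions: the temperature series is synthetic (a made-up 0.02 °C/yr trend plus an alternating fluctuation), not the GISTEMP record or anything from the 1988 paper, and the function names `ols_trend` and `trend_consistent` are hypothetical. The plain least-squares standard error used here also ignores autocorrelation, which tends to make real error bars wider.

```python
import math


def ols_trend(years, temps):
    """Least-squares slope (deg C per year) and its standard error."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Sum of squared residuals around the fitted line.
    ssr = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    # Standard error of the slope, assuming independent residuals.
    se = math.sqrt(ssr / (n - 2) / sxx)
    return slope, se


def trend_consistent(slope, se, predicted, k=2.0):
    """True if the predicted trend lies within k standard errors of the fit."""
    return abs(slope - predicted) <= k * se


# Synthetic 20-year series (assumption: NOT real observations).
years = list(range(1988, 2008))
temps = [0.02 * (y - 1988) + 0.05 * (-1) ** i for i, y in enumerate(years)]

slope, se = ols_trend(years, temps)
```

Calling `trend_consistent(slope, se, predicted)` with a projection's trend then gives a crude yes/no answer. With a real temperature record the same two functions apply unchanged, but a serious comparison would also need the uncertainty range stated for the projection itself.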

I didn’t think this piece of work was particularly innovative or complete, but found it useful to do it myself rather than rely on someone else, who may have had vested interests to protect. In view of the revelations here, should I reformat it in Nature’s style and offer it for publication? :)

PS: I sent the following letter to The Australian and they didn’t publish it:

Your new form of journalistic parody in which you get a person to read a web site in an area in which they are not qualified, then pose as an expert, is very amusing.

However, you have pretty much exhausted the options in climate science, and the articles are starting to get repetitious. May I suggest a few new areas to try? An economist could write an excellent piece on gravitational waves. A political science graduate’s understanding of the physics of quantum computing would be exciting. I’m also keen to see a sports reporter’s take on junk DNA.

I hope these ideas will be of use; I look forward to further examples of the genre.

With regard to post #35, I am a lay person who comes to RealClimate looking for an understanding of the issues, not authoritative pronouncements from experts. It may be true that I will never understand the science in all its intricacies. But since this is something that may have profound effects on my later years and particularly on the lives of my children, reading RealClimate represents my decision to make the effort to understand as far as I am able. I sympathize with the feeling that we just don’t have time to learn everything that we need to know in order to make wise choices at the polls and in our communities. Our difficulty is not helped by the committed shallowness of our media. I have found the information presented on this site to be of sufficient value to overlook the human foibles of the people presenting it. (In fairness to the authors of the site, I think they do a very good job.) Those who feel abraded by personalities or unsympathetic discourse and as a consequence cannot absorb the lessons available here, are the poorer for it. A polite request for moderation in tone is never amiss. On the other hand, we who seek bear the greater responsibility both for remaining polite and for making the effort to extract the information which the site’s owners have no obligation to provide.