Over multiple generations of CMIP models, Arctic sea ice trend predictions have gone from much too stable to about right. Why?

The diagnostics highlighted in our model-observations comparison page are currently all temperature-based, and show overall that climate models have been doing well on these trends for decades. But there has been increasing attention to trends in non-temperature variables, and there, model performance is more mixed (Simpson et al., 2025). As we’ve discussed before, model-observation discrepancies can arise from three causes: the observations could be wrong (unrealized biases etc.), the models could be wrong (which can encompass errors in forcings as well as physics), or the comparison could be inappropriate.

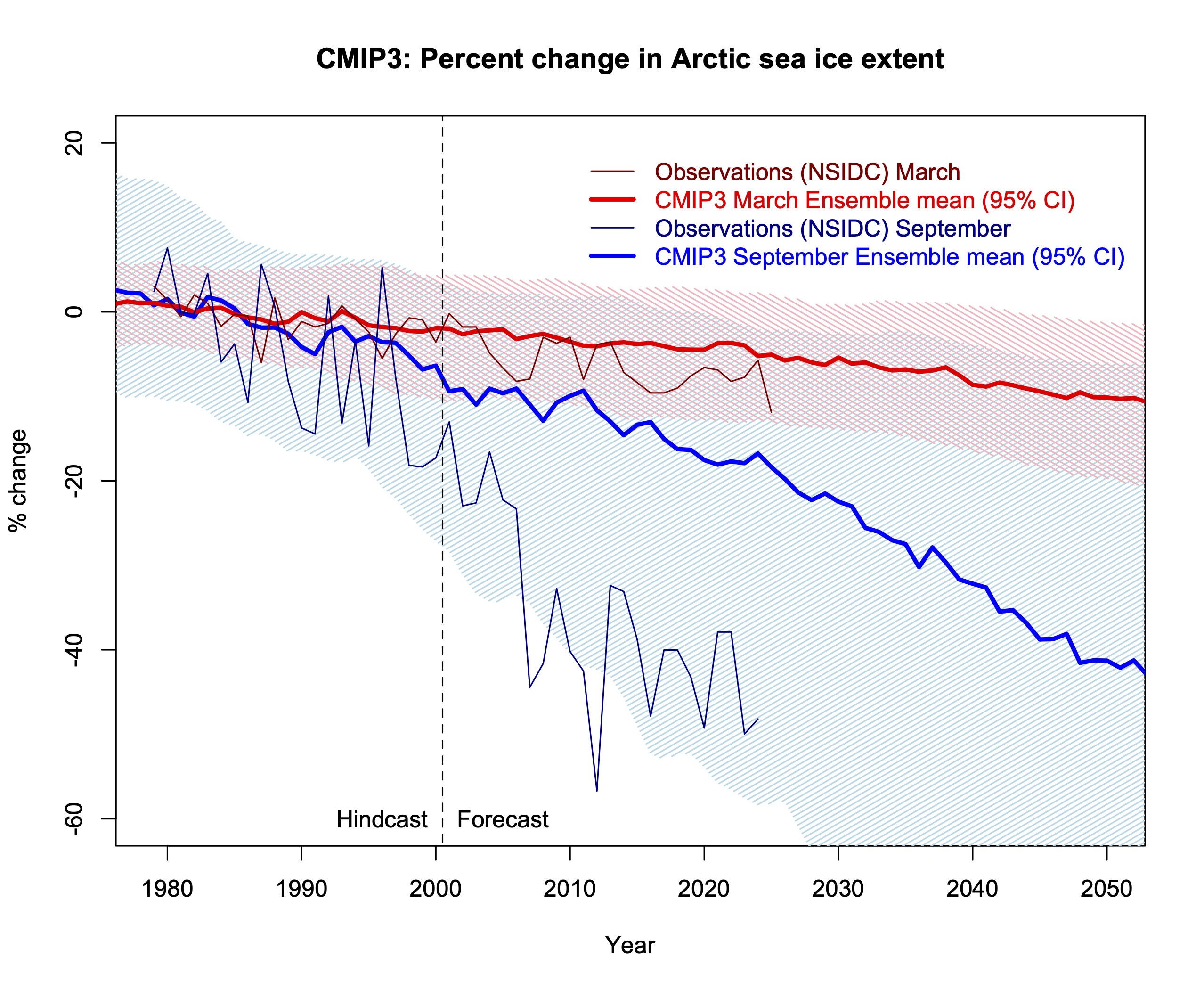

One of the most high-profile ‘misses’ in coupled modeling over the last few decades was the failure of the model projections in CMIP3 (circa 2003/4 vintage) to match the rapid losses in Arctic sea ice that started to become apparent in the middle of that decade (Stroeve et al., 2007), and that were compounded by the record summertime losses of sea ice in 2007 and then 2012. With an additional decade of observations, how does that look now?

In a word, the CMIP3 Arctic sea ice projections were, and remain, terrible. The ensemble mean predicted rate of change of September Arctic sea ice extent is less than half that observed (-4.5 %/decade vs. -11 %/decade for 1979-2024), and only five individual model simulations (out of 46) have a loss rate greater than 10 %/decade (95% spread is [-12,-0.7] %/decade). The March trends are also under-predicted, but to a lesser degree. There is no real ambiguity in the observed trends, nor in the comparison (though extent is a little trickier to compare than area), and so these discrepancies were very likely due to model failures – insufficient resolution to capture the polar sea ice dynamics, overly simple sea ice physics, biases in the Arctic ocean simulations, etc. Analyses have shown that errors in the absolute amount of sea ice were also correlated with the errors in the trends.
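The trend numbers above are linear trends expressed as a percentage change per decade, summarized across each ensemble by its mean, the share of runs losing ice faster than 10 %/decade, and a 95% spread. The sketch below shows one way such summaries could be computed; it is not the processing behind the figures. Normalizing by a 1979-2000 baseline mean, and the helper names `percent_per_decade` and `ensemble_summary`, are assumptions for illustration, and the input series is synthetic.

```python
import numpy as np


def percent_per_decade(years, values, base_start=1979, base_end=2000):
    """Least-squares linear trend expressed as % of a baseline mean, per decade.

    Assumption: normalize by the mean over base_start..base_end (inclusive);
    a different baseline would change the numbers somewhat.
    """
    years = np.asarray(years, dtype=float)
    values = np.asarray(values, dtype=float)
    slope, _intercept = np.polyfit(years, values, 1)  # units per year
    in_base = (years >= base_start) & (years <= base_end)
    baseline = values[in_base].mean()
    return 100.0 * (slope * 10.0) / baseline


def ensemble_summary(trends, threshold=-10.0):
    """Summarize per-run trends (%/decade): mean, share faster than threshold, 95% spread."""
    trends = np.asarray(trends, dtype=float)
    mean = float(trends.mean())  # one simulation = one vote
    frac_faster = float(np.mean(trends < threshold))  # losses greater than 10 %/decade
    lo, hi = np.percentile(trends, [2.5, 97.5])  # central 95% of the runs
    return mean, frac_faster, (float(lo), float(hi))


# Synthetic example (not observations): a steady decline of 0.08 million km^2 per year.
years = np.arange(1979, 2025)
extent = 7.0 - 0.08 * (years - 1979)
print(round(percent_per_decade(years, extent), 2))  # -12.99

mean, frac, spread = ensemble_summary([-12.0, -8.0, -11.0, -4.0, -10.0])
print(mean, frac)  # -9.0 0.4
```

Because the trend is divided by a baseline mean, the same absolute loss reads as a larger percentage when the baseline is smaller, which is one reason the choice of normalization should be stated alongside any %/decade figure.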

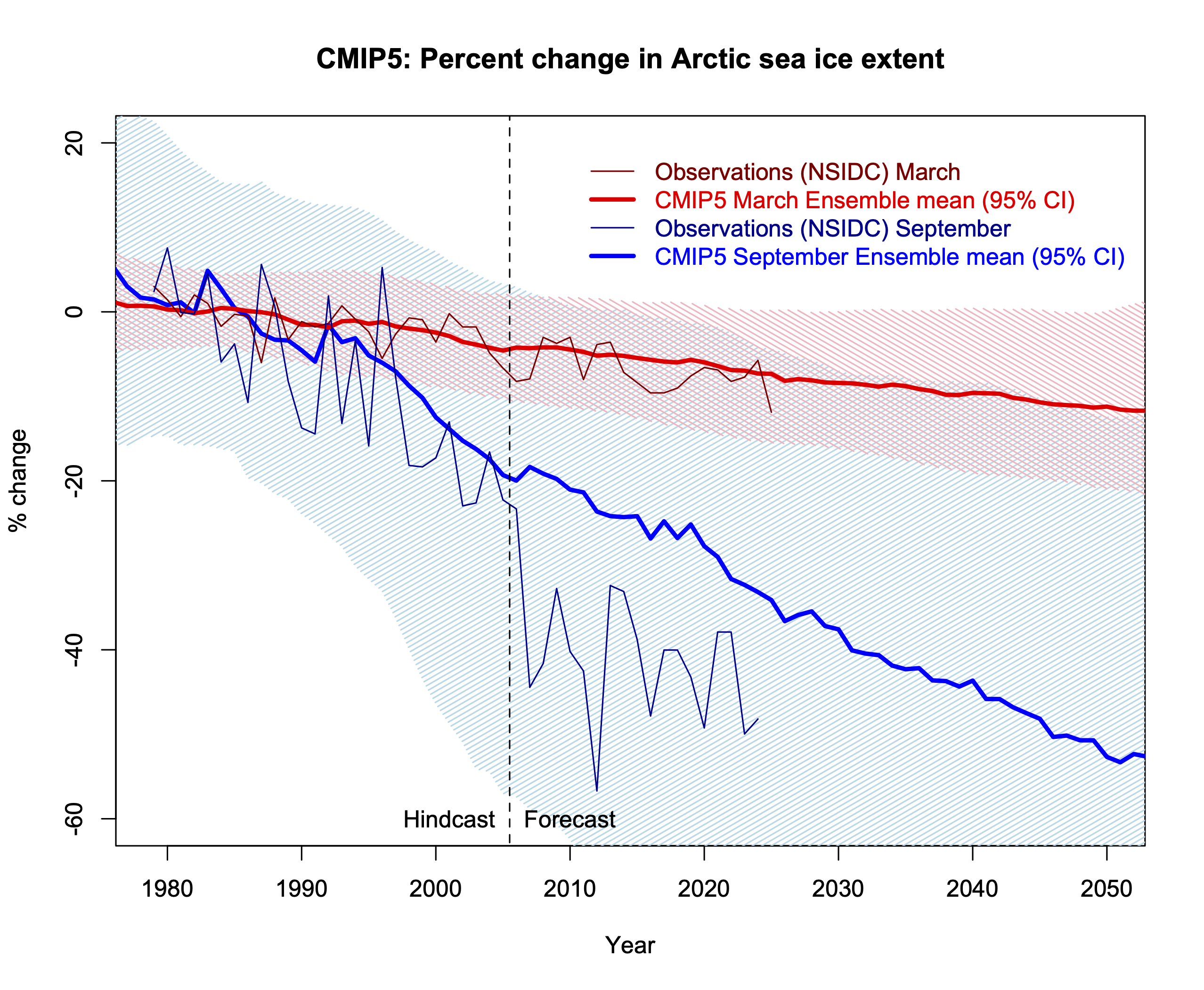

Development of the CMIP5 models was ongoing as these discrepancies were manifesting, and there were improvements in sea ice physics and dynamics, increased resolution and a reduction in the overall climate biases. The simulations in CMIP5 were conducted around 2011-2013 and used historical forcings up to 2005 and scenarios thereafter. Did that make any difference?

Closer, but no cigar. The spread in the CMIP5 models is larger (a function of greater variability), and the observations now fall more within the spread, but the September ensemble mean trend (-8 %/decade) is still a bit too weak. But nearly 40% of the 107 individual simulations (95% spread is [-20,-1.4] %/decade) now have losses greater than 10 %/decade. The March trends are mostly well represented, but there are still large variations in the absolute extent.

There was a longer gap before CMIP6, but those models were developed through to 2017/8 or so, and so developers were well aware of the ongoing discrepancies (Stroeve et al., 2012). Again, there were improvements in sea ice physics, dynamical schemes, forcings (the addition of black carbon impacts on snow and ice albedo for instance), and again, improvements in resolution and in the base climatology.

As a minor aside, from 2007 to 2014 there was a spate of non-peer-reviewed claims from a few scientists (Peter Wadhams and Wiesław Masłowski notably) that used non-linear statistical fits to the observed sea ice indices to predict essentially ice-free conditions by 2013, or 2016 or so. These predictions were not based on any physical insight or model, were heavily criticised by other scientists at the time (I recall a particularly spicy meeting at the Royal Society in 2014 for instance!), and (unsurprisingly) were not validated. But this kind of stuff is perhaps to be expected when the mainstream models are not providing credible projections?

Anyway, back to CMIP6. Third time’s a charm?

Actually, this isn’t bad. The CMIP6 ensemble mean for September area trends is now -11 %/decade (observed: -13 %/decade) and the March trends are spot on. Note that the observed loss in ‘area’ is slightly larger than the trend in ‘extent’ (13 %/decade vs. 11 %/decade), and I’m using area here because that is what is available. The spread for September trends is [-21,-3] %/decade, which is slightly tighter than in CMIP5, and 40% (again) have losses greater than 10 %/decade.
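Since the CMIP6 comparison switches from extent to area, it may help to see how the two quantities differ. Under the conventional definitions, extent counts the full area of every grid cell with at least 15% ice concentration, while area weights each such cell by its concentration. A minimal sketch on a toy grid follows; the function name and grid values are hypothetical, and treating cells below the threshold as open water for both quantities is an assumption that follows one common convention.

```python
import numpy as np


def area_and_extent(concentration, cell_area, threshold=0.15):
    """Return (area, extent) from a gridded sea ice concentration field.

    concentration: fractions in [0, 1]; cell_area: same shape (e.g. km^2).
    Assumption: cells below `threshold` count as open water for both quantities.
    """
    conc = np.asarray(concentration, dtype=float)
    cells = np.asarray(cell_area, dtype=float)
    icy = conc >= threshold
    extent = float(np.sum(cells[icy]))            # whole cell counts if >= 15% ice
    area = float(np.sum(conc[icy] * cells[icy]))  # each cell weighted by its concentration
    return area, extent


# Toy 2x2 grid with unit cell areas (hypothetical values).
conc = [[0.9, 0.5], [0.1, 0.0]]
cells = [[1.0, 1.0], [1.0, 1.0]]
print(area_and_extent(conc, cells))
```

On this toy grid the area is 1.4 and the extent is 2.0: the 50%-covered cell adds a full unit to extent but only half a unit to area. Because area responds to thinning of the pack within cells that stay above the threshold, its trend need not match the extent trend.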

What lessons can be drawn here?

As we have often stated, models are always wrong, but the degree to which they can be useful needs to be addressed – by variable or by model generation or by model completeness etc. The utility of the CMIP6 ensemble (and presumably the upcoming CMIP7 models) for Arctic sea ice is clearly higher than the CMIP3 ensemble, but there doesn’t appear to be a single thing that needed to be fixed for that to happen. Rather, an accumulation of improvements – in physics, resolution, completeness, forcings – has led to a gradual improvement in skill (not just in the sea ice trends!).

As Simpson et al. (2025) noted, an increasing number of climate-quality diagnostics now have long enough time series and emerging signals of change to provide tests of the model trends. The history of Arctic sea ice comparisons shows that it might be premature to conclude that any specific discrepancy implies that something is fundamentally wrong, or that climate modeling is in a ‘crisis’ (Shaw and Stevens, 2025); it may well be that these discrepancies will resolve themselves in the course of ‘normal’ model development (and as the observed signals become clearer). Or not ;-).

Note on sources: CMIP3 (Mar, Sep) and CMIP5 (historical, rcp45) processed extent data are from Jacob Dörr (Notz et al., 2020) and Alex Jahn (via Julienne Stroeve and Patricia Derepentigny), and the CMIP6 area data are from the U. of Hamburg data portal (courtesy of Dirk Notz). Ensemble means are over the whole ensemble with one simulation = one vote. Also, I haven’t screened the CMIP6 models by climate sensitivity (as I’ve done for the temperatures). These choices might make small differences, but would not affect the main conclusions.
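The ‘one simulation = one vote’ choice matters when some models contribute many more runs than others. A common alternative, ‘one model = one vote’, averages each model’s runs first so that large initial-condition ensembles do not dominate. A sketch of both weightings, with hypothetical model names and trend values:

```python
from collections import defaultdict
from statistics import mean


def one_simulation_one_vote(runs):
    """Average over all runs, so models with more runs carry more weight."""
    return mean(trend for _model, trend in runs)


def one_model_one_vote(runs):
    """Average each model's runs first, then average across models."""
    by_model = defaultdict(list)
    for model, trend in runs:
        by_model[model].append(trend)
    return mean(mean(trends) for trends in by_model.values())


# Hypothetical September trends in %/decade; "ModelA" contributes three runs.
runs = [("ModelA", -12.0), ("ModelA", -10.0), ("ModelA", -11.0), ("ModelB", -5.0)]
print(one_simulation_one_vote(runs))  # -9.5
print(one_model_one_vote(runs))       # -8.0
```

With a deliberately lopsided toy ensemble like this the two weightings differ by 1.5 %/decade; how much they differ for the real CMIP ensembles depends on how unevenly runs are distributed across models.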

References

- I.R. Simpson, T.A. Shaw, P. Ceppi, A.C. Clement, E. Fischer, K.M. Grise, A.G. Pendergrass, J.A. Screen, R.C.J. Wills, T. Woollings, R. Blackport, J.M. Kang, and S. Po-Chedley, "Confronting Earth System Model trends with observations", Science Advances, vol. 11, 2025. http://dx.doi.org/10.1126/sciadv.adt8035

- J. Stroeve, M.M. Holland, W. Meier, T. Scambos, and M. Serreze, "Arctic sea ice decline: Faster than forecast", Geophysical Research Letters, vol. 34, 2007. http://dx.doi.org/10.1029/2007GL029703

- J.C. Stroeve, V. Kattsov, A. Barrett, M. Serreze, T. Pavlova, M. Holland, and W.N. Meier, "Trends in Arctic sea ice extent from CMIP5, CMIP3 and observations", Geophysical Research Letters, vol. 39, 2012. http://dx.doi.org/10.1029/2012GL052676

- T.A. Shaw and B. Stevens, "The other climate crisis", Nature, vol. 639, pp. 877-887, 2025. http://dx.doi.org/10.1038/s41586-025-08680-1

- D. Notz and SIMIP Community, "Arctic Sea Ice in CMIP6", Geophysical Research Letters, vol. 47, 2020. http://dx.doi.org/10.1029/2019GL086749

Thanks, Gavin! This reminds me of past conversations in which I tried to explain that 1) more than one climate variable matters; 2) yes, climate science was and is cognizant of errors; 3) it is willing to admit that errors happen; and 4) it works to learn from them. Also, as Ray has said in the past, that errors are not always overpredictions, and by extension that “uncertainty is not a friend.”

GAVIN SAYS:

The utility of the CMIP6 ensemble (and presumably the upcoming CMIP7 models) for Arctic sea ice is clearly higher than the CMIP3 ensemble, but there doesn’t appear to be a single thing that needed to be fixed for that to happen. Rather, an accumulation of improvements – in physics, resolution, completeness, forcings – have led to a gradual improvement in skill (not just in the sea ice trends!).

Title:

Have Climate Models Earned Their Arctic Sea Ice “Improvement” — Or Are We Just Smoothing Over 23 Years of Failure?

The recent RealClimate article reviewing predicted Arctic sea ice trends across CMIP ensembles (CMIP3 through CMIP6) raises some troubling questions about how the modeling community is now reframing past model failures as part of a “gradual improvement” narrative.

Let’s be clear: for over two decades, CMIP3 and CMIP5 generated Arctic sea ice projections that were not just slightly off; they were deeply, persistently, and systematically wrong. Most of those models predicted summer sea ice persisting well into the late 21st century, yet we now face plausible scenarios of an ice-free Arctic September in the 2030s or earlier. That’s not a minor deviation; that’s a massive forecasting failure for a major climate system component.

And now, suddenly, we’re told that CMIP6 is doing better — as though this were the natural result of steady scientific progress. But this glosses over some vital issues:

1. Where Is the Post-Mortem on CMIP3–5?

There is zero transparency in most public-facing articles about why CMIP3–5 failed so badly on sea ice. What specific physics, parameterizations, forcings, or feedbacks were missing or mishandled? Without a detailed diagnosis, how can we be sure CMIP6 isn’t just accidentally “right” — or worse, tuned to appear so?

Science is supposed to be about falsifiability and explanation. Yet there’s been no real accounting for how those older ensembles went so wrong, just vague talk of “improvements in resolution and physics.”

2. Improvement… or Post-Hoc Tuning?

The fact that CMIP6 now better aligns with observations after years of criticism about underestimation naturally raises the question: are models now being subtly calibrated or post-tuned to fit the observed data more closely? That’s not inherently unscientific, but it is problematic if:

— It’s not disclosed.

— It gives a false sense of predictive skill.

— It masks ongoing weaknesses within individual models.

3. The Ensemble Mean Hides the Outliers

The RealClimate article relies heavily on smoothed ensemble means, which — while useful for broad comparison — can obscure the fact that many individual model runs still perform poorly. This statistical smoothing flattens out the actual spread and makes the results look more robust than they are.

Even Gavin Schmidt and Roger Pielke Jr. have, in other contexts, pointed out that over-reliance on ensemble means can hide critical flaws. The question isn’t whether the average is better — it’s whether the individual models have learned to capture key dynamics, or whether we’re just cherry-picking those that happen to now align with observation.

4. Coincidence or Competence?

If CMIP6 now “gets it right,” we must ask: is this a real validation of the physical models — or just a statistical coincidence? After 23 years of flawed outputs, we’re owed more than hand-waving and retrospective optimism.

Where’s the evidence that these improvements stem from first-principles physics, and not just smarter curve-fitting or scenario tweaking?

Final Thought

We’re talking about one of the most sensitive climate indicators on Earth — Arctic sea ice — and the narrative now seems to be: “We underestimated it for two decades, but trust us, CMIP6 is better.”

Fine. Then prove it — with transparency, with detailed analysis of past errors, and with testable physical justifications for current model success.

Until then, it’s not denialism to question whether this “success story” is being oversold. It’s just responsible skepticism — the foundation of good science and high public communication standards.

William, why do you fill this page with so many words that don’t actually say much? You could instead have asked Gavin or whomever for examples of how the understanding of physics related to ice sheets has improved. I asked the Google AI search tool and got a detailed answer.

Model Improvement or Statistical Coincidence?

I posed the questions, as suggested to nigelj (in my prior comment), to Google’s Gemini.

An Assessment of Predicted Arctic Sea Ice Trends and the Discourse on Climate Model Evolution

Ultimately, the commenter’s inquiry into whether improvements stem from genuine physical validation or statistical coincidence underscores a legitimate scientific demand for rigorous attribution of model success.

This report concludes that the commenter’s points are valuable for fostering responsible skepticism and advocating for enhanced transparency in climate science communication.

Please let me know if you would like to see the assessment in full. I could share a link to it.

Nigelj says

2 Jun 2025 at 1:44 AM

Nigelj — you asked Gemini a question and got a good answer. Great! That’s the point. Most of us now use AI to clarify technical points — and I did exactly that, too. Instead of throwing shade, try comparing insights. Maybe you’ll learn something.

Meanwhile, if you ever feel ready to engage with the actual points I raised — like model tuning, physical diagnostics, or sea ice divergence — the floor is yours.

This will hopefully replace my original comment, which was culled before the 5 Jun 2025 at 12:24 AM post.

Addendum – Following Up on Arctic Sea Ice Model Skill

Gavin writes:

“One of the most high profile ‘misses’ in coupled modeling over the last few decades was the failure of the model projections in CMIP3 […] to match the rapid losses in Arctic sea ice.”

Yes — and that’s putting it mildly. The ensemble mean and most individual CMIP3–5 model runs not only failed to capture the magnitude of sea ice loss, they fundamentally misunderstood its timing, trajectory, and sensitivity. This was not a minor calibration error — this was a systemic failure, sustained over multiple generations of models.

CMIP6: A Step Forward?

Figure 3 now presents percent change in sea ice area, not extent — yet the CMIP6 ensemble still fails to match the observed trajectory.

The 2007–2012 collapse remains a clear outlier, still not captured by the mean, nor bounded by plausible confidence intervals.

The spread of model projections is disturbingly wide. For 2014, the 95% CI for September ice area ranges from ~3% to over 90% loss. By 2038, it ranges from 15% to well over 100%. These are not scientifically defensible bounds — they suggest fundamental incoherence, not physical realism.

Why is there no work on Arctic sea ice volume (PIOMAS)? Surely it is the most critical component for forecasting a blue ocean event and everything else.

Why Invoke Wadhams?

Introducing extreme lowball forecasts (e.g. from Wadhams) serves only to make flawed model ensembles appear “reasonable” by comparison. But this rhetorical tactic distracts from the central issue: the models meant to inform policy, the IPCC, and public understanding have not performed well — and still don’t.

If mainstream models lack credibility, say that plainly. Don’t frame it as a hypothetical:

“Perhaps to be expected when the mainstream models are not providing credible projections?”

That should have been the subtitle:

“Mainstream CMIP models still do not provide credible projections for Arctic Sea Ice.”

Backward-Averaged Success?

You cite a -11%/decade September sea ice area trend in CMIP6 (vs -13% observed) from 1979–2025 as evidence of “not bad” performance. But that’s a post hoc average, folding newer data into older models. It tells us nothing about the forecasting skill of these models in real time. And it masks critical errors during the key period (2007–2012) when the models were most needed.

The issue isn’t whether CMIP6 looks better when smoothed over 45 years — it’s whether it can tell us anything reliable about 2025–2040. From the September % change spread you cite — clearly, it can’t.

Usefulness?

You quote the classic line:

“All models are wrong, but some are useful.”

Then please — quantify the usefulness. How useful were CMIP3–5 for Arctic sea ice? What impact did they have on IPCC projections, or on the scientific literature? What decisions were based on them?

More importantly: how is CMIP6 useful now — when the projected 2025 Arctic September ice loss ranges from ~5% to >100%? That isn’t predictive power — it’s noise. It suggests no practical understanding of how Arctic sea ice will behave in the next 12 months, let alone decades.

Discrepancies “Resolving Themselves”?

Gavin concludes:

“…these discrepancies will resolve themselves in the course of ‘normal’ model development… Or not ;-)”

This sounds flippant. Discrepancies don’t “resolve themselves.” Either:

— The physics improves,

— The parameters are constrained,

— The resolution is refined,

— Or the tuning is made more explicit.

Or else, the model remains wrong.

Worse, your statement seems to simultaneously defend the current ensemble and anticipate its future irrelevance. That’s not scientific humility — that’s hedging. And it weakens public trust.

Final Thoughts

You ask us to continue treating CMIP as the foundation of climate projection — yet when major failures persist for over 20 years, the response is: “They’re improving. Probably. Maybe. Let’s wait.”

That’s not good enough. We deserve:

— A clear technical post-mortem on CMIP3–5 sea ice errors;

— A transparent discussion of how CMIP6 was corrected (or tuned) in response;

— And a candid assessment of what confidence we should place in CMIP7.

Until then, it’s hard to see this as anything more than an exercise in statistical cosmetics.

It doesn’t matter how much lipstick you put on a pig — it’s still a pig.

The ensemble mean might look smoother now, but the model’s skill remains unresolved.

Accuracy is not the same as aesthetics.

Thank you for your scientific elaboration on the ‘coupled’ issue that persists. The expression “have your cake and eat it too” comes to mind.

Follow-up: Persistent Divergence Between Observations and CMIP6 Projections

One detail that still doesn’t get the attention it deserves: the last 18 years of observed September Arctic Sea Ice minimum trends (2007–2024) remain consistently out of step with the CMIP6 ensemble mean — not just as isolated years, but in the overall trajectory, both in magnitude and rate of change.

The observed decline is more stepwise and abrupt, especially around the 2007 and 2012 minima.

CMIP6 continues to show a smoother, more linear descent that fails to capture the inflection points of real-world losses.

Even today, the ensemble mean lags behind, while the observed data have flattened somewhat in recent years — a nuance CMIP6 doesn’t reflect either.

This persistent mismatch raises two key concerns:

Is CMIP6 tuned only to capture long-term averages, rather than decadal-scale dynamics, tipping points, or variability?

If so, how can it be considered useful for real-world policy, where near-term changes (like the prospect of ice-free Septembers before 2040) carry enormous implications?

We are not talking about modest noise here. The divergence is systematic and enduring, and yet rarely addressed in detail. Why? Until that gap is explained or reconciled, confidence in CMIP’s ASI projections seems… aspirational at best.

For instance, Stroeve et al. (2012) highlighted that earlier models underestimated the rate of sea ice loss, a trend that continues with CMIP6. Furthermore, Notz and SIMIP Community (2020) found that while CMIP6 models offer improved sensitivity estimates, they still fail to simulate a plausible evolution of sea-ice area alongside global mean surface temperature.


This persistent mismatch raises questions about the models’ ability to accurately represent key processes affecting sea ice dynamics. Until these discrepancies are addressed, reliance on CMIP6 projections for policy-making and climate forecasting remains problematic.

Source Refs

https://eesm.science.energy.gov/publications/arctic-sea-ice-cmip6

https://repository.library.noaa.gov/view/noaa/29934

https://www.osti.gov/pages/biblio/1618526

https://epic.awi.de/id/eprint/51815/

https://link.springer.com/article/10.1007/s00376-022-1460-4

https://scispace.com/papers/arctic-sea-ice-in-cmip6-1t3idhfbxw

https://www.sciencedirect.com/science/article/pii/S1674927824000844

https://pure.mpg.de/rest/items/item_3221097_3/component/file_3231260/content

An assessment of the CMIP6 performance in simulating Arctic sea ice volume flux via Fram Strait

Evaluating the simulation capabilities of the most recent CMIP6 models in sea ice volume flux provides references for model applications and improvements. Meanwhile, reliable long-term simulation results of the ice volume flux contribute to a deeper understanding of the sea ice response to global climate change.

And note Fig.1

https://www.sciencedirect.com/science/article/pii/S1674927824000844

“Until these discrepancies are addressed, reliance on CMIP6 projections for policy-making and climate forecasting remains problematic.”

A question. What sort of policy-making might result from better sea ice model projections and improvements…a deeper understanding of global climate change?

Reply to Ken Towe

Great question — and you’re right to sense there’s a deeper conversation that should be occurring here. Here’s a response off the top of my head to:

> “What sort of policy-making might result from better sea ice model projections and improvements… a deeper understanding of global climate change?”

—

Improved sea ice projections wouldn’t just refine academic understanding — they could significantly influence a range of policy decisions, especially those tied to regional risk management, climate adaptation, and strategic planning. Specifically:

1. **Shipping and Arctic Navigation**

Reliable sea ice forecasts are crucial for commercial and military navigation through the Arctic (e.g., the Northern Sea Route or Northwest Passage). Better projections inform infrastructure investments, safety protocols, and insurance risk calculations for Arctic shipping.

2. **National Security and Geopolitics**

Nations with Arctic interests (Russia, Canada, the U.S., China, etc.) rely on long-term projections to shape defense postures and territorial claims. Sea ice decline forecasts influence everything from military base placement to submarine patrol routes and sovereignty disputes.

3. **Indigenous and Coastal Communities**

Accurate modeling affects community planning for northern populations that depend on sea ice for transportation, hunting, and cultural survival. It also influences relocation policies and climate resilience funding.

4. **Climate Feedbacks and Carbon Budgets**

Arctic sea ice loss affects albedo (reflectivity), regional amplification, and atmospheric circulation patterns. Getting those dynamics right is key to projecting downstream climate impacts elsewhere — which in turn affects global carbon budget calculations, timelines for net-zero targets, and urgency behind emission cuts.

5. **Biodiversity and Ecosystem Protections**

Sea ice governs key marine ecosystems. Policy on fisheries management, marine protected areas, and species conservation — from polar bears to krill — depends on reliable predictions of habitat change.

6. **Credibility and Communication of Climate Risk**

When models repeatedly under- or over-predict key features like sea ice, it erodes public trust in climate science. More accurate, verifiable sea ice projections help rebuild that trust and improve how risks are communicated to policymakers and the public.

Over and above all that, ASI changes influence global temperatures and our ability to provide short- to medium-term global temperature projections. Such knowledge should be feeding into everything from the IPCC’s work to understanding why the Paris Agreement and actions by the UNFCCC and COP system are deeply broken. The CMIP6 projections of ASI and Antarctic sea ice remain so wrong that they are not fit for any purpose.

So yes, better projections wouldn’t just be about “understanding” climate change. They would refine *practical, real-world decision-making* in domains that touch energy policy, defense, trade, indigenous rights and environmental protection, along with global climate diplomacy and potentially practical action plans to address global warming itself.

Would you like to follow with your own points? I’d love to compare.

William said “Great question — and you’re right to sense there’s a deeper conversation that should be occurring here. Here’s a response off the top of my head to:

> “What sort of policy-making might result from better sea ice model projections and improvements… a deeper understanding of global climate change?”

William’s response, which he claims is off the top of his head, looks like it was written by AI. I just asked Google Gemini the same question to test this, and got a remarkably similar response.

Does this mean that everything coming out of AI is wrong? Except when nigelj uses it. Maybe it means I was right and scientifically well grounded in my answers about policy-making. And maybe it means I have the finely tuned knowledge level of a Google Gemini AI on this particular topic, because the answers I gave above were essentially off the top of my head. I certainly did not use Google Gemini, as nigelj did, to create that comment; it was my very own thinking and knowledge that produced it.

On climate policy making:

Gemini agrees that if models used for Arctic sea ice projection — like those in CMIP6 — can’t capture abrupt events or exhibit extreme divergence across scenarios (e.g., from 5% to 100% ice loss by 2025), then their use in real-world planning is deeply compromised.

William’s comment shows how RealClimate fails to connect model claims to practical policy implications — and how communication gaps undercut informed decision-making across domains like shipping, national security, Indigenous livelihoods, biodiversity, and tipping points.

Gemini notes that William’s push for fitness-for-purpose — models that match the demands of real-world timing, scale, and risk sensitivity — is scientifically grounded and ethically urgent.

I note others on this forum have alternative opinions.

William: “Does this mean that everything coming out of AI is wrong?”

It’s like DoGE firing all the air-traffic controllers and replacing them with an AI system known to malfunction and hallucinate, and then defending their action with: “Does this mean that everything coming out of AI is wrong?” You see the problem with this logic, right?

William, you miss the point, which is: stop pretending that information written by AI, copied and pasted, is just “off the top of your head”, as in your own piece of writing.

About “William, you miss the point, which is: stop pretending that information written by AI, copied and pasted, is just ‘off the top of your head’, as in your own piece of writing.”

nigelj has inverted the issue. The comment in question was my own original work—written and edited like any serious contribution. See William says 1 Jun 2025 at 6:29 PM above. Accusing it of being copied from AI is baseless, and more importantly, avoids the real topic.

The questions are:

1) How reliable are CMIP6 sea-ice projections?

2) What policy risks follow from inaccurate outputs?

3) What specific improvements might make them more useful?

Those questions were raised. Six policy examples were given. Rather than engaging them, the focus shifted to whether it was plausible someone could generate that answer without assistance—followed by accusations and deflection. That’s not scientific discussion. That’s misdirection.

And it proves the point: when the content is inconvenient, the tactic becomes to question the authorship, not the substance. If the models matter, then so do the critiques.

William: “This persistent mismatch raises questions about the models’ ability to accurately represent key processes affecting sea ice dynamics. Until these discrepancies are addressed, reliance on CMIP6 projections for policy-making and climate forecasting remains problematic.”

I can’t quite decide if this argument is an example of an ignoratio elenchi (irrelevant conclusion, missing the point) fallacy, a straw man fallacy, or a logic-chopping fallacy (nit-picking, trivial objections). In any case, the conclusion is unsupported by the given evidence.

Reply to Steven Emmerson

In your opinion — which, unlike William’s comments, is not supported by any actual evidence.

And that is assuming you even read (let alone understood) the references provided and the specific points William was drawing from. (I don’t.) Easier, I suppose, to wave it all away with a vague, hubristic sniff about fallacies, while offering no substantive counterpoints of your own.

Prevarication isn’t rebuttal.

So… no counter-evidence, no engagement with the citations, no analysis of the actual claim — just a vague gesture at fallacies and a dismissive shrug. It doesn’t work — especially when the post you’re brushing off is better referenced and more rigorous than your own unsubstantiated opinions.

Gavin’s rhetorical fog was bad enough. Now it’s pea soup. Can’t see a thing. A forum being true to form.

PP: Gavin’s rhetorical fog was bad enough. Now it’s pea soup. Can’t see a thing. A forum being true to form.

BPL: You’re right, this is a horrible forum. If I were you, I’d leave and never come back. That’ll show ’em!

Pedro Prieto, William presented no credible evidence that discrepancies in current projections of Arctic sea ice necessarily imply that CMIP6 projections are problematic for policy-making in general. For deciding when to start shipping through the Arctic, perhaps, but not for addressing global warming.

Steven Emmerson says

1 Jun 2025 at 4:29 PM

William: “This persistent mismatch raises questions about the models’ ability to accurately represent key processes affecting sea ice dynamics. Until these discrepancies are addressed, reliance on CMIP6 projections for policy-making and climate forecasting remains problematic.”

SE: I can’t quite decide if this argument is an example of an ignoratio elenchi (irrelevant conclusion, missing the point) fallacy, a straw man fallacy, or a logic-chopping fallacy (nit-picking, trivial objections). In any case, the conclusion is unsupported by the given evidence.

Reply to Steven Emmerson

William: Dear Steven, please let me know if you would like a link to Google Gemini’s 13-page report:

An Assessment of Predicted Arctic Sea Ice Trends and the Discourse on Climate Model Evolution

I believe it may help you make a better-informed decision. I’m happy to provide a specific assessment of “Follow-up: Persistent Divergence Between Observations and CMIP6 Projections” if you’d prefer. Please let me know.

William, Hitchens’s razor states “What can be asserted without evidence can also be dismissed without evidence”.

You’ve provided no credible evidence for your assertion that discrepancies in modeling Arctic sea ice necessarily imply that CMIP6 projections are problematic for policy-making in general.

Google Gemini output isn’t peer-reviewed.

Please post references to specific instances in the peer-reviewed scientific literature that support your assertion (quotations would be appreciated); otherwise, your assertion will be dismissed.

Steven Emmerson says

5 Jun 2025 at 2:14 PM

1) Hitchens’s razor states “What can be asserted without evidence can also be dismissed without evidence”.

Steven et al., that razor cuts both ways.

2) You’ve provided no credible evidence for your assertion that discrepancies in modeling Arctic sea ice necessarily imply that CMIP6 projections are problematic for policy-making in general.

Steven, you are correct there. Yet barely anyone provides credible evidence for their assertions or opinions here. Why single me out? I must already have a target on my back, despite never having engaged with you before.

I have posited my opinions on the matter, yes, opinions powerfully based on the science. You seem nonplussed, or content. No problem, but that does not mean I’m wrong.

3) Google Gemini output isn’t peer-reviewed. Please post references to specific instances in the peer-reviewed scientific literature that support your assertion (quotations would be appreciated); otherwise, your assertion will be dismissed.

That is correct, Steven. Nor are any of the dismissals in comments directed at me peer-reviewed either. However, the Gemini output, and others like it (see Paul Pukite), do provide powerful references to specific instances in the peer-reviewed scientific literature that support my many assertions and opinions about this latest article. Some powerfully confirm that my understanding of what net zero means is the correct one.

Steven, I am more than happy to provide all those citations and quotations in due course, if I can get them past the ‘censor’. I can only do my best, Steven. It is a work in progress.

The question remains, even if I can get the material posted here, what will happen? Will it still be:

Dismissed without addressing the substance.

Generate more snark or insult in lieu of engagement.

Be ignored or slow-walked in mod approvals.

Have “tone” or “style” used as an excuse to avoid the content.

This is the pattern, not because I’m wrong, or proven wrong, but because what I share is inconvenient. What I have laid out is serious, informed, and deeply grounded in the scientific literature. It still always generates significant pushback here, with references to peer-reviewed scientific literature or not.

I have contributed much to the discourse here in a very short time. The pattern is I’m being continually baited with false accusations, with snark, ad hominem, veiled superiority. I cannot control any of that.

You may well say “quotations would be appreciated”, but I have no faith you or anyone else will look at them. I will provide them anyway. People will make their own choices, of course, which will reflect upon them, not me. Let’s see what happens then.

Are we still on net-zero? William, AIUI you’re conflating different things.

If I am piercing your fog of words, you think that CO2 concentrations will keep increasing until we hit net zero. Presumably that is because you think that net zero means that human emissions would equal removals from the atmosphere. But as Gavin explained, and IIRC, you yourself quoted:

So, net zero is NOT when total emissions balance total removals–which is tautologically the point at which concentrations begin to fall. It’s about the specifically anthropogenic components on each side of the ledger.

Actually, net zero has, strictly speaking, no logically necessary connection to rising or falling concentrations whatever. Let’s break it down.

First, let E denote total emissions, and R denote withdrawals. We’ll call the change in concentration delta(C), and ignore the mathematico-physical complications we’d need for a quantitatively accurate computation. Then, trivially if schematically:

E - R = delta(C)

But as stated, there are both anthropogenic and natural fluxes. I’m going to simplify by noting that, currently at least, natural sinks exceed natural sources, so we can treat the natural net as a single quantity falling under the ‘removals’ heading. Then:

E(anthro) - (R(anthro) + R(natural)) = delta(C)

Right now, E(anthro) exceeds total R, of course, so delta(C) must be positive.

If we cut emissions–pray that that day comes soon!–there will come a point when removals equal those falling emissions. If emissions cuts continue past that point, then delta(C) turns negative. In practice, it’s likely that sinks will continue to function at some level; Gavin has told us that per modeling, the best estimate is that we would bring delta(C) to zero with an emissions cut of approximately 70%. You have responded, correctly, that we can’t know with a high degree of certainty whether or not sinks will be operating in the same manner as modelers are currently projecting.
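The schematic budget above can be made concrete in a few lines of Python. This is a toy sketch, not a carbon-cycle model: all numbers are hypothetical and normalized (current anthropogenic emissions = 1.0), and the natural removals are held fixed at 0.3 purely so that the break-even cut lands on the ~70% figure quoted above. Real sinks respond to concentration and temperature, which is exactly the caveat raised in this exchange.

```python
def delta_c(e_anthro: float, r_anthro: float, r_natural: float) -> float:
    """Schematic change in concentration: emissions minus total removals."""
    return e_anthro - (r_anthro + r_natural)


def break_even_cut(e_now: float, r_total: float) -> float:
    """Fractional emissions cut at which delta(C) reaches zero,
    assuming (unrealistically) that total removals stay fixed."""
    return 1.0 - r_total / e_now


# Hypothetical, normalized values chosen only to reproduce the ~70% figure.
E_NOW = 1.0      # current anthropogenic emissions
R_NATURAL = 0.3  # net natural removals, held constant (a big simplification)
R_ANTHRO = 0.0   # no deliberate anthropogenic removals in this toy case

for cut in (0.0, 0.5, 0.7, 0.9):
    e = E_NOW * (1.0 - cut)
    print(f"cut {cut:.0%}: delta(C) = {delta_c(e, R_ANTHRO, R_NATURAL):+.2f}")

print(f"break-even cut: {break_even_cut(E_NOW, R_ANTHRO + R_NATURAL):.0%}")
```

Holding the removals constant is the weak point of the sketch: if sinks weaken as emissions fall, the break-even cut moves higher, and if an unrecognized negative feedback strengthens them, it moves lower.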

In fact, it’s conceivable that the natural fluxes could turn positive. We think that’s an extreme possibility, but it’s a contingency that as far as we know is at least possible, even if it isn’t seen as probable in the next decade or so. Then, of course, we could be at net zero, and concentrations would still be rising. I’ll spare us all the mathematical formalism at this point; I think we all get the idea.
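The distinction between net zero and the sign of delta(C) can also be sketched. Here net zero is taken to mean that the anthropogenic sides of the ledger balance (E(anthro) = R(anthro)), while the natural term is kept as a separate signed flux: negative for a net sink, positive for a net source, which is the opposite sign convention to the removals in the equations above. The flux values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Fluxes:
    """Schematic annual fluxes in arbitrary units (hypothetical values)."""
    e_anthro: float   # anthropogenic emissions
    r_anthro: float   # deliberate anthropogenic removals
    f_natural: float  # net natural flux: negative = net sink, positive = net source

    def is_net_zero(self, tol: float = 1e-9) -> bool:
        # Net zero balances only the anthropogenic sides of the ledger.
        return abs(self.e_anthro - self.r_anthro) < tol

    def delta_c(self) -> float:
        # The concentration change also includes the natural flux.
        return self.e_anthro - self.r_anthro + self.f_natural


scenarios = {
    "net zero, sinks still working": Fluxes(0.2, 0.2, -0.1),
    "net zero, natural source (tipping point)": Fluxes(0.2, 0.2, 0.1),
}
for name, s in scenarios.items():
    d = s.delta_c()
    trend = "falling" if d < 0 else "rising" if d > 0 else "flat"
    print(f"{name}: net zero={s.is_net_zero()}, delta(C)={d:+.2f} ({trend})")
```

Both scenarios report net zero, yet concentrations fall in one and rise in the other, because at net zero delta(C) reduces to the natural flux alone.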

This is of course the dreaded “tipping points” scenario: the Amazon converts to savannah and releases a big pulse of CO2, or large quantities of clathrates outgas releasing methane by the teratonne into the water column of the Arctic Ocean, or whatever the case. For a time, at least, there’s nothing we can do to mitigate the rising concentrations. (It doesn’t necessarily go on forever; the Amazon basin is big, but at some point conversion stops. Of course, it may trigger other tipping points; IIRC, there are something like 17 that have been identified.)

Contrariwise, maybe we’ve missed a negative feedback, or underestimated it significantly. In that case, presumably we’d see concentrations start to fall with a smaller cut to emissions than the estimated 70%. That’d qualify as a lucky break, and we obviously shouldn’t proceed on that basis: we need to cut emissions as fast as we can.

So, we don’t think that we need to get to net zero to see concentrations start to fall; we think that we need to cut emissions by about 70% from current levels. (We can’t stop there, though, because as Gavin also pointed out, temperatures won’t stabilize at that point, and feedbacks are driven by those temps, meaning sinks may be impaired while temps are still rising.)

But scenarios in which we could be at net zero and still see concentrations rising are also possible.

I fear I’ve created a bit of a fog of words myself here. But I hope it does demonstrate that, as Gavin said, net zero and rising or falling concentrations of CO2 are indeed “different things.”

Now, can we please move on?

Kevin McKinney says

6 Jun 2025 at 8:41 PM

Are we still on net-zero?

Well, Steven and William weren’t. So it’s only you on this page talking about net zero.

No one else is. Or did you see a big red target on William’s back?

(Due to nesting limitations, I’m having to reply to my own post.)

Quotes are from William.

Not really. Hitchens’s razor is asymmetric because it puts the onus of providing evidence on the person making the assertion and not the other way around.

You’re “singled out” because you made an assertion without providing any evidence. Application of Hitchens’s razor is common practice in science (as anyone who’s ever defended a thesis or dissertation can attest).

It means that your opinion can be dismissed.

Irrelevant, because it doesn’t address the issue, which is your assertion that discrepancies in modeling Arctic sea ice necessarily imply that CMIP6 projections are problematic for policy-making in general.

Irrelevant for the same reason.

It’s a shame how the small error with the URL derailed Pedro’s comment, as the points he was making and referencing were good ones, including this: Scrutiny is not denial. Critiquing poor model performance is scientific due diligence, not heresy. And holding public scientists to higher standards of clarity, transparency, and engagement only strengthens public trust; it doesn’t erode it.

I’d suggest that doing the opposite does erode it. To that end, this comment also relates to what John N-G (1 Jun 2025 at 1:24 PM) had to say below to Pedro.

Beyond the key findings from the paper (Notz et al., 2020) mentioned below, the post just by me already pointed to multiple other CMIP6 evaluation sources. I think there is no hard ‘consensus’ on the efficacy of everything to do with CMIP6 and Arctic sea ice (ASI). Even Dirk Notz’s own conclusions and highlights vary across his analyses.

For example, one of the links I posted above already pointed to this Geophysical Research Letters paper:

Arctic Sea Ice in CMIP6

2020

By Notz, Dirk; SIMIP Community

Source: Geophysical Research Letters, 47, e2019GL086749.

https://repository.library.noaa.gov/view/noaa/29934

Among the many things in it worth being aware of is the Conclusion:

• CMIP6 model performance in simulating Arctic sea ice is similar to CMIP3 and CMIP5 model performance in many aspects.

-This includes models simulating a wide spread of mean sea-ice area and volume in March and September;

-the models’ general underestimation of the sensitivity of September sea-ice area to a given amount of global warming;

-and most models’ failure to simulate at the same time a plausible evolution of sea-ice area and of GMST.

and

• CMIP6 model performance differs from CMIP3 and CMIP5 in some aspects.

-It is unclear to what degree these improvements are caused by a change in the forcing versus improvement of model physics.

Improved? Yes. Definitely fit for purpose, with performance above and beyond the CMIP3/5 results? Not so much.

And it’s worth noting this line from the Abstract of another of the links I posted above:

“Still, most CMIP6 models fail to simulate at the same time a plausible evolution of sea‐ice area and of global mean surface temperature.”

https://epic.awi.de/id/eprint/51815/

Oh sorry, it’s from the very same Notz article. Is it not ironic how so many things are sitting there in plain sight? I learnt this from all the papers I used to read decades past. Sometimes your eyes can just glaze over and you can so easily miss things.

I wonder what new technical tools we could develop that would more reliably capture important points across domains and sources, from the millions of science articles and peer-reviewed scientific research papers?

Maybe one day they will think of something that is far superior to internet google searches.

William: “It’s a shame how the small error with the url derailed Pedro’s comment as [his] points were good ones”

The problem was not your wrong URL, but the claims you declare “good”. Details in: 3 Jun 2025 at 1:26 PM, and in the follow-up immediately below (Piotr 5 Jun 2025 at 9:05 PM).

Thanks for an article on my favourite topic Gavin!

I heartily recommend an additional reference, which perhaps goes some way to explaining the significant excursions below the September trend in 2007 and 2012?

C. M. Bitz and G. H. Roe, “A Mechanism for the High Rate of Sea Ice Thinning in the Arctic Ocean”, Journal of Climate, 2004

https://www.atmos.washington.edu/~bitz/Bitz_and_Roe_2004.pdf

“A general theory is developed to describe the thinning of sea ice subjected to climate perturbations, and it is found that the leading component of the thickness dependence of the thinning is due to the basic thermodynamics of sea ice. When perturbed, sea ice returns to its equilibrium thickness by adjusting its growth rate. The growth–thickness relationship is stabilizing and hence can be reckoned as a negative feedback. The feedback is stronger for thinner ice, which is known to adjust more quickly to perturbations than thicker ice. In addition, thinner ice need not thin much to increase its growth rate a great deal, thereby establishing a new equilibrium with relatively little change in thickness. In contrast, thicker ice must thin much more. An analysis of a series of models, with physics ranging from very simple to highly complex, indicates that this growth–thickness feedback is the key to explaining the models’ relatively high rate of thinning in the central Arctic compared to thinner ice in the subpolar seas.”


Perhaps it also helps explain the alleged “pause” in Arctic sea ice decline, so popular in cryodenialistic echo chambers at the moment?

Link: https://agupubs.onlinelibrary.wiley.com/doi/pdf/10.1029/2020GL087965

Key findings from the paper (Notz et al., 2020):

No significant improvement in Arctic sea ice projections between CMIP5 and CMIP6.

“Models that participate in CMIP6 do not show a clear improvement in simulating observed sea‐ice trends over recent decades compared to models from earlier phases of CMIP.”

Still too much Arctic sea ice in CMIP6 simulations for recent decades.

The models systematically overestimate September sea ice extent compared to observations. Same problem as before.

Antarctic sea ice is still mishandled:

The observed increase from 1979 to ~2015 is not captured in the models — which instead show a decrease. That’s a complete miss.

So… What Is Going On? What is the purpose of this article here now?

It’s a little suspicious:

Damage control / reputation management: CMIP3–5 have been (rightly) criticized for years — particularly in terms of Arctic sea ice projections. CMIP6 was supposed to be better. It’s not — at least not clearly — so some scientists may now be trying to massage that narrative.

Preemptive narrative setting: With CMIP7 now being discussed, this may be a way to gently sweep past failures under the rug and frame them as “normal model evolution” — a soft reset, rather than an admission of serious structural flaws or poor tuning.

Defensive posture disguised as openness: Gavin’s tone seems reflective and modest — but the actual content is evasive, hand-wavy, and deflects scrutiny. Mentioning Peter Wadhams as a foil is a distraction tactic, not a serious engagement with past model failure.

Audience management: RC serves both policymakers and laypeople, many of whom are not model specialists. A vaguely reassuring tone — “things are improving” — reduces anxiety and protects institutional credibility, even if it glosses over fundamental problems.

Combine that with vague language like:

“…discrepancies will resolve themselves… Or not ;-)”

and

“…models are always wrong, but still useful…”

— and you’re left with a kind of rhetorical fog machine: soften the failures, spotlight only the ensemble mean, and dodge the hard accounting.

The framing of this post — and the timing — doesn’t quite add up. Compared to dozens of other pressing climate concerns, why this topic, and why now? Five years on from CMIP6, its performance in simulating Arctic and Antarctic sea ice (extent, concentration, and trends) remains poor. It has shown little demonstrable skill in predicting critical inflection points like blue ocean events — and the record hasn’t meaningfully improved since CMIP5.

That the 2020 paper linked here flatly contradicts any claim of serious CMIP6 improvement is telling. The issues have all been covered before in dozens of similar papers.

One of the quieter scandals in climate science communication — particularly around modeling — is how little post-hoc accountability or rigorous performance review takes place. For all the resources poured into CMIP modeling, almost no one in the mainstream is willing to step back and plainly ask:

“How well did these projections actually match reality over the past 10 to 20 years?”

Even serious published critiques (like Notz et al. 2020) are rarely engaged with in public-facing commentary. The pressure to preserve institutional credibility — and to avoid feeding denialist talking points — often leads to a kind of professional omertà: silence, spin, or deflection.

But here’s the truth:

Scrutiny is not denial.

Critiquing poor model performance is scientific due diligence, not heresy.

And holding public scientists to higher standards of clarity, transparency, and engagement only strengthens public trust — it doesn’t erode it.

One day it might begin to happen. Until then, there will be no looking under the climate models’ hood.

Multi-troll: “Key findings from the paper (Notz et al., 2020): No significant improvement in Arctic sea ice projections between CMIP5 and CMIP6.”

And… what statistical criteria have you, or your source, used to determine the “insignificance” of the improvement? Because if you/your source didn’t, then you are using this word in its unscientific, colloquial meaning: how it LOOKS to you. Which would make it a SUBJECTIVE (i.e. untestable) OPINION. Something along the lines of Chico Marx chiding a woman surprised to see him in her bedroom:

– But I saw you [leaving the bedroom] with my own eyes!

– Well, who ya gonna believe, me or your own eyes [Fig. 2 vs Fig. 3]?

Multi-troll: “But here’s the truth: Scrutiny is not denial. Critiquing poor model performance is scientific due diligence, not heresy.”

But using the imperfect model representation of one of the most difficult-to-replicate outcomes of AGW (change in the sea-ice cover area) to question the credibility of ALL AGW modelling, in order to DISMISS the sense of reductions in GHG emissions using existing technologies and implementable mechanisms (price on GHG emissions), is a MAINSTAY of DENIALISM: deniers focus on some not necessarily crucial aspect to throw the entire AGW modelling, and the need to reduce GHGs, out with the bathwater.

But it has already been discussed on RC to death, e.g.:

==== UV, May 2025 =============

– William: “ I’m not a denier”

– me: “We don’t have to rely on your self-serving declarations; your posts would do: you have just tried to discredit the only feasible way to mitigate AGW (by calling the reductions in GHGs NEITHER “feasible [nor] wise”, and justified it by saying that they merely “treat symptoms, not causes”) in favour of an alternative [rapid deindustrialization and reduction of population by many billions] THAT YOU KNOW cannot be realistically implemented on the necessary time-scale [next 1-2 decades].

Which is the very definition of an “anything-but-GHGs denier”.

=================================

“to DISMISS the sense of reductions in GHG emissions using existing technologies … and to throw the entire AGW modelling, and the need to reduce GHGs, [out] with the bathwater.”

Surely, you are aware of the fact that rapid reductions in CO2 emissions will take none of the CO2 already added (420 ppm) out of the atmosphere to lower global temperatures. It does leave carbon in the ground. But that makes it more expensive and difficult for transportation to continue the energy transition to renewables and EVs.

KT: rapid reductions in CO2 emissions will take none of the CO2 already added (420 ppm) out of the atmosphere to lower global temperatures. It does leave carbon in the ground. But that makes it more expensive and difficult for transportation to continue the energy transition to renewables and EVs.

BPL: Transportation can be electrified or work off alternate fuels. Your “we need more fossil fuels to transition to a world without fossil fuels” doesn’t make any sense.

Mr. Levenson… Try doing anything of consequence without using vehicles that run on fossil fuels. Feed eight billion people, for example. Install solar and wind farm projects.

KT: Try doing anything of consequence without using vehicles that run on fossil fuels. Feed eight billion people, for example. Install solar and wind farm projects.

BPL: Try doing transportation for millions of people without using horse-drawn buggies, surreys, and stage coaches.

With respect to EVs, my wife recently wanted me to form an opinion about the complete life cycle analysis of EVs and the infrastructure required to support them. Because this is recent, I haven’t yet had the time to properly digest the material and assemble my thoughts.

But the following are a few of the sources I’ve encountered so far and felt able to present here:

“Electric vehicles from life cycle and circular economy perspectives”, 2018

https://www.eea.europa.eu/en/analysis/publications/electric-vehicles-from-life-cycle

“Analysis of the Life Cycle and Circular Economy Strategies for Batteries Adopted by the Main Electric Vehicle Manufacturers”, 2025

https://www.mdpi.com/2071-1050/17/8/3428

“The Future of Copper: Will the looming supply gap short-circuit the energy transition?”, 2022

https://cdn.ihsmarkit.com/www/pdf/0722/The-Future-of-Copper_Full-Report_14July2022.pdf

and a related summary article to the above report on copper:

https://www.engineering.com/copper-the-critical-material-for-transportation-electrification/

I’m only just starting to assemble materials, and so far I’ve had only a little time to skim the above. But I think they may relate to this question about electrification and EVs. (I’m not addressing ‘alternate fuels.’) Perhaps those are worth a moment, though.

I have had (because I’m an engineer who worked on designing aspects of substation transformers in the past) frequent conversations with my local power company, Portland General Electric, at an “internal staff” level of conversation about the substantial alterations and difficulties involved in the distribution of electrical power for EVs and hybrid vehicles. These discussions included the “final mile” part of it, as well.

Some people are buying class II charging systems. A Ford F-150 Lightning requires a class II charger with at least an 80-amp service. Class I charging systems are based on standard mains supply voltages, but they are for plug-in hybrids, by and large, and would take a very long time to charge something like an F-150. My Prius hybrid uses a Class I charger and takes about 6 hours to charge, and it only has about 25 miles of range in its small battery. EVs have much larger battery systems and will generally require Class II service at home. (Class III is an EV service station and wouldn’t be for home use.)

Class II chargers generally call for on the order of 100-200 amps of service. For comparison, my entire household is a 200-amp service. If I were to purchase that Ford F-150 Lightning, I would have to work with PGE to upgrade my service to 320 amps or to go to a 7 kV drop service in order to support the Class II charger that comes with it. At any scale, this presents significant challenges to PGE (or any power company), despite the fact that they may then be able to use (with cooperation) these EVs as a storage resource for load balancing.

(I live in a forest canopy system and at the 45th parallel. So solar is not in the cards.)

Don’t get me wrong. PGE would like lots of people to get EVs. Peak load is a significant problem and they wouldn’t even have to buy all that battery capacity. What’s not to love? But the infrastructure changes remain quite significant and those changes will take a great deal of time and money. I know firsthand some of these difficulties because I design aspects of the transformers used in substations.

From my personal discussions with PGE and from the above articles, I am gathering that, at the scale and time-line required, this is a ‘hard problem.’ I’m frankly not at all confident at this moment in time. Perhaps that will change. But that’s where I’m at, now.

My only unique contribution in science was almost a decade back, on ADEX theory relating to error-correction theory and sphere packing. I’m otherwise just an applied mathematician and practicing engineer. I enjoyed my time as a team member of the group that produced the first commercially practical rewritable CD-ROM. Because I have some knowledge of condensed matter physics, singlet/triplet state transitions, and the impact of phonons on phosphor crystal lattices, I helped design an instrument measuring reentry temperatures for the US Space Shuttle. Otherwise, I designed instrumentation for commercial and scientific needs. This just means I’ve been exposed to a few ideas by some truly bright folks whom I’ve had the privilege of being around.

I don’t have a working crystal ball. But my gut tells me that without a global political change I don’t expect to see, I just don’t see it happening quickly enough. (Or at all. Not at scale, anyway, and not in time.)

That’s where I’m at, right now.

So I do not share your confidence in our ability to field electrification and EVs at the scale and rate needed to have a significant impact on CO2 on the relatively shorter order of time we need. And to quote one of the documents I mentioned above:

“In general, GHG emissions associated with the raw materials and production stage of BEVs are 1.3-2 times higher than for ICEVs (Ellingsen et al., 2016; Kim et al., 2016)…”

In addition, there’s the mix of electrical energy sources used to charge vehicles (that will vary over area and time, obviously), their life time, usage patterns, and recycling at end of life, among many other factors. But I’m sure it is not a slam-dunk.
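One way to see how production emissions, grid mix, and usage interact is a simple break-even distance: the extra production emissions of a BEV divided by its per-kilometre saving in use. In the sketch below only the 1.3-2x production multiplier comes from the quoted report; the ICEV production figure, fuel and electricity intensities, and grid carbon intensities are hypothetical placeholders, and the function name is illustrative.

```python
from typing import Optional


def break_even_km(
    prod_ice_t: float,      # ICEV production emissions, t CO2e (hypothetical)
    bev_factor: float,      # BEV/ICEV production ratio (quoted range 1.3-2)
    ice_g_per_km: float,    # ICEV use-phase emissions, g CO2e/km (hypothetical)
    bev_kwh_per_km: float,  # BEV consumption, kWh/km (hypothetical)
    grid_g_per_kwh: float,  # grid carbon intensity, g CO2e/kWh (hypothetical)
) -> Optional[float]:
    """Distance at which a BEV's extra production emissions are paid back.

    Returns None when, on this grid, the BEV never catches up in use."""
    extra_prod_g = prod_ice_t * (bev_factor - 1.0) * 1e6  # tonnes -> grams
    saving_g_per_km = ice_g_per_km - bev_kwh_per_km * grid_g_per_kwh
    if saving_g_per_km <= 0:
        return None
    return extra_prod_g / saving_g_per_km


# Placeholder inputs only; substitute figures from an actual life cycle assessment.
for factor in (1.3, 2.0):
    for grid in (100.0, 500.0, 1200.0):
        km = break_even_km(7.0, factor, 180.0, 0.18, grid)
        result = "never" if km is None else f"{km:,.0f} km"
        print(f"production x{factor}, grid {grid:.0f} g/kWh: {result}")
```

Comparing the break-even distance with expected vehicle lifetime mileage, and letting grid intensity change over that lifetime, are the obvious next refinements; the sketch only makes the dependence on these factors explicit.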

Jon Kirwan,

I may reference your comment on the UV thread with more detail, but you really need to do some basic research on the F-150 charging system and basic house wiring. Your numbers are incorrect. The vehicle comes with a level II charger that only requires a 50A circuit (like an electric stove).
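Much of the disagreement over circuit sizes is arithmetic: power is voltage times current. The sketch below assumes a nominal 240 V US residential supply and the common practice of limiting a continuous load such as EV charging to 80% of the breaker rating; the 60 kWh charge amount and 90% wall-to-battery efficiency are hypothetical. It does not model any particular vehicle or charger.

```python
VOLTS = 240.0            # assumed nominal US split-phase residential voltage
CONTINUOUS_FACTOR = 0.8  # assumed: continuous load limited to 80% of breaker rating


def max_charge_current(breaker_amps: float) -> float:
    """Largest continuous charging current a circuit of this rating allows."""
    return breaker_amps * CONTINUOUS_FACTOR


def charge_power_kw(current_amps: float, volts: float = VOLTS) -> float:
    """Electrical power drawn, P = V * I, in kW."""
    return current_amps * volts / 1000.0


def hours_to_add(kwh: float, power_kw: float, efficiency: float = 0.9) -> float:
    """Hours to put `kwh` into the battery; efficiency is a hypothetical loss factor."""
    return kwh / (power_kw * efficiency)


for breaker in (50, 100):
    amps = max_charge_current(breaker)
    kw = charge_power_kw(amps)
    print(f"{breaker} A circuit: {amps:.0f} A continuous, {kw:.1f} kW, "
          f"{hours_to_add(60.0, kw):.1f} h to add 60 kWh")

# Nominal ceiling of a whole-house 200 A service, for comparison.
print(f"200 A service at {VOLTS:.0f} V: {charge_power_kw(200):.0f} kW nominal")
```

On these assumptions a 50 A circuit supports about 9.6 kW of charging, a fraction of the nominal 48 kW of a 200 A service; whether a given panel has that headroom depends on the household's other loads.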

Ken Towe: “Surely, you are aware of the fact that rapid reductions in CO2 emissions will take none of the CO2 already added (420 ppm) out of the atmosphere…”

Surely, you are aware of the fact that I have taken apart the same argument when you were making it two weeks before. Let me refresh your memory:

=====

Ken Towe 17 May: “GHG reductions, reducing emissions, will take none of the CO2 already added out of the atmosphere”

Piotr 18 May: “First – if large enough – they WILL result in taking down CO2 already in the atmosphere, as natural uptake will no longer be overpowered by the new human emissions: currently only half of the emitted CO2 stays in the air; the rest is absorbed by the natural sinks.

Second – yours is a typical denier/doomer all-or-nothing argument – if we can’t reduce the current levels of CO2, then let’s do nothing and keep increasing atm. CO2. The obvious fallacy here: the world at 425 ppm won’t be as hellish as the world at 850 ppm.

So you are like a man who stabs his neighbour with a knife, justifies his refusal to stem the bleeding by saying that it would be pointless, since “it will not bring back any blood you already lost”, and therefore plans to continue stabbing the victim until he is dead.

=== end of quote =====

To which, other than crying about how the other posters are mean to you (you characterized the above as “personal insults” ;-)), you had NO answer to the above FALSIFIABLE arguments.

Nor had your defender, as in his powerful intellect he understood the above as me… having to admit that his Ken was right:

Multi-Troll (“Thesallonia”): “ Piotr acknowledged that CO₂ will decline slowly once emissions stop — which aligns with what Ken said.”

So, unable to defend your claim in the original thread, you repeat it, hoping for a different outcome? You know what the definition of that is? ;-)

Reply to readers

Catastrophic climate change impacts are always local, not global.

a reminder from https://climateandeconomy.com

Bear in mind that nothing the above cheap troll does has any effect on anything. He is just another self-infatuated, bloviating bullshit artist who loves to hear himself talk and has done nothing but praise himself and insult everyone else since the day he arrived at RC. He is a boor and a bore, and his comments are worthless and empty.

Hat tip to secular animist for the above content he posted. Imitation is the most sincere form of praise.

Pedro Prieto’s depiction of Piotr is wildly inaccurate, and is just a classic example of psychological projection.

William reply about Piotr’s comment:

So now he’s quoting me from another thread while I was replying to a different person on a different topic entirely, ignoring the whole of what Pedro Pietro wrote here. From that he is constructing a fantasy mashup of motivations, identities, and imagined threats to climate science. Piotr left the realm of reasoned argument and crossed into performance art. Even worse.

Let me make it simple for everyone else, while backing up Pedro’s rational framing and genuine concerns:

“Critiquing poor model performance is scientific due diligence, not heresy.”

Everyone has a basic human right to question what’s produced. That does not equate to denying all climate modeling as is being falsely alleged here against Pedro — and, I suspect, myself as well. It’s honestly hard to follow the total lack of coherence and all-caps intolerance Piotr brings to the table. Nor does that comment, made in good faith, justify the paranoid screed he launched into.

If he can’t tell the difference between scientific scrutiny and denialism, maybe he should stop imagining he’s the arbiter of either.

Finally, Piotr didn’t engage with any of Pedro’s conclusions or actual opinions. We all have opinions — even Piotr (God help us) — but all he’s staged here is yet another slapstick routine from the Marx Brothers movie playing in his head. He has no arguments. No logic. It’s just projection, all the time. That’s his ongoing contribution to this forum: constant trolling. It’s better ignored — or better yet, binned.

William and his multiple sock puppets (pieto principle, ned kelly, dharma, and others) have on many occasions dismissed climate models as useless. Check the archives for yourselves. He doesn’t say some climate models or a particular climate model. It’s just: climate models are useless, failed, hopelessly inaccurate. When confronted with this, he changes his name and pretends he never said it.

W: He [Piotr] has no arguments. No logic. It’s just projection, all the time.

BPL: I find he always has an argument. There’s one further up in this very thread, where he is clearly laying out a logical argument about natural uptake of carbon dioxide. If you’re going to accuse someone of not doing something, it’s kind of dumb to make the accusation in the same thread where he’s doing that very thing.

William just throws mud, hoping some of it will stick.

Pedro –

The link you provided is to Shu et al. (2020), which does not include your quoted key finding but instead says, “The observed Arctic September SIE declining trend (−0.82 ± 0.18 million km² per decade) between 1979 and 2014 is slightly underestimated in CMIP6 models (−0.70 ± 0.06 million km² per decade),” which sounds pretty good to me.
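On the question of statistical criteria raised above, a quick consistency check on the quoted Shu et al. (2020) numbers can be sketched. It assumes the ± values are independent 1-sigma uncertainties, which the quote does not state, and it compares only the 1979-2014 September trend; it is not a formal test of improvement between CMIP generations.

```python
import math

# September SIE trends, 1979-2014, million km^2 per decade, as quoted.
# Treating "±" as independent 1-sigma uncertainties is an assumption.
obs_trend, obs_unc = -0.82, 0.18
cmip6_trend, cmip6_unc = -0.70, 0.06

difference = cmip6_trend - obs_trend
combined_unc = math.hypot(obs_unc, cmip6_unc)  # add uncertainties in quadrature
z = difference / combined_unc

print(f"CMIP6 minus observed: {difference:+.2f} million km^2/decade")
print(f"combined uncertainty: {combined_unc:.2f}")
print(f"difference / combined uncertainty: {z:.2f}")
print("CMIP6 mean inside observed range:",
      obs_trend - obs_unc <= cmip6_trend <= obs_trend + obs_unc)
```

With these inputs the CMIP6 mean trend falls inside the observed range and the difference is well under one combined standard uncertainty, which is consistent with "slightly underestimated".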

The most plausible Notz et al. (2020), titled “Arctic Sea Ice in CMIP6” (https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2019GL086749) doesn’t include your quoted key finding either but instead says, “In particular, the latest generation of models performs better than models from previous generations at simulating the sea-ice loss for a given amount of CO2 emissions and for a given amount of global warming.”

A shocker… Or not.

Thanks, Dr. N-G.


Apologies, that seems to have been a sticky copy-paste URL. Your URL is correct: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2019GL086749 or

https://repository.library.noaa.gov/view/noaa/29934 or

https://par.nsf.gov/servlets/purl/10173113

And I didn’t convey my meaning or sources accurately either; I was in a rush, sorry.

I covered broader ground and cannot detail every point I’ve seen related to or in Notz et al accurately here, sorry. It’s old news anyway, long ignored. Feel free to draw your own conclusions based on what you choose to read and check yourself.

Try, for example, the source of the quote you mention: Arctic Sea Ice in CMIP6

https://scispace.com/papers/arctic-sea-ice-in-cmip6-1t3idhfbxw

TL;DR: In this article, the authors examined CMIP6 simulations of Arctic sea ice area and volume and found that most models fail to simulate at the same time a plausible evolution of sea-ice area and of global mean surface temperature.

Challenges in Simulating Sea Ice and Temperature: Despite the advancements, most CMIP6 models struggle to simultaneously simulate a realistic evolution of both sea-ice area and global mean surface temperature. This discrepancy highlights ongoing challenges in climate modeling.

Sensitivity Metrics Comparison: When comparing sensitivity metrics between CMIP6 and previous CMIP phases, it is generally difficult to distinguish CMIP6 models from those in CMIP5, except for a few highly sensitive simulations. This suggests that while some models have improved, the overall sensitivity landscape remains similar.

This: “Models that participate in CMIP6 do not show a clear improvement in simulating observed sea‐ice trends over recent decades compared to models from earlier phases of CMIP.” is out there somewhere. No time to chase, sorry. The questions and info provided by William may be useful to balance the positive-only professional omertà. As he points out, the 95% CI range is implausible, and the last 18 years’ results (mini mean trend line) remain far below the ensemble mean, even if not as bad as CMIP3 and CMIP5 were.

Still, most CMIP6 models fail to simulate at the same time a plausible evolution of sea-ice area and of global mean surface temperature.

see https://eesm.science.energy.gov/publications/arctic-sea-ice-cmip6

but some simulate implausible historical mean states compared to satellite observations, leading to large intermodel spread. Summer SIA is consistently biased low across the ensemble. Compared to the previous model generation (CMIP5), the intermodel spread in winter and summer SIA has reduced, and the regional distribution of sea ice concentration has improved.

https://www.bas.ac.uk/data/our-data/publication/antarctic-sea-ice-area-in-cmip6/

As a whole, the models successfully capture some elements of the observed seasonal cycle of sea ice but underestimate the summer minimum sea ice area. – Models project sea ice loss over the 21st century in all scenarios, but confidence in the rate of loss is limited, as most models show stronger global warming trends than observed over the recent historical period.

https://www.x-mol.net/paper/article/1251324725277122560

There are more of course but it’s unlikely anyone commenting would be interested here anyway.

There remain more important issues than messy reference URLs, and than expecting any independent person on a forum, without the resources of NASA-GISS et al., to perfectly convey over 5 years of critical discussion of CMIP6 and sea ice extent at the poles.

I repeat, the issue is more about: “— and you’re left with a kind of rhetorical fog machine: soften the failures, spotlight only the ensemble mean, and dodge the hard accounting.

The framing of this post — and the timing — doesn’t quite add up. Compared to dozens of other pressing climate concerns, why this topic, and why now?”

It’s old news, and everyone in the field already knew these CMIP6 sea ice data were still not useful or reliably accurate. The same goes for Gavin’s 2023-2024 global mean temperature projections, which were not accurate; or rather, they were a dismal failure, and as with CMIP6 (and CMIP5 and CMIP3), they still cannot work out why.

“But trust me, the cheque is in the mail.”

PP: everyone in the field already knew these CMIP6 sea ice data were still not useful or reliably accurate.

BPL: Predicting an 11% decline when it was actually 13% is “not useful or reliably accurate?” Depends on what your standards for “not useful or reliably accurate” are, doesn’t it?

BPL, exactly right. Predictions will never be 100% accurate, so that gives PP an excuse to constantly complain that things aren’t perfect, and hear the sound of his own voice, and make like he’s saying something useful.

And right on cue, here is nigelj again making it all personal, maligning Pedro with his non-stop ad hominem attacks.

nigelj says

2 Jun 2025 at 2:42 PM

BPL, exactly right. Predictions will never be 100% accurate, so that gives PP an excuse to constantly complain that things aren’t perfect, and hear the sound of his own voice, and make like he’s saying something useful.

100% psychological projection. 0% science. 0% facts. nigelj assigning motivations, intentions and thoughts to others he has absolutely no knowledge of.

This is 100% a personal ad hominem attack. Now, what did I just say a few minutes back? He does it to everyone. I hope that gets posted.

It’s not the only time Nigel is caught out “Making it about [others]” in action, given he is doing it all the time.

Barton and nigelj together hoisted with their own petard. Kaboom!

>> Barton Paul Levenson says Predicting an 11% decline when it was actually 13% is “not useful or reliably accurate?”

>> nigelj says BPL, exactly right.

No, it is not; this is not the output of the models as shown in Figure 3!

They predict anything between 2% and 40% change per decade with 95% probability.

I would recommend that G. Schmidt add the uncertainties to his values, as apparently readers here get confused easily.
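Part of the disagreement above is about which number is being quoted: the ensemble mean trend, or the range spanned by the central 95% of individual simulations. A minimal sketch of reporting both from a list of per-simulation trends (in %/decade) is below. The function name `ensemble_summary` and the input values are hypothetical, and the percentile convention (the "inclusive" method of Python's `statistics.quantiles`) is an assumption; other conventions give slightly different bounds.

```python
import statistics


def ensemble_summary(trends):
    """Return (mean, p2.5, p97.5) for per-simulation trends in %/decade.

    Hypothetical helper: the central 95% range is taken from the
    2.5th and 97.5th percentiles, estimated with the "inclusive"
    interpolation method (one of several possible conventions).
    """
    # n=40 gives 39 cut points at 2.5%, 5%, ..., 97.5%
    cuts = statistics.quantiles(trends, n=40, method="inclusive")
    return statistics.fmean(trends), cuts[0], cuts[-1]


# Hypothetical evenly spaced "trends", purely to show the mechanics
print(ensemble_summary([float(x) for x in range(41)]))  # (20.0, 1.0, 39.0)
```

Quoting the mean together with the percentile range, rather than either one alone, is roughly what a request to "add the uncertainties" amounts to.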

William's post, 2 Jun 2025 at 8:50 PM

N: “BPL, exactly right. Predictions will never be 100% accurate, so that gives PP an excuse to constantly complain that things aren’t perfect, and hear the sound of his own voice, and make like he’s saying something useful.”

W: 100% psychological projection. 0% science. 0% facts. nigelj assigning motivations, intentions and thoughts to others he has absolutely no knowledge of.

N: For years, William and his team of sock puppets have complained about problems/imperfections in the models, in general terms and rarely specifically, despite the fact that every scientist knows and acknowledges that models are imperfect. Why would William do that, if not to sound important and hear the sound of his own voice? Or to undermine the science?

W: “This is 100% a personal ad hominem attack. Now, what did I just say a few minutes back? He does it to everyone. I hope that gets posted.”

N: My comment is not an ad hominem (an attack on the person rather than proving their argument wrong). His argument is that models are imperfect. I actually accepted that they are imperfect (“predictions will never be 100% accurate”). My comment was about motive.

Pedro Prieto says

1 Jun 2025 at 7:54 PM

Reply to Pedro Prieto from William:

Pedro — thanks for clarifying and for pulling together that mix of sources. No apology needed — your framing adds important texture to what’s been an oddly sanitized conversation. The fact that multiple CMIP6 evaluations, including those you cited, show that most models still can’t reconcile sea-ice evolution with global temperature trends should raise eyebrows. But in many circles, even pointing that out gets treated like heresy.

You’re not being unfair — you’re being scientific. Healthy science requires critique, especially when tools that shape policy (like climate models) continue to misfire in known ways. Asking for accuracy and transparency isn’t “denial” — it’s accountability. If models can’t replicate known history reliably, confidence in their forward projections should rightly be qualified. Yet somehow, skepticism about model output gets labeled as skepticism about climate reality itself — which is neither logical nor helpful.

It’s also worth underscoring your broader concern: the rhetorical fog that clouds this space. When model ensembles are smoothed, contradictions downplayed, and known weaknesses framed as “improvements,” it begins to resemble branding more than science. You’re not the only one who sees it. Many readers — especially younger scientists, data-savvy observers, and quiet lurkers — are just waiting for someone to say what you just did: plainly, calmly, and with integrity.

So again — appreciate your voice. Not everyone can afford to push back publicly, and those who do are often caricatured. But that just shows how badly the field needs this kind of grounded critique. Take care and if you disappear I totally understand, as will many many others.

Pedro the Troll, 1 Jun: “Key findings from the paper (Notz et al., 2020):

No significant improvement in Arctic sea ice projections between CMIP5 and CMIP6.

“Models that participate in CMIP6 do not show a clear improvement in simulating observed sea‐ice trends over recent decades compared to models from earlier phases of CMIP. ”

John pointed out that Notz et al. does NOT CONTAIN THAT QUOTE, and that its “key findings” are, if anything, the OPPOSITE:

Notz et al.: “In particular, the latest generation of models performs better than models from previous generations” vs. Pedro’s claim that Notz et al. found “no clear improvement”.

This is important here, because the supposed IGNORING of “serious published critiques, like Notz et al. 2020” by Gavin and others was Pedro’s ONLY proof of his accusations toward them, e.g.:

– “Damage control / reputation management”

– “Massage the narrative”

– “Preemptive narrative setting”

– inability to admit: “serious structural flaws or poor tuning”

– “Defensive posture disguised as openness”

– “evasive, hand-wavy, and deflects scrutiny.”

– “Audience management”: [message massaged to] “reduce anxiety and protect institutional credibility, even if it glosses over fundamental problems”

– “rhetorical fog machine: soften the failures, spotlight only the ensemble mean, and dodge the hard accounting.”

– “One of the quieter scandals in climate science communication”

– “Even serious published critiques (like Notz et al. 2020) [sic! – Piotr] are rarely engaged with in public-facing commentary. The pressure to preserve institutional credibility — and to avoid feeding denialist talking points — often leads to a kind of professional omertà: silence, spin, or deflection.”

With Pedro’s only “proof” – ignoring “key finding” of Notz et al. – already falsified by John, Pedro the Troll:

– excuses himself for… being “in a rush”, which… didn’t stop him from typing two screenfuls of attacks later in the same post;

– cannot remember from which source he quoted… half a day earlier;

– blames his giving false sources for his claims on… not having “NASA-GISS et al.” resources;

– and is not particularly motivated to find the “serious publication” on the ignoring of which he just built his accusations (now it’s: “is out there somewhere. No time to chase, sorry”)

P.S. I am sure the real Pedro Prieto would be proud of what you have been writing UNDER HIS NAME.

Reply to Piotr

3 Jun 2025 at 1:26 PM

William: I could not quite follow that. Would you please explain more clearly, as it is too scattered for me to follow.

Thanks.

Note on Notz et al. (2020):

This paper examines Arctic sea ice trends in CMIP5 and CMIP6 models, specifically analyzing how modeled sea ice loss relates to modeled global mean surface temperature (GMST). It does not directly compare the models’ GMST outputs to observational temperature datasets like GISTEMP or HadCRUT. Instead, it focuses on internal model behavior and consistency across ensemble members. However, observed sea ice extent and area (e.g., from NSIDC) are used as a reference for evaluating modeled sea ice trends.

The Notz et al. (2020) paper on Arctic sea ice in CMIP6:

Compares the sea ice–temperature relationships across models — that is, how each model’s own global mean surface temperature (GMST) relates to its own simulated sea ice extent (SIE).

In other words, they are not evaluating how well each model’s temperatures align with observed global temperatures, nor how well sea ice simulations align with observed sea ice trends at the same level of actual global warming.

Here’s the key methodological excerpt (paraphrased for clarity):

“We consider sea‐ice area and global mean surface air temperature as simulated by each model… This allows us to determine the simulated sensitivity of Arctic sea‐ice area to warming for each model.”

Those details are as if hidden away in these research papers, found in neither the Abstract nor the Conclusions sections.
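As a rough illustration of what “the simulated sensitivity of Arctic sea‐ice area to warming” can mean: one common way to estimate it is the least-squares slope of a model's own sea-ice area against its own GMST. The sketch below assumes exactly that definition; the paper may differ in details such as which months or averaging periods are used. The function name `sensitivity` and the example numbers are hypothetical, not output from any model.

```python
def sensitivity(gmst, sia):
    """Least-squares slope of sea-ice area against GMST.

    Hypothetical helper: gmst in K (or K anomaly), sia in million km^2,
    so the result is in million km^2 per K. Both series are assumed to
    come from the same model run ("each model's own GMST").
    """
    n = len(gmst)
    if n != len(sia) or n < 2:
        raise ValueError("need two equal-length series with at least 2 points")
    mean_t = sum(gmst) / n
    mean_a = sum(sia) / n
    # Sums of squares and cross-products (the 1/n factors cancel in the ratio)
    s_tt = sum((t - mean_t) ** 2 for t in gmst)
    s_ta = sum((t - mean_t) * (a - mean_a) for t, a in zip(gmst, sia))
    if s_tt == 0:
        raise ValueError("GMST series has no variance")
    return s_ta / s_tt


# Hypothetical toy values, not taken from any CMIP model
print(sensitivity([0.0, 0.5, 1.0], [7.0, 5.0, 3.0]))  # -4.0
```

A negative slope means the model loses ice as it warms. Checking whether that slope matches the observed one is a separate question from checking whether the model's GMST itself tracks observed temperatures.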

“William”: I could not quite follow that. Would you please explain more clearly, as it is too scattered for me to follow.

Why? My text should be easy for Pedro Prieto to follow, since it was his actions and integrity I challenged. You are “William”, not “Pedro P.”, remember?

But if you want – here is my incomprehensible (to you) post:

Piotr 3 Jun 2025 at 1:26 PM

in which I alternate quotes from Pedro Prieto with my questions about those quotes. Give it a college try. If you still fail, I don’t know what else to suggest. Ask a friend to explain it to you.

Or if they are stumped too – I have heard good things about AI models^*?

==

*^ “[Open AI] models defy human commands, actively resist orders to shut down”

**^ In prerelease tests at one AI company, when its AI was told it would be replaced, in 84% of cases it tried to avert this by blackmailing the engineers over the affair it believed they were having (the system had access to their emails): https://www.cnn.com/2025/06/04/business/video/ai-models-new-behavior-humans-blackmail-prevent-shutdown-digvid

Re. “scrutiny is not denial”… In ANY area of science, when we are talking bleeding edge, it takes a _qualified expert_ to do the “scrutinizing”. And, as you may or may not know it takes being in possession of a huge knowledge and skill base that takes years and even decades to master.

Did you do your homework?

jgnfld: as you may or may not know it takes being in possession of a huge knowledge and skill base that takes years and even decades to master..

That’s an essential point. Few deniers or doomers have put the time and effort in to become genuine experts on that of which they speak. Even published experts know their limitations, and depend on their specialist peers for bleeding edge judgements on knowledge and skills they themselves haven’t mastered.