And so it goes – another year, another annual data point. As has become a habit (2009, 2010), here is a brief overview and update of some of the most relevant model/data comparisons. We include the standard comparisons of surface temperatures, sea ice and ocean heat content to the AR4 and 1988 Hansen et al simulations.

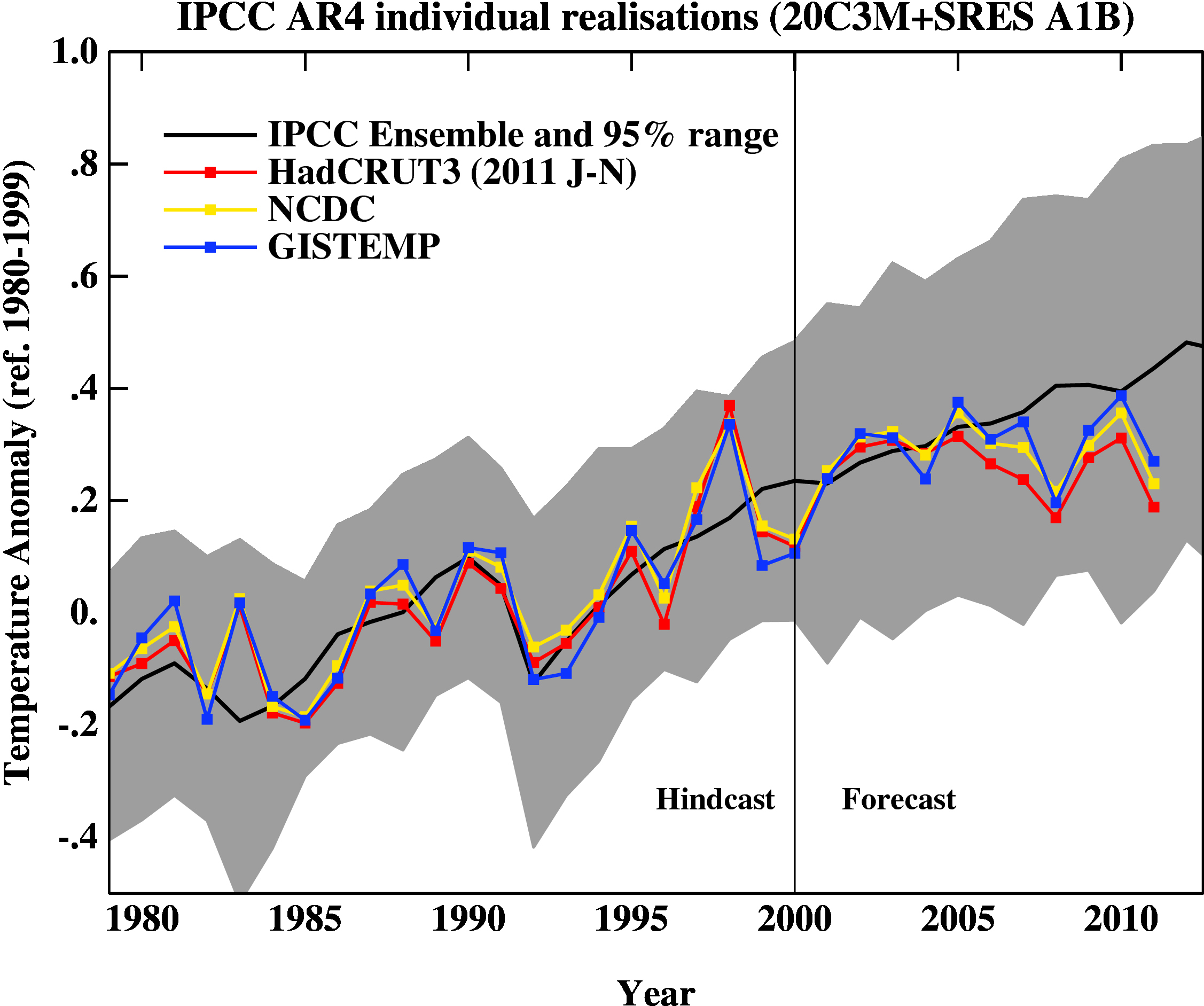

First, a graph showing the annual mean anomalies from the IPCC AR4 models plotted against the surface temperature records from the HadCRUT3v, NCDC and GISTEMP products (it really doesn’t matter which). Everything has been baselined to 1980-1999 (as in the 2007 IPCC report) and the envelope in grey encloses 95% of the model runs.
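The figure rests on two processing steps. First, every series is re-expressed relative to its own 1980-1999 mean. Second, the grey envelope is drawn across the model runs. The post does not say exactly how the 95% envelope was computed. The sketch below assumes it is the year-by-year 2.5th to 97.5th percentile range across runs. The function names are hypothetical and no real data are included.

```python
import statistics


def rebaseline(years, values, start=1980, end=1999):
    """Express a series as anomalies relative to its own mean over start..end (inclusive)."""
    ref = [v for y, v in zip(years, values) if start <= y <= end]
    if not ref:
        raise ValueError("series does not cover the baseline period")
    offset = statistics.fmean(ref)
    return [v - offset for v in values]


def percentile(sorted_vals, p):
    """Percentile p (0-100) of an already sorted list, interpolating linearly between ranks."""
    k = (len(sorted_vals) - 1) * p / 100.0
    lo = int(k)
    hi = min(lo + 1, len(sorted_vals) - 1)
    return sorted_vals[lo] + (sorted_vals[hi] - sorted_vals[lo]) * (k - lo)


def envelope_95(runs):
    """Year-by-year 2.5th and 97.5th percentiles across equal-length (rebaselined) model runs."""
    lower, upper = [], []
    for column in zip(*runs):  # one column = all runs for a single year
        ordered = sorted(column)
        lower.append(percentile(ordered, 2.5))
        upper.append(percentile(ordered, 97.5))
    return lower, upper
```

Pass each model run and each observational product through `rebaseline` before computing the envelope. That way every curve shares the same zero.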

The La Niña event that emerged in 2011 definitely cooled the year in a global sense relative to 2010, although there were extensive regional warm extremes. Differences between the observational records are mostly related to interpolations in the Arctic (but we will check back once the new HadCRUT4 data are released). Checking up on our predictions from last year, I forecast that 2011 would be cooler than 2010 (because of the emerging La Niña) but would still rank in the top 10. This was true for GISTEMP (2011 was #9), but not quite for HadCRUT3v (#12) or NCDC (#11). However, in the GISTEMP record this was the warmest year that started off (DJF) with a La Niña. By this index, the previous La Niña years were 2008, 2001, 2000 and 1999, using a 5-month minimum for a specific event. It was the second warmest such year (after 2001) in the HadCRUT3v and NCDC indices. Given current indications of only mild La Niña conditions, 2012 will likely be a warmer year than 2011, so again another top 10 year, but not a record breaker – that will have to wait until the next El Niño.
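Rankings such as "#9 in GISTEMP" or "the warmest year that started with a La Niña" are simple sorts of the annual means. The sketch below assumes each record is a plain dict mapping year to annual anomaly. The function names are hypothetical and no real data are included.

```python
def rank_of(year, record):
    """1-based rank of `year` in `record` (dict: year -> annual anomaly), warmest first.

    Exact ties are ordered by year, which is an arbitrary choice."""
    ordered = sorted(record, key=lambda y: (-record[y], y))
    return ordered.index(year) + 1


def rank_among(year, record, subset):
    """Rank of `year` among only the years in `subset` (e.g. years that began with a La Niña)."""
    return rank_of(year, {y: record[y] for y in subset if y in record})
```

To compare 2011 with earlier La Niña-onset years, pass those years (plus 2011) as `subset`. Note that the ranking depends entirely on which product's annual means go into `record`.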

People sometimes claim that “no models” can match the short-term trends seen in the data. This is not true. For instance, the range of trends in the models for 1998-2011 is [-0.07, 0.49] ºC/dec, with MRI-CGCM (run5) the laggard of the pack, running colder than the observations.
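To check whether any model matches a short-term observed trend, fit a straight line to each run over the same window and compare the slopes. The sketch below assumes annual means on a common list of years and ordinary least squares; the post does not specify the fitting method. All names are illustrative.

```python
def decadal_trend(years, values):
    """Ordinary least-squares slope of values against years, expressed per decade."""
    n = len(years)
    mx, my = sum(years) / n, sum(values) / n
    sxx = sum((x - mx) ** 2 for x in years)
    sxy = sum((x - mx) * (y - my) for x, y in zip(years, values))
    return 10 * sxy / sxx


def compare_trends(years, obs, runs, start, end):
    """Min/max model trend over start..end, the observed trend, and how many runs trend at or below it."""
    idx = [i for i, y in enumerate(years) if start <= y <= end]
    yrs = [years[i] for i in idx]
    obs_trend = decadal_trend(yrs, [obs[i] for i in idx])
    model_trends = [decadal_trend(yrs, [run[i] for i in idx]) for run in runs]
    n_cooler = sum(t <= obs_trend for t in model_trends)
    return min(model_trends), max(model_trends), obs_trend, n_cooler
```

A non-zero `n_cooler` is all that is needed to refute "no models can match". It says nothing about which run is "right".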

In interpreting this information, please note the following (repeated from previous years):

- Short term (15 years or less) trends in global temperature are not usefully predictable as a function of current forcings. This means you can’t use such short periods to ‘prove’ that global warming has or hasn’t stopped, or that we are really cooling despite this being the warmest decade in centuries.

- The AR4 model simulations were an ‘ensemble of opportunity’ and vary substantially among themselves in the forcings imposed, the magnitude of the internal variability and, of course, the sensitivity. Thus, while they do span a large range of possible situations, the average of these simulations is not ‘truth’.

- The model simulations use observed forcings up until 2000 (or 2003 in a couple of cases) and use a business-as-usual scenario subsequently (A1B). The models are not tuned to temperature trends pre-2000.

- Differences between the temperature anomaly products are related to: different selections of input data, different methods for assessing urban heating effects, and (most important) different methodologies for estimating temperatures in data-poor regions like the Arctic. GISTEMP assumes that the Arctic is warming as fast as the stations around the Arctic, while HadCRUT3v and NCDC assume the Arctic is warming as fast as the global mean. The former assumption is more in line with the sea ice results and independent measures from buoys and the reanalysis products.

- Model-data comparisons are best when the metric being compared is calculated the same way in both the models and data. In the comparisons here, that isn’t quite true (mainly related to spatial coverage), and so this adds a little extra structural uncertainty to any conclusions one might draw.
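The first point above can be illustrated with synthetic data. This sketch assumes a fixed underlying trend of 0.2ºC/decade plus AR(1) noise with made-up parameters (phi = 0.6, sigma = 0.1ºC). These are not values fitted to any real record. The code shows how widely trends fitted over short windows scatter around the true value.

```python
import random
import statistics


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def synthetic_series(n_years, rng, trend_per_year=0.02, phi=0.6, sigma=0.1):
    """Linear trend plus AR(1) 'weather' noise; all parameters are illustrative assumptions."""
    noise, series = 0.0, []
    for t in range(n_years):
        noise = phi * noise + rng.gauss(0.0, sigma)
        series.append(trend_per_year * t + noise)
    return series


def trend_spread(window, n_trials=2000, seed=1):
    """Mean, standard deviation and fraction of non-positive fitted trends (ºC/decade)
    over many synthetic `window`-year series sharing the same underlying trend."""
    rng = random.Random(seed)
    years = list(range(window))
    trends = [10 * ols_slope(years, synthetic_series(window, rng)) for _ in range(n_trials)]
    frac_flat = sum(t <= 0 for t in trends) / n_trials
    return statistics.fmean(trends), statistics.stdev(trends), frac_flat


for window in (10, 15, 30):
    mean, spread, flat = trend_spread(window)
    print(f"{window:2d}-yr trends: mean {mean:.2f}, sd {spread:.2f}, non-positive {flat:.0%}")
```

With these assumed settings, the 10-year trends scatter far more widely than the 30-year ones. A noticeable fraction of the short windows show no warming at all, even though the underlying trend never changes.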

Foster and Rahmstorf (2011) showed nicely that if you account for some of the obvious factors affecting the global mean temperature (such as El Niños/La Niñas, volcanoes, etc.), there is a strong and continuing upward trend. An update to that analysis using the latest data is available here, and shows the same continuing trend.

There will soon be a few variations on these results. Notably, we are still awaiting the update of the HadCRUT (HadCRUT4) product to incorporate the new HadSST3 dataset and the upcoming CRUTEM4 data which incorporates more high latitude data (Jones et al, 2012). These two changes will impact the 1940s-1950s temperatures, the earliest parts of the record, the last decade, and will likely affect the annual rankings (and yes, I know that this is not particularly significant, but people seem to care).
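The Foster and Rahmstorf (2011) adjustment is, at heart, a multiple linear regression of temperature on time plus indices for ENSO, volcanic aerosols and solar variability. The fitted index contributions are then subtracted. The sketch below is a simplified version built on several assumptions:

- It solves the normal equations directly.
- It uses no lags, whereas FR11 also fitted a lag for each factor.
- It leaves the choice of index series to the user.

The function names are hypothetical.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def regress_and_adjust(time, temp, factors):
    """Least-squares fit of temp ~ a + b*time + sum_k c_k * factor_k.

    Returns (coefficients, adjusted): `adjusted` is temp with the fitted factor
    contributions (e.g. ENSO, volcanic, solar) removed, i.e. a + b*time + residual."""
    columns = [[1.0] * len(time), list(time)] + [list(f) for f in factors]
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(p * y for p, y in zip(ci, temp)) for ci in columns]
    coef = solve(xtx, xty)
    adjusted = [
        y - sum(coef[2 + k] * f[i] for k, f in enumerate(factors))
        for i, y in enumerate(temp)
    ]
    return coef, adjusted
```

`coef[1]` is the underlying trend per time unit once the factors are accounted for. The `adjusted` series is what a "trend with ENSO and volcanoes removed" plot would show.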

Ocean Heat Content

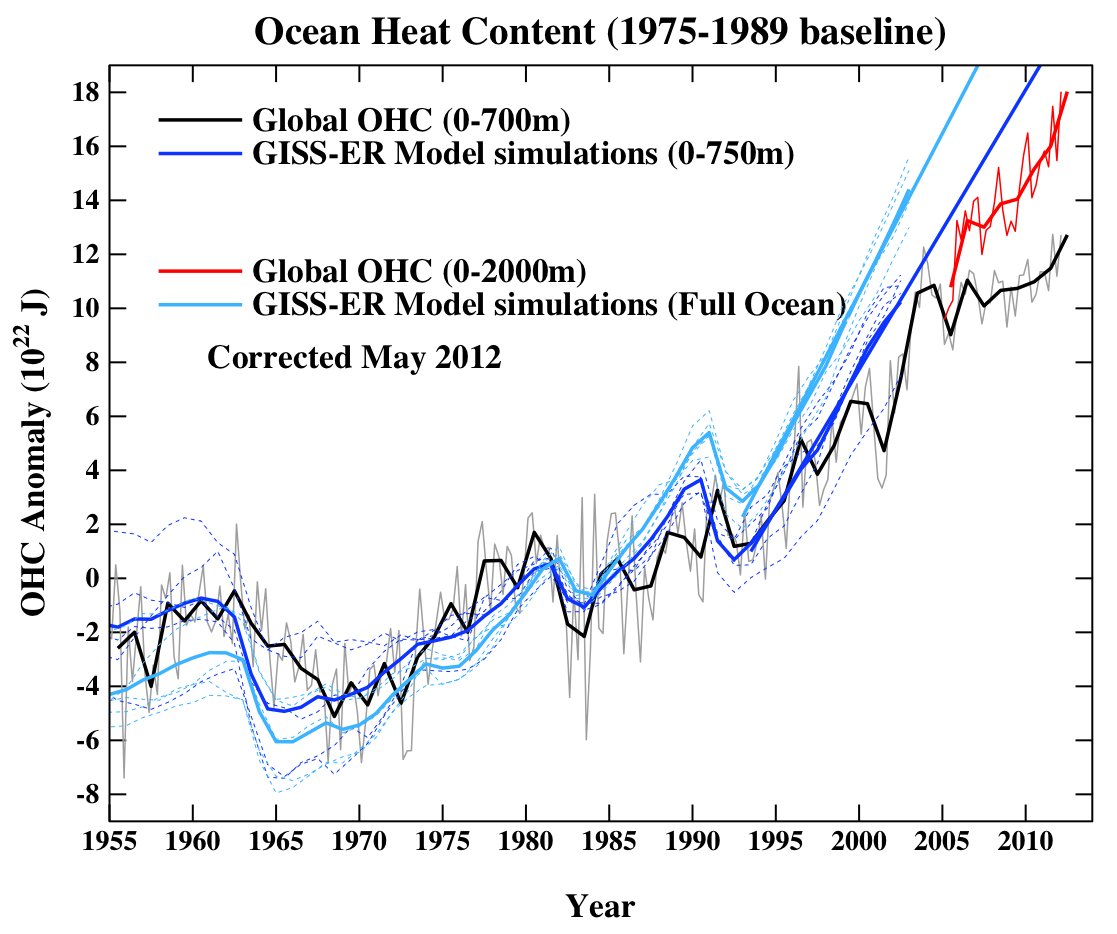

Figure 2 compares the ocean heat content (OHC) changes in the models with the latest data from NODC. As before, I don’t have the post-2003 AR4 model output, so I have extrapolated the ensemble mean to 2012 (understanding that this is not ideal). New this year are the OHC changes down to 2000m, which NODC has started to produce alongside the usual top-700m record. For better comparison, I have plotted the ocean model results for 0-750m and for the whole ocean. All curves are baselined to the period 1975-1989.
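Two steps are described here: baselining to 1975-1989 and extending the ensemble mean past 2003. The post does not say how the extrapolation was done. For illustration only, this sketch assumes a straight-line fit over a user-chosen recent window. It also assumes the years are consecutive integers in ascending order.

```python
def rebaseline(years, values, start=1975, end=1989):
    """Anomalies relative to the series mean over start..end (inclusive)."""
    ref = [v for y, v in zip(years, values) if start <= y <= end]
    if not ref:
        raise ValueError("series does not cover the baseline period")
    mean = sum(ref) / len(ref)
    return [v - mean for v in values]


def extrapolate_linear(years, values, fit_from, to_year):
    """Extend an annual series to `to_year` along the OLS line fitted to years >= fit_from.

    Assumes `years` is a list of consecutive integers in ascending order."""
    pts = [(y, v) for y, v in zip(years, values) if y >= fit_from]
    n = len(pts)
    mx = sum(y for y, _ in pts) / n
    my = sum(v for _, v in pts) / n
    slope = sum((y - mx) * (v - my) for y, v in pts) / sum((y - mx) ** 2 for y, _ in pts)
    new_years = list(range(years[-1] + 1, to_year + 1))
    return years + new_years, values + [my + slope * (y - mx) for y in new_years]
```

The order of the two steps does not matter. Subtracting a constant shifts the fitted line by the same constant without changing its slope.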

I’ve left off the data from the Lyman et al (2010) paper for clarity, but note that there is some structural uncertainty in the OHC observations. Similarly, different models have different changes, and the other GISS model from AR4 (GISS-EH) had slightly less heat uptake than the model shown here.

Update (May 2012): The figure has been corrected for an error in the model data scaling. The original image can still be seen here.

As can be seen, the long-term trends in the models match those in the data, but the short-term fluctuations are both noisy and imprecise. [Update: with the correction in the graph, this is a less accurate description. The extrapolations of the ensemble give OHC changes to 2011 that range from slightly greater than the observations to quite a bit larger.] As an aside, a number of comparisons floating around use only the post-2003 data to compare to the models. These are often baselined in a way that exaggerates the model-data discrepancy, basically by picking a near-maximum and then drawing the linear trend in the models from that peak. This falls into the common trap of assuming that short-term trends are predictive of long-term trends – they just aren’t (there is a nice explanation of the error here).
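The baselining trap described here is easy to quantify. In this sketch, the same model slope is pinned either at the observed peak or at the mean of the observations over the comparison window. The code then reports the average gap between the line and the data. The series and slope in the usage lines are made-up numbers, not NODC data.

```python
import statistics


def mean_offset(years, obs, slope, anchor_year, anchor_value):
    """Average (line - obs) for a straight line of the given slope through (anchor_year, anchor_value)."""
    line = [anchor_value + slope * (y - anchor_year) for y in years]
    return statistics.fmean([m - o for m, o in zip(line, obs)])


def compare_anchorings(years, obs, slope):
    """Mean gap when the line starts at the observed peak vs. when it passes through the data's mean."""
    peak = max(range(len(obs)), key=obs.__getitem__)
    at_peak = mean_offset(years, obs, slope, years[peak], obs[peak])
    at_mean = mean_offset(years, obs, slope, statistics.fmean(years), statistics.fmean(obs))
    return at_peak, at_mean


# Hypothetical illustration: identical slope, different anchoring.
print(compare_anchorings([0, 1, 2, 3, 4], [0.0, 0.5, 0.1, 0.2, 0.3], 0.05))
```

In both cases the slope is identical. The apparent "discrepancy" comes entirely from where the line is pinned.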

Summer sea ice changes

Sea ice changes this year were dramatic, with the Arctic September minimum reaching record (or near record) values (depending on the data product). Updating the Stroeve et al, 2007 analysis (courtesy of Marika Holland) using the NSIDC data we can see that the Arctic continues to melt faster than any of the AR4/CMIP3 models predicted.

This may not be true for the CMIP5 simulations – but we’ll have a specific post on that another time.

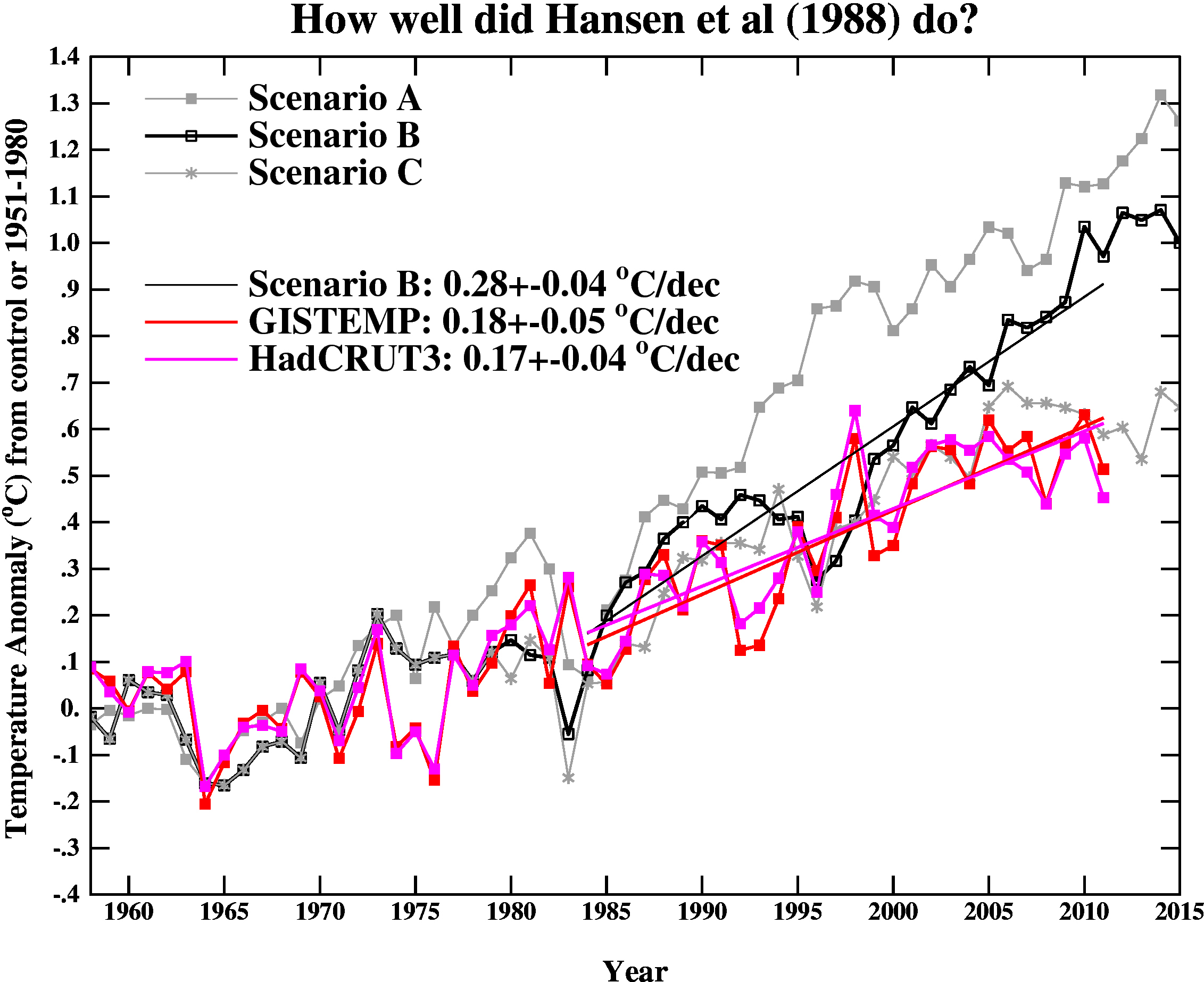

Hansen et al, 1988

Finally, we update the Hansen et al (1988) comparisons. Note that the old GISS model had a climate sensitivity that was a little higher (4.2ºC for a doubling of CO2) than the best estimate (~3ºC). As stated in previous years, the actual forcings that occurred are not exactly the same as those in the different scenarios used. We noted in 2007 that Scenario B was running a little high compared with the actual forcing growth (by about 10%) using estimated forcings up to 2003 (Scenario A was significantly higher, and Scenario C was lower).

For the period 1984 to 2011 (1984 was chosen because that is when these projections started), scenario B has a trend of 0.28+/-0.05ºC/dec (95% uncertainties, no correction for auto-correlation). For GISTEMP and HadCRUT3, the trends are 0.18+/-0.05 and 0.17+/-0.04ºC/dec respectively. For reference, the trends in the AR4 models for the same period have a range of 0.21+/-0.16ºC/dec (95%).
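A trend with "95% uncertainties, no correction for auto-correlation" is what a plain least-squares fit gives. This sketch assumes annual means and a normal-approximation multiplier of 1.96 on the standard error of the slope; the post does not say which multiplier was used. Because autocorrelation is ignored, the interval is narrower than a corrected one would be.

```python
import math


def trend_with_ci(years, values, z=1.96):
    """OLS trend and half-width of its ~95% interval, both per decade.

    Residuals are treated as independent (no autocorrelation correction)."""
    n = len(years)
    if n < 3:
        raise ValueError("need at least three points")
    mx, my = sum(years) / n, sum(values) / n
    sxx = sum((x - mx) ** 2 for x in years)
    slope = sum((x - mx) * (y - my) for x, y in zip(years, values)) / sxx
    intercept = my - slope * mx
    resid_var = sum((y - intercept - slope * x) ** 2 for x, y in zip(years, values)) / (n - 2)
    se = math.sqrt(resid_var / sxx)  # standard error of the slope
    return 10 * slope, 10 * z * se
```

For example, `trend, half_width = trend_with_ci(list(range(1984, 2012)), anomalies)` would apply it to a hypothetical list of 28 annual anomalies covering 1984-2011.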

As we stated before, the Hansen et al ‘B’ projection is running warm compared to the real world (exactly how much warmer is unclear). As discussed in Hargreaves (2010), while this simulation was not perfect, it has shown skill in that it has out-performed any reasonable naive hypothesis that people put forward in 1988 (the most obvious being a forecast of no-change). However, the use of this comparison to refine estimates of climate sensitivity should be done cautiously, as the result is strongly dependent on the magnitude of the assumed forcing, which is itself uncertain. Recently there have been some updates to those forcings, and so my previous attempts need to be re-examined in the light of that data and the uncertainties (particularly in the aerosol component). However, this is a complicated issue, and requires more space than I really have here to discuss, so look for this in an upcoming post.

Overall, given the latest set of data points, we can conclude (once again) that global warming continues.

References

- G. Foster, and S. Rahmstorf, "Global temperature evolution 1979–2010", Environmental Research Letters, vol. 6, pp. 044022, 2011. http://dx.doi.org/10.1088/1748-9326/6/4/044022

- P.D. Jones, D.H. Lister, T.J. Osborn, C. Harpham, M. Salmon, and C.P. Morice, "Hemispheric and large‐scale land‐surface air temperature variations: An extensive revision and an update to 2010", Journal of Geophysical Research: Atmospheres, vol. 117, 2012. http://dx.doi.org/10.1029/2011JD017139

- J.C. Hargreaves, "Skill and uncertainty in climate models", WIREs Climate Change, vol. 1, pp. 556-564, 2010. http://dx.doi.org/10.1002/wcc.58

It would be nice to see some error bars on the OHC data.

[Response: Yes, it would. But that figure was already too crowded – this one has something reasonable, though systematic differences between products are larger. – gavin]

I wondered if Bob Tisdale might have had a point in the midst of a rather unclear post.

I couldn’t find any rationale for the baseline choice in the Hansen et al 2005 OHC model/obs comparison, or in the reference papers for the modelling.

If Tisdale skewed his comparison by choosing a high anomaly to run the trend line from, I wondered if and how more care had been taken in Hansen et al. I checked the start year OHC anomaly (1993) for Hansen et al, and it is a low anomaly year (NODC), so the comparison is baselined to a bit of a trough – not as extreme as Tisdale’s choice, though. It appears to me that such short-term comparisons make for dicey baselining choices.

I notice that you’ve always been a bit dissatisfied, Gavin, with your baselining choice for OHC in these updates. I wonder about the rationale in Hansen et al, and more generally how to baseline models and obs such that the choice is well-justified rather than fit to taste, particularly for short-term data.

Hansen et al 05 – http://pubs.giss.nasa.gov/docs/2005/2005_Hansen_etal_1.pdf

Willis 04 – http://oceans.pmel.noaa.gov/Papers/Wills_2004.pdf

Where is that graph that shows temperatures with and without anthropogenic CO2? I remember seeing it, but can’t find it when I need it.

Thank you.

Notwithstanding the continuing warming contribution by way of Greenhouse gases…

What sort of plateauing of the observational temperature record will indeed bring about a more-than-typical re-evaluation of either the modeling or the assessment of it? You did say that > 15 years brings us into the ‘non-short-term’ forcings suite… so what happens in a few years if we’ve maintained the same relative flatness?

I realize the expectations are that the addition of more arctic data (HadCRUT4) will help out a little bit by cooling a tad decades ago and warming a tad in the recent time-frame, but just for academics– what happens if we go > 15 years without a significant warming trend..? (or worse, depart the IPCC AR4 95% range– that model isn’t all that old you know).

“Given current indications of only mild La Niña conditions, 2012 will likely be a warmer year than 2011, so again another top 10 year, but not a record breaker – that will have to wait until the next El Niño”.

So, doesn’t this mean that you’ll be worried about the state of understanding, recalling your response to a similar question about trends vs. noise back in 2007?

https://www.realclimate.org/index.php/archives/2007/12/a-barrier-to-understanding/comment-page-2/#comment-78146

The questions were:

(1) If 1998 is not exceeded in all global temperature indices by 2013, you’ll be worried about state of understanding

(2) In general, any year’s global temperature that is “on trend” should be exceeded within 5 years (when size of trend exceeds “weather noise”)

(3) Any ten-year period or more with no increasing trend in global average temperature is reason for worry about state of understandings

and you replied:

[Response: 1) yes, 2) probably, I’d need to do some checking, 3) No. There is no iron rule of climate that says that any ten year period must have a positive trend.]

While I’m well aware that temperatures are still rising, you appear to concede in the above statement that it’s now very likely that 1998 will not be beaten by 2013 in all global temperature indices. Given your 2007 statement, does this indicate that the warming trend might be overstated? Just curious.

[Response: Well, since 2010 was warmer than 1998 in GISTEMP, NCDC and in the forthcoming HadCRUT4, I don’t think I need to worry too much. – gavin]

Very timely, I have linked this article to a discussion at Judith Curry’s blog, where she stated “… the very small positive trend is not consistent with the expectation of 0.2C/decade provided by the IPCC AR4…”.

http://judithcurry.com/2012/02/07/trends-change-points-hypotheses/

#7 Gavin, thanks for the response. I hadn’t seen the HadCRUT4 update – interesting. This certainly should (or ought to) put some of the skeptics to rest to some degree.

Just some quick questions, then: Why do you think that the record hasn’t been broken yet in the satellite data? And isn’t it true that the trends of the last 10-15 years are at least towards the lower end of the projections?

Anyway, you certainly realize that many skeptics have seized upon your remark as some decisive way of defining when you need to recant

http://www.google.com/#sclient=psy-ab&hl=da&site=&source=hp&q=“If+1998+is+not+exceeded+in+all+global+temperature+indices+by+2013%2C+you’ll+be+worried+about+state+of+understanding”&pbx=1&oq=”If+1998+is+not+exceeded+in+all+global+temperature+indices+by+2013%2C+you’ll+be+worried+about+state+of+understanding”&aq=f&aqi=&aql=&gs_sm=12&gs_upl=1258l1258l0l3557l1l0l0l0l0l0l0l0ll0l0&bav=on.2,or.r_gc.r_pw.,cf.osb&fp=95ff0ffa02b03136&biw=1206&bih=520

so I’d guess that you’d be in for lots of people harassing you about that for some time to come. For your own sake, you might want to figure out some kind of self-defense against a possible coming tide while you still have the possibility to steal the thunder.

Kudos, N

Re: reply to Comment by niemann — 9 Feb 2012 @ 4:18 AM

A HadCRUT4 (??) that has 2010 warmer than 1998? That would be quite a (post hoc?) adjustment since, as it now stands, the anomalies for these years are 0.499 and 0.517 respectively?!

[Response: It’s not a ‘post-hoc’ adjustment, it’s what happens when you use more data (see the Jones et al paper) and improve the processing (see the HadSST3 post). But note that the HadCRUT4 product is still under review, and I don’t have the exact numbers. – gavin]

And don’t the satellite records RSS and UAH count? They seem to be part of “all global temperature indices” don’t they?

[Response: They measure different things, and one big difference is the magnitude of their response to ENSO (which is larger than at the surface), thus the length of time for a signal to show up relative to the interannual noise will be longer. I haven’t calculated that though, but perhaps I should. – gavin]

On comment 8, I had asked in an earlier posting why the satellite data exaggerate temperature variation relative to the ground. I had thought it might have to do with the ground observations being mostly (70%) observations of water temperatures, not air temperatures per se. But a seemingly well-informed poster said no: the difference is mostly that the satellites, by picking up temperatures through a large thickness of the atmosphere, capture the amplification of tropical temperature increases that appears aloft (through transport of water vapor), rather than at the surface. The 1998 satellite record was set during the “El Niño of the century”, and so would be due largely to high tropical Pacific sea surface temperatures. So in a very real sense, assuming the response I got earlier was correct, the high satellite reading for 1998 really is an exaggeration of surface temperatures. In other words, the still-standing 1998 satellite record is an artifact of sensitivity to tropospheric amplification, not an accurate record of surface temperatures.

The original posting is here:

https://www.realclimate.org/index.php/archives/2011/12/global-temperature-news/comment-page-1/#comment-221464

#9 and inline–

Elaborating a bit for Sven, the satellite data reflect relatively deep horizontal ‘slices’ of the atmosphere. Thus the commonly referenced data concern not air temperatures as directly measured at 2 meters (which is what met stations do) but rather the inferred average temperature of the lowest few kilometers of the atmosphere. Also note that the surface data incorporate sea surface temperatures, which are much less ‘noisy’ than air temperatures.

Put that together, and you’ve got just what Gavin says–way, way bigger responses to ENSO (and similar) variations for satellite than for surface data.

How about sea level? You have posted on this many times before, but in a comparison like this it would be good to include other important global indices, such as sea level.

#9,#10,#11

Man, after all, Mommy was right when she told me that learning new things really could be fun! Thanks, y’all – Gavin, Sven, Chris and Kevin!

My last 2 cents of a quibble: While it all sounds very convincing to me, and while I won’t be among those harassing Gavin (anymore), I still guess that most skeptics won’t be satisfied with a reasonable explanation of the divergence between surface and satellite temps. I think it’s a safe bet that since Gavin made no qualifying caveats to the “all five datasets”, lots of people will still keep asking for a “recantation”.

But what the heck……..

Amazing what you can accomplish by cherrypicking intervals:

http://www.woodfortrees.org/plot/hadcrut3gl/from:1967/to:1977/trend/plot/hadcrut3gl/from:1977/to:1987/trend/plot/hadcrut3vgl/from:1987/to:1997/trend/plot/hadcrut3vgl/from:1997/to:2009/trend/plot/hadcrut3vgl/from:2009/to:2012/trend/plot/hadcrut3vgl/from:1967/to:2012

The entire temperature interval from 1967-2012–45 years–without any of the 5 segments showing a significant trend upwards.

e pur si cresce

niemann,

TLT measurements are much more subject to ENSO-driven fluctuations than surface measurements. Given that we have not had ENSO events even close to comparable to the 1998 one in the past decade, the 1998 record has not been broken. You can see this in Foster and Rahmstorf (2011), where the ENSO corrections are much larger for the satellite series.

I would be curious to see a similar comparison of model vs. observations for Antarctic sea ice retreat – is that available? Observations obviously show a small increase in Antarctic sea ice cover. I know that models did not show Antarctic sea ice retreating as quickly as Arctic sea ice, but I don’t know if they showed a positive or negative trend, and with what envelope?

Also, in general CMIP3 models didn’t include solar variability in projections, right? So, to the extent that we’ve seen a (large in comparison to past variations, small in comparison to total forcing) drop in solar intensity in the last decade, it might be appropriate to make a post-hoc adjustment for that, which would bring models and observations into closer agreement, correct? (doing so with ENSO would be more challenging, since the models are supposed to have ENSO-like behavior, so if you were to correct the observational record for ENSO, you’d also have to correct the model ensemble which would shrink the uncertainty in projected range significantly. It still might be an interesting comparison, though…)

for MMM:

“… Antarctic sea ice is increasing. This is expected from climate modeling.”

http://moregrumbinescience.blogspot.com/2010/03/wuwt-trumpets-result-supporting-climate.html

[Response: Be a little careful here. ‘climate modelling’ is not a monolith, and especially for a situation where a) there is a lot of noise and not a lot of signal, b) there are a lot of issues (climatological biases, multiple forcings), I doubt very much that all climate models suggest the same thing. I’m sure that someone has written a paper on the CMIP3 Antarctic sea ice changes, but I don’t have it at hand. This would be the appropriate source for any statements. – gavin]

Jiping Liu (who gave a talk on this in my department yesterday) and Judith Curry have a paper on the trends in Antarctic sea ice. It offers at least one suggestion that the trend should reverse to one of sea ice loss in the 21st century.

http://www.eas.gatech.edu/files/jiping_pnas.pdf

By the way, it will be interesting looking forward to the post about how the AR5 generation models have improved with respect to sea ice loss in the Arctic (my understanding is that there is no longer underestimation in the majority of the models, and even overestimation in some, though I haven’t seen the physical reasoning or improvements behind this).

Would it be possible to do something similar for precipitation – after all the projections of temperature depend, in part, on whether moisture remains in the air as water vapour or falls as precipitation?

Only a layman, but eyeballing the graph over the last three decades and taking the natural variability (noise) over that time into account, this model does appear to me to be underestimating climate sensitivity (CS). What can we say about CS, if anything, at this time?


Gavin writes “As we stated before, the Hansen et al ‘B’ projection is running warm compared to the real world (exactly how much warmer is unclear). As discussed in Hargreaves (2010), while this simulation was not perfect, it has shown skill in that it has out-performed any reasonable naive hypothesis that people put forward in 1988 (the most obvious being a forecast of no-change).”

No it hasn’t, Gavin. A “No change” prediction in 1988 appears to be around 0.35C then and 0.5C now, giving a “miss” of 0.15C, whereas Hansen’s “B” projection was about 0.35C then and about 0.9C now, giving a “miss” of 0.55C. It’s not even close. [edit – please calm down]

[Response: First off, ‘skill’ has a specific definition: SS=1 – RMS(pred)/RMS(naive). Second, back in 1988 what would have been the sensible ‘persistence’ forecast? As discussed in Hargreaves (2010), that involves some consideration of baseline periods and length. Given the temperature history as it existed then, she estimated that the 20 year mean had been the best predictor for subsequent periods, which would imply using the 1968-1987 mean (remember no-one knew what 1988 temperatures would end up being). Relative to this baseline, SS=0.39 (which is greater than zero, and hence skillful). If someone had suggested that 1987 annual mean temperature anomalies were the best estimate of temperatures for the next 30 years, SS=-0.12 relative to that baseline, so the Hansen simulation would not have been better. For the baseline chosen above (based on when the forecast actually began), SS (w.r.t 20yr) = 0.43 (again skillful), and using 1984 specifically, SS= 0.35. You could cherry-pick other single years (anything with an anomaly greater than 0.244 – i.e. only 1981 or 1983) after the fact, and you could show better skill, but you’d have to convince someone that someone had actually suggested such a forecast at the time. – gavin]
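The response defines skill as SS = 1 - RMS(pred)/RMS(naive). The sketch below computes that score, together with a persistence-style naive forecast (a past mean held constant). It follows the definition given in the response and uses hypothetical function names. It is not the code used in Hargreaves (2010).

```python
import math


def rms_error(forecast, obs):
    """Root-mean-square difference between a forecast and the observations."""
    return math.sqrt(sum((f - o) ** 2 for f, o in zip(forecast, obs)) / len(obs))


def skill_score(forecast, naive, obs):
    """SS = 1 - RMS(forecast)/RMS(naive); SS > 0 means the forecast beat the naive one."""
    return 1.0 - rms_error(forecast, obs) / rms_error(naive, obs)


def persistence(history_years, history, first, last, n_out):
    """Naive forecast: the mean of `history` over first..last, held constant for n_out years."""
    ref = [v for y, v in zip(history_years, history) if first <= y <= last]
    return [sum(ref) / len(ref)] * n_out
```

A 20-year-mean persistence baseline such as 1968-1987 would be `persistence(years, anoms, 1968, 1987, n)`. Here `years` and `anoms` stand in for a hypothetical annual record. As the response notes, the score depends strongly on which naive baseline is judged reasonable.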

Re: skillful predictions in 1988,

Has anyone kept a record of explicit predictions from back then by well-known skeptics?

Gavin writes “You could cherry-pick other single years (anything with an anomaly greater than 0.244 – i.e. only 1981 or 1983) after the fact, and you could show better skill, but you’d have to convince someone that someone had actually suggested such a forecast at the time.”

Would that argument be similar to what you’ve done in the OHC analysis where you’ve cherry picked a particular period to extrapolate model results from rather than using their overall trend? Hence making the models appear to have more skill than they actually have?

[Response: Have you got out of bed on the wrong side this morning? I’m perfectly happy to do any particular analysis anyone suggests within reason, but can you leave out the insinuations and accusations? It is more than a little tedious. – gavin]

CM@22

Check out Richard Lindzen’s comments in 1989. Not the explicit prediction you were looking for, but this may be as close as you get.

http://skepticalscience.com/lindzen-illusion-2-lindzen-vs-hansen-1980s.html

The following may also interest you.

http://www.skepticalscience.com/comparing-global-temperature-predictions.html

Gavin, I suppose I’d be as grumpy as Tim the Tool if my position were as bereft of evidence as his.

Dear Gavin,

You write,

“the use of this comparison to refine estimates of climate sensitivity should be done cautiously, as the result is strongly dependent on the magnitude of the assumed forcing, which is itself uncertain. Recently there have been some updates to those forcings, and so my previous attempts need to be re-examined in the light of that data and the uncertainties (particular in the aerosol component)”.

You refer to changes in aerosol forcing – do you have a particular study in mind I could look at? Also, isn’t the dispute over the “missing heat” also relevant here?

We have recently seen the publication of Loeb et al. 2012:

Norman G. Loeb, John M. Lyman, Gregory C. Johnson, Richard P. Allan, David R. Doelling, Takmeng Wong, Brian J. Soden & Graeme L. Stephens, 2012: Observed changes in top-of-the-atmosphere radiation and upper-ocean heating consistent within uncertainty. Nature Geoscience doi:10.1038/ngeo1375.

In the recent RealClimate post “Global warming and ocean heat content” (3 Oct 2011) you discussed Meehl et al. 2011:

G.A. Meehl, J.M. Arblaster, J.T. Fasullo, A. Hu, and K.E. Trenberth, “Model-based evidence of deep-ocean heat uptake during surface-temperature hiatus periods”, Nature Climate Change, vol. 1, 2011, pp. 360-364. DOI.

Now, posters here at RealClimate in the past told me that the difference is a quibble. Yet, it appears from the abstracts that this is no mere quibble – one team is defending a TOA imbalance of 1 W m-2 and the other team is arguing that the TOA imbalance is only 0.5 W m-2. That’s half of what Meehl, Trenberth, Fasullo and others claim.

I have not read the Loeb et al. study but I would guess that they take into consideration the most recent estimates of OHC to 2000m and the abyssal depths.

My understanding is that the TOA imbalance is related to the climate sensitivity, and I recall reading that a TOA imbalance of 0.85 W m-2 corresponds to a climate sensitivity of 3 K per doubling of CO2.

So I am confused by the impression that despite a possible lowering of TOA imbalance, the predictions were right anyway. Surely, if there has been a lowering of the TOA imbalance from 1 W m-2 to 0.5 W m-2 it would mean earlier projections based on the higher assumed TOA imbalance would be biased high. Why does this not show up in this analysis? Am I misunderstanding something?

[Response: My assessment is that we can’t give a highly precise estimate of the TOA imbalance from observations yet. The evidence (from OHC increases) is definitely that it has been positive and likely somewhere around 0.5 to 1 W/m2, but the satellites are not precise enough to get any closer, and the estimated values from the GCMs depends on a) aerosol forcing (uncertain), and b) ocean heat uptake (which despite the OHC estimates, is still uncertain enough to matter). There will be a lot more on this over the next few months… – gavin]

Barry, #24:

I had a look at the SkS page on predictions Lindzen allegedly made in 1989.

Dana claims to have “reconstructed” Lindzen’s predictions from comments he made at an MIT tech talk in 1989.

Here is what Prof. Lindzen actually said: http://www.fortfreedom.org/s46.htm

In reality, the closest thing to a “prediction” about the future in Lindzen’s talk is the statement, “I personally feel that the likelihood over the next century of greenhouse warming reaching magnitudes comparable to natural variability seems small”.

By “reconstructed”, it is apparent that Dana really means he “made it up”. To be clear, Dana’s purple line attributed to Lindzen in Fig. 2 is complete fiction.

Eh? Dana drew a ‘natural variation’ line, and Dana said there:

“The lone exception in Figure 2 is Lindzen’s, which we reconstructed from comments he made in a 1989 MIT Tech Talk, but which is not a prediction he made himself.”

Dana linked to the same TechTalk transcript that Alex H. points to.

Draw a projection of “natural variation” within historical limits — draw any line you like with that constraint and it should show about the same amount of variability as that purple line — different dates for the bumps and grinds, but about the same ups and downs overall.

The thing about a projection is — you make it up, based on stated constraints.

IPCC AR3 wg1 gives:

which is simple enough that it is useful for people to get an intuitive feel for what changes in CO2 concentrations do. This parameterization appears well-validated in the literature for CO2 concentrations up to about 600ppm. (the formula comes from Hansen 1998, which uses a simplified version, derived from Lacis & Oinas 1991) Does anybody know how skillful it is for higher CO2 concentrations?
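The formula itself did not survive in the comment above. One widely used simplified expression, listed in the IPCC TAR WG1 report, is ΔF = 5.35 ln(C/C0) W m-2. Assuming that is the kind of parameterization meant, the sketch below evaluates it. It uses a preindustrial C0 of 280 ppm as an assumed default. It then scales the result linearly to an equilibrium warming for an assumed sensitivity per doubling, which is a deliberate simplification.

```python
import math


def co2_forcing(c_ppm, c0_ppm=280.0, alpha=5.35):
    """Simplified CO2 radiative forcing in W m-2: alpha * ln(C/C0)."""
    return alpha * math.log(c_ppm / c0_ppm)


def equilibrium_warming(c_ppm, sensitivity_per_doubling=3.0, c0_ppm=280.0):
    """Equilibrium warming (K), assuming it scales linearly with this forcing."""
    return sensitivity_per_doubling * co2_forcing(c_ppm, c0_ppm) / co2_forcing(2 * c0_ppm, c0_ppm)
```

With these defaults, a doubling to 560 ppm gives about 3.7 W m-2, and `equilibrium_warming(560)` returns the assumed 3 K by construction.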

Hey Gavin,

if you sort/rank gistemp from highest to lowest, isn’t it interesting that for the last 25 warmest years (2010, 2005, 2007, 1998, etc.) there is very little variability with the vast majority of those warmest years being sampled from the last 25 years, with all the last 25 warmest years being sampled within the last 30 years. If you graph the yearly rank, the last 25 warmest years seem to be the exception rather than the rule with regards to where they are sampled recently or not.

Is that something to do with data quality, global warming becoming more frequent, etc.? The rapid warming in the last 25 years? Is that sustainable given what gistemp shows prior to 1986?

Hank Roberts,

I don’t want to hijack the thread but your comment is misleading. Dana presented two figures comparing actual projections with non-existent “Lindzen projections” labeled “Lindzen 1” and “Lindzen 2” which he made up himself. These are then plotted beside actual projections that the reader ought to understand were made by Hansen’s GCM, and there is nothing in the legend to suggest they were not projections from a model that Lindzen used. Thus, the reader ought to assume from the figure that Lindzen also built a model in 1989 and “projected” it forwards into the future. To say that Dana’s graphs compare apples with oranges is to rather understate the matter.

But if you are satisfied with Dana’s explanation in the article – leaving aside the reality that many readers will not read it or if they do read it may not understand how scientifically inappropriate it is – does that mean you are also happy with Dana’s use of the term “reconstruction”? Is this really a “reconstruction” in any sense of the word – or did he just draw a wavy-line and attribute it as a prediction to Lindzen? If it is not truly a “reconstruction” do you think it’s possible the reader may be thereby confused by what a “reconstruction” really is?

Barry #24, thanks. I hadn’t seen those before. In the sense of Hank’s #28, I’m sure Dana’s hypothetical Lindzen forecast is illustrative, but I have doubts about the climate-sensitivity argument, and one has to use the subjunctive mood an awful lot when talking about “reconstructing” a forecast someone might have made. The point I’ll take home, I think, is that the skeptics failed to make testable forecasts.

Alex @ 27

Thanks for the heads up, but Dana beat you to the punch.

“I want to be explicit that these projections are my interpretation of Lindzen’s comments, not Lindzen’s own projections.”

He caveats similarly throughout the article.

Lindzen’s ‘prediction’, which has been fairly consistent over the preceding 20 years, is that any warming from CO2 will likely barely rise above natural variation.

Further to my earlier post (#19), I decided to have a go myself at comparing observed and projected precipitation anomalies. You can see the results on Climate Opinions at http://www.climatedata.info

Gavin,

I really like to see these types of articles. It is very interesting to hear what informed opinion thinks about the data compared to the models.

Ari and Alex: Skeptical Science has tried to compare skeptics’ predictions to what has really happened. They have a lot of trouble because the skeptics rarely make any predictions (Lindzen has never made a testable prediction of what he expects). Should we say the skeptics are never wrong since they never make predictions? Perhaps we can agree they were not skillful in their predictions, since those predictions do not exist. There are a number of scientific predictions that SkS has assessed.

When I look at the gray bands for the ensemble model projections, it seems to me that a model could be created with temperatures declining to an anomaly of 0 or less, with a gray band that easily contains the actual recorded temperatures.

Doesn’t this really mean there is too much uncertainty to say whether global warming is continuing or not?

[Response: what it means is that short-term trends are not predictive of longer term ones, and that the focus on what happened since 2002 or 1998 instead of over longer periods is misplaced. – gavin]
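To see why short-term trends say little about longer-term ones, here is a toy simulation, assuming a hypothetical constant underlying trend of 0.17 ºC/decade plus white noise with a standard deviation of 0.1 ºC (real internal variability such as ENSO is autocorrelated, which would widen the short-window spread further). It fits an ordinary least-squares trend to many synthetic 14-year and 30-year windows and compares how widely the fitted trends scatter.

```python
import numpy as np

def ols_trend_per_decade(years, values):
    """Ordinary least-squares slope, converted from per-year to per-decade."""
    slope_per_year = np.polyfit(years, values, 1)[0]
    return 10.0 * slope_per_year

def trend_spread(window, n_realizations=2000, trend=0.017, noise_sd=0.1, seed=0):
    """Fitted trends (ºC/decade) for many synthetic series of length `window`.

    trend and noise_sd are hypothetical illustration values, in ºC/yr and ºC.
    """
    rng = np.random.default_rng(seed)
    years = np.arange(window, dtype=float)
    trends = np.empty(n_realizations)
    for i in range(n_realizations):
        # Same forced trend every time; only the white-noise "weather" differs.
        series = trend * years + rng.normal(0.0, noise_sd, window)
        trends[i] = ols_trend_per_decade(years, series)
    return trends

short = trend_spread(14)
long_ = trend_spread(30)
print("14-yr trends: mean %.3f, sd %.3f" % (short.mean(), short.std()))
print("30-yr trends: mean %.3f, sd %.3f" % (long_.mean(), long_.std()))
```

Both window lengths recover the same average trend, but the 14-year fits scatter roughly three times as widely, so a single short window can land well away from the underlying rate.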

#27–Wow.

Alex, what that link shows is that Dr. Lindzen was wrong then. The issues that he refers to in the first sections have pretty much been resolved, unfavorably for the argument that he was making. The warming signal has emerged from the natural variability. Warming has continued. Ocean warming has been conclusively demonstrated.

I leave most comment on his criticisms of the models to others more knowledgeable about the history, but I don’t think many of the criticisms have much bite today.

I can see why Dana would link to this piece; harder to understand is why you do.

Isotopious@30, Want to try that again in English?

Alex,

One thing you need to understand about SKS is that their purpose is to “debunk” anything that does not conform to their perception about global warming. In this frame, it is not surprising that Dana would present his explanation in such a way.

All biases aside, one could compare Hansen’s projections with natural variations alone. In 1988, Hansen predicted that CO2 emissions would increase at a rate of 1.5% per year if continued unchecked. Since the actual increase has been slightly higher than that (closer to 2%), even this scenario could be considered conservative. The Scenario A temperature increase through 2011 amounts to ~0.33C/decade. The actual rate of increase has been 0.16C/decade. Compare that to Dana’s plot of a natural fluctuation of 0 (not necessarily the correct value for solar, oceanic, and volcanic forcings) based on Lindzen’s statements. They are both off by approximately the same value.

It is a stretch, to say the least, to claim that Hansen’s projections were correct and Lindzen’s were wrong.

[Response: “all biases aside”! Oh the irony. – gavin]

#38–

Well, it was “Englishy.”

“[Response: what it means is that short-term trends are not predictive of longer term ones, and that the focus on what happened since 2002 or 1998 instead of over longer periods is misplaced. – gavin]”

I wonder how many more thousands of times it will be necessary to repeat this? Or is that even the right order of magnitude?

Dan H. wrote: “One thing you need to understand about SKS is that their purpose is to ‘debunk’ anything that does not conform to their perception about global warming.”

No, actually the purpose of SkepticalScience is to expose the deliberate and repetitious falsehoods, distortions, and misrepresentations of deniers like yourself.

It’s actually an interesting exercise to take the claims made in any of your posts here and check them out on SkepticalScience, where one finds that they are plainly false, and also frequently repeated by denialists.

Which, of course, you already know.

I suppose the moderators tolerate your comments here because you are polite and sound “reasonable” and post “sciencey-sounding” stuff.

Which is all well and good. But there’s still no reason to pretend your comments are anything but disingenuous, dishonest and — when it comes to your accusations of “bias” — hypocritical sophistry.

CM #22: I don’t know about back then, but the denial game is about predicting anything but what’s inconvenient to your cause. A couple of years back I had someone in the letters page of an Australian paper switch his position from “sunspots explain all climate variability” to “sunspots are unreliable as a predictor”. The same paper, infamous for its war on science, has published an article predicting that the current solar low presages an ice age. Easy when you have no self-esteem.

Dan H.@39,

E pur si cresce! (And yet it grows!)

I wonder if the collapse of the global economy has anything to do with the SLIGHTLY lower rise relative to the IPCC ensemble prediction.

Ray,

Is that a reference to Galileo?

Thank you. This is good.

Hey Gavin. Thanks for this post. Any idea why Andrew Revkin is saying that these results suggest climate sensitivity has been overestimated?

http://twitter.com/#!/Revkin/status/168038256146006016

Thanks!

[Response: I saw Andy’s tweet and tweeted a reply almost instantly (MichaelEMann). Andy subsequently tweeted a correction. It was an honest error that was quickly corrected. -mike]

#39–“In 1988, Hansen predicted. . .”

Oh, really? It’s my understanding that he offered up three emissions scenarios, without predicting which would come about.

“They are both off by approximately the same value.”

Oh, really? Weird logic to say that .16 C per decade is no more different from 0 C than from .33 C. And why would you use the Scenario A projection when we know that the real-world forcings are closest to the Scenario B numbers? Sure, the increase in CO2 concentration was closest to A, but all forcings are closer to B. Isn’t it ‘skeptics’ who like to emphasize–usually, that is!–that it isn’t only CO2 that affects temperatures?

And by the way, for those who must be wondering what all this refers to, you can find the article referenced here:

http://skepticalscience.com/lindzen-illusion-2-lindzen-vs-hansen-1980s.html

Nice to see this yearly post.

I think an honest question would be: “What would it take for you to seriously consider that climate sensitivity (CS) looks too high given the current model performance?”

From an engineering perspective, that looks likely to be the case, with the trend, such as it is, looking set to exit the 95% thresholds in only a decade or so. The 15-year “magic” threshold is not too far away. Not very encouraging.

It is also unclear to me why the 95% thresholds are drawn as they are. It would seem to me they should start at the date of measured data (2000) as zero offset and cone out from there as time increases. This seemed like a “cheat” in a way.

[Response: If all the models were initialised with 2000 temperatures, that would be more sensible, but since each model has a different phase of all the internal oscillations at any one point, it makes more sense to baseline over a period long enough to reduce that effect. However, I’ve previously shown this similarly to the way you suggest – for instance here – and it doesn’t really change the picture. – gavin]