Well, it’s not really all about me. But methane has figured strongly in a couple of stories recently and gets an apparently-larger-than-before shout-out in Al Gore’s new book as well. Since a part of the recent discussion is based on a paper I co-authored in Science, it is probably incumbent on me to provide a little context.

First off, these latest results are being strongly misrepresented in certain quarters. It should be obvious, but still bears emphasizing, that redistributing the historic forcings between various short-lived species and CH4 is mainly an accounting exercise and doesn’t impact the absolute effect attributed to CO2 (except for a tiny impact of fossil-derived CH4 on the fossil-derived CO2). The headlines that stated that our work shows a bigger role for CH4 should have made it clear that this is at the expense of other short-lived species, not CO2. Indeed, the attribution of historical forcings to CO2 that we made back in 2006 is basically the same as it is now.


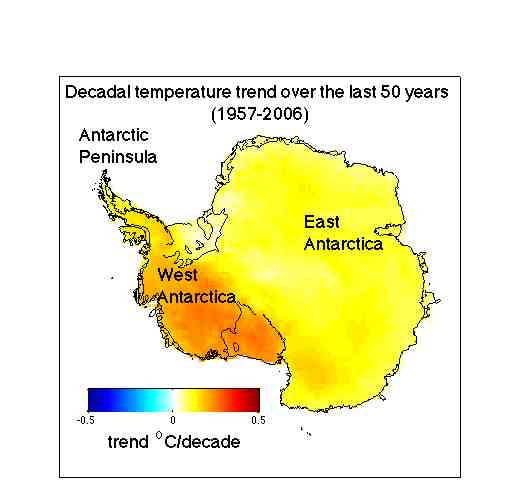

The paper shows that Antarctica has been warming for the last 50 years, and that it has been warming especially in West Antarctica (see the figure). The results are based on a statistical blending of satellite data and temperature data from weather stations. The results don’t depend on the statistics alone. They are backed up by independent data from automatic weather stations, as shown in our paper as well as in updated work by Bromwich, Monaghan and others (see their AGU abstract,
