Annual updates to the model-observation comparisons for 2023 are now complete. The comparisons encompass surface air temperatures, mid-troposphere temperatures (global and tropical, and ‘corrected’), sea surface temperatures, and stratospheric temperatures. In almost every case, the addition of the 2023 numbers was in line with the long-term expectation from the models.

[Read more…] about Not just another dot on the graph? Part II

Model-Obs Comparisons

The Scafetta Saga

It has taken 17 months to get a comment published pointing out the obvious errors in the Scafetta (2022) paper in GRL.

Back in March 2022, Nicola Scafetta published a short paper in Geophysical Research Letters (GRL) purporting to show through ‘advanced’ means that ‘all models with ECS > 3.0°C overestimate the observed global surface warming’ (as defined by ERA5). We (me, Gareth Jones and John Kennedy) wrote a note up within a couple of days pointing out how wrongheaded the reasoning was and how the results did not stand up to scrutiny.

[Read more…] about The Scafetta Saga

References

- N. Scafetta, "Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m", Geophysical Research Letters, vol. 49, 2022. http://dx.doi.org/10.1029/2022GL097716

Some new CMIP6 MSU comparisons

We add some of the CMIP6 models to the updateable MSU [and SST] comparisons.

After my annual update, I was pointed to some MSU-related diagnostics for many of the CMIP6 models (24 of them at least) from Po-Chedley et al. (2022), courtesy of Ben Santer. These are slightly different from what we have shown for CMIP5 in that the diagnostic is the tropical corrected-TMT (following Fu et al., 2004), which is a better representation of the mid-troposphere than the classic TMT diagnostic because it applies an adjustment using the lower stratosphere record.
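For orientation, the corrected diagnostic is commonly written as a weighted combination of the TMT series and the lower-stratosphere (TLS) series. The weight a = 1.1 shown here is the value usually quoted for the tropics in work following Fu et al.; it is not given in the text above, so treat it as an assumption rather than the exact form used in these comparisons.

```latex
\mathrm{TMT}_{\mathrm{corr}} = a\,\mathrm{TMT} + (1 - a)\,\mathrm{TLS}, \qquad a = 1.1
```

With that weight, the correction amounts to 1.1 × TMT − 0.1 × TLS, which removes part of the stratospheric cooling signal that leaks into the TMT channel.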

References

- S. Po-Chedley, J.T. Fasullo, N. Siler, Z.M. Labe, E.A. Barnes, C.J.W. Bonfils, and B.D. Santer, "Internal variability and forcing influence model–satellite differences in the rate of tropical tropospheric warming", Proceedings of the National Academy of Sciences, vol. 119, 2022. http://dx.doi.org/10.1073/pnas.2209431119

- Q. Fu, C.M. Johanson, S.G. Warren, and D.J. Seidel, "Contribution of stratospheric cooling to satellite-inferred tropospheric temperature trends", Nature, vol. 429, pp. 55-58, 2004. http://dx.doi.org/10.1038/nature02524

2022 updates to model-observation comparisons

Our annual post on the comparisons between long-standing records and climate models.

As frequent readers will know, we maintain a page of comparisons between climate model projections and the relevant observational records, and since they are mostly for the global mean numbers, these get updated once the temperature products get updated for the prior full year. This has now been completed for 2022.

[Read more…] about 2022 updates to model-observation comparisons

Issues and Errors in a new Scafetta paper

Earlier this week, a new paper appeared in GRL by Nicola Scafetta (Scafetta, 2022) which purported to conclude that the CMIP6 models with medium or high climate sensitivity (higher than 3ºC) were not consistent with recent historical temperature changes. Since there have been a number of papers already on this topic, notably Tokarska et al (2020), which did not come to such a conclusion, it is worthwhile to investigate where Scafetta’s result comes from. Unfortunately, it appears to emerge from a mis-appreciation of what is in the CMIP6 archive, an inappropriate statistical test, and a total neglect of observational uncertainty and internal variability.

[Read more…] about Issues and Errors in a new Scafetta paper

References

- N. Scafetta, "Advanced Testing of Low, Medium, and High ECS CMIP6 GCM Simulations Versus ERA5‐T2m", Geophysical Research Letters, vol. 49, 2022. http://dx.doi.org/10.1029/2022GL097716

- K.B. Tokarska, M.B. Stolpe, S. Sippel, E.M. Fischer, C.J. Smith, F. Lehner, and R. Knutti, "Past warming trend constrains future warming in CMIP6 models", Science Advances, vol. 6, 2020. http://dx.doi.org/10.1126/sciadv.aaz9549

Another dot on the graphs (Part II)

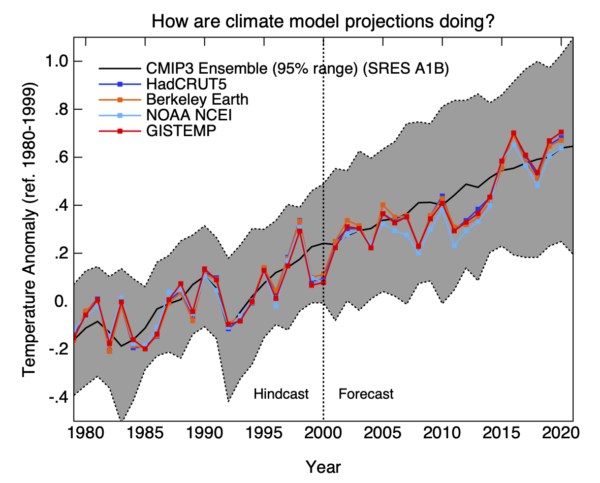

We have now updated the model-observations comparison page for the 2021 SAT and MSU TMT datasets. Mostly this is just ‘another dot on the graphs’ but we have made a couple of updates of note. First, we have updated the observational products to their latest versions (i.e. HadCRUT5, NOAA-STAR 4.1 etc.), though we are still using NOAA’s GlobalTemp v5 – the Interim version will be available later this year. Secondly, we have added a comparison of the observations to the new CMIP6 model ensemble.

[Read more…] about Another dot on the graphs (Part II)

Update day 2021

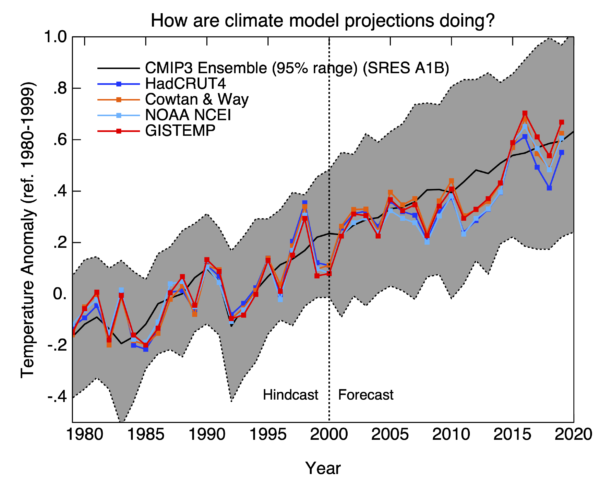

As is now traditional, every year around this time we update the model-observation comparison page with an additional annual observational point, and upgrade any observational products to their latest versions.

A couple of notable issues this year. HadCRUT has now been updated to version 5 which includes polar infilling, making the Cowtan and Way dataset (which was designed to address that issue in HadCRUT4) a little superfluous. Going forward it is unlikely to be maintained so, in a couple of figures, I have replaced it with the new HadCRUT5. The GISTEMP version is now v4.

For the comparison with Hansen et al. (1988), we only had the projected output up to 2019 (taken from fig 3a in the original paper). However, it turns out that fuller results were archived at NCAR, and now they have been added to our data file (and yes, I realise this is ironic). This extends Scenario B to 2030 and Scenario A to 2060.

Nothing substantive has changed with respect to the satellite data products, so the only change is the addition of 2020 in the figures and trends.

So what do we see? The early Hansen models have done very well considering the uncertainty in total forcings (as we’ve discussed (Hausfather et al., 2019)). The CMIP3 model estimates of SAT, forecast from ~2000, continue to be astoundingly on point. This must be due (in part) to luck, since the spread in forcings and sensitivity in the GCMs is somewhat ad hoc (given that the CMIP simulations are ensembles of opportunity), but it is nonetheless impressive.

The forcings spread in CMIP5 was more constrained, but had some small systematic biases, as we’ve discussed (Schmidt et al., 2014). The systematic issue associated with the forcings and the more general issue of the target diagnostic (whether we use SAT or a blended SST/SAT product from the models) give rise to small effects (roughly 0.1ºC and 0.05ºC respectively) that are independent and additive.

The discrepancies between the CMIP5 ensemble and the lower atmospheric MSU/AMSU products are still noticeable, but remember that we still do not have a ‘forcings-adjusted’ estimate of the CMIP5 simulations for TMT, though work with the CMIP6 models and forcings to address this is ongoing. Nonetheless, the observed TMT trends are very much on the low side of what the models projected, even while stratospheric and surface trends are much closer to the ensemble mean. There is still more to be done here. Stay tuned!

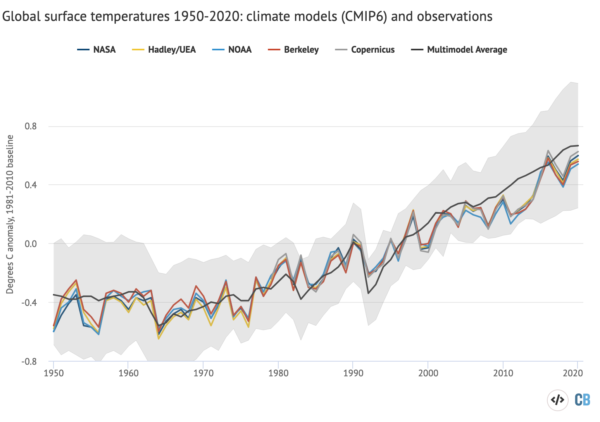

The results from CMIP6 (which are still being rolled out) are too recent to be usefully added to this assessment of forecasts right now, though some compilations have now appeared:

The issues in CMIP6 related to the excessive spread in climate sensitivity will need to be looked at in more detail moving forward. In my opinion, ‘official’ projections will need to weight the models to screen out those ECS values outside of the constrained range. We’ll see if others agree when the IPCC report is released later this year.
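As a rough illustration of what such screening could look like, here is a minimal sketch that applies a simple 0/1 weight to each model depending on whether its ECS falls inside a chosen range. The model names, ECS values, warming numbers and the 2.5–4.0ºC range are all made up for the example; they are not taken from CMIP6 or from any assessment.

```python
# Hypothetical models: name -> (ECS in degC, projected warming in degC).
# All values are invented for illustration only.
models = {
    "model_A": (2.1, 1.6),
    "model_B": (3.0, 2.0),
    "model_C": (3.7, 2.3),
    "model_D": (4.8, 2.9),
    "model_E": (5.5, 3.2),
}

# Assumed 'constrained' ECS range (illustrative, not an official range).
ECS_RANGE = (2.5, 4.0)


def screened_mean(models, ecs_range):
    """Mean projected warming using weight 1 inside the ECS range, 0 outside."""
    lo, hi = ecs_range
    kept = [name for name, (ecs, _) in models.items() if lo <= ecs <= hi]
    mean = sum(models[name][1] for name in kept) / len(kept)
    return kept, mean


# Unweighted mean over every model, for comparison.
all_mean = sum(warming for _, warming in models.values()) / len(models)
kept, kept_mean = screened_mean(models, ECS_RANGE)

print(f"all models:      {all_mean:.2f} degC")
print(f"screened models: {kept_mean:.2f} degC ({', '.join(kept)})")
```

A smoother weighting (for instance, one that declines with distance from the constrained range) would be a natural alternative to this hard cut-off.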

Please let us know in the comments if you have suggestions for improvements to these figures/analyses, or suggestions for additions.

References

- Z. Hausfather, H.F. Drake, T. Abbott, and G.A. Schmidt, "Evaluating the Performance of Past Climate Model Projections", Geophysical Research Letters, vol. 47, 2020. http://dx.doi.org/10.1029/2019GL085378

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

Update day 2020!

Following more than a decade of tradition (at least), I’ve now updated the model-observation comparison page to include observed data through to the end of 2019.

As we discussed a couple of weeks ago, 2019 was the second warmest year in the surface datasets (with the exception of HadCRUT4), and 1st, 2nd or 3rd in satellite datasets (depending on which one). Since this year was slightly above the linear trends up to 2018, it slightly increases the trends up to 2019. There is an increasing difference in trend among the surface datasets because of the polar region treatment. A slightly longer trend period additionally reduces the uncertainty in the linear trend in the climate models.
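The effect of one extra year on a linear trend can be seen in a small sketch. It uses synthetic anomalies (not any real dataset) and an ordinary least-squares fit that assumes independent residuals; real annual temperatures are autocorrelated, so actual trend uncertainties are wider than this simple estimate suggests. The function name and the numbers are illustrative.

```python
import numpy as np


def ols_trend(years, temps):
    """Ordinary least-squares fit; returns (slope, intercept, slope standard error)."""
    x = np.asarray(years, dtype=float)
    y = np.asarray(temps, dtype=float)
    slope, intercept = np.polyfit(x, y, 1)
    resid = y - (slope * x + intercept)
    # Standard error of the slope, assuming independent residuals.
    se = np.sqrt(np.sum(resid**2) / (len(x) - 2) / np.sum((x - x.mean()) ** 2))
    return slope, intercept, se


# Synthetic anomalies (NOT real data): a 0.018 degC/yr trend plus a fixed wiggle.
years = np.arange(1979, 2019)
temps = 0.018 * (years - 1979) + 0.1 * np.sin(years - 1979)

slope_old, intercept_old, se_old = ols_trend(years, temps)

# Hypothetical 2019 value sitting 0.05 degC above the extrapolated trend line.
temp_2019 = slope_old * 2019 + intercept_old + 0.05
slope_new, _, se_new = ols_trend(np.append(years, 2019), np.append(temps, temp_2019))

print(f"trend to 2018: {slope_old * 10:.3f} +/- {se_old * 10:.3f} degC/decade")
print(f"trend to 2019: {slope_new * 10:.3f} +/- {se_new * 10:.3f} degC/decade")
```

Because the added point lies above the previous trend line and at the end of the record, the fitted slope goes up, and in this example the longer record also gives a slightly smaller standard error.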

To summarize, the 1981 prediction from Hansen et al (1981) continues to underpredict the temperature trends due to an underestimate of the transient climate response. The projections in Hansen et al. (1988) bracket the actual changes, with the slight overestimate in scenario B due to the excessive anticipated growth rate of CFCs and CH4 which did not materialize. The CMIP3 simulations continue to be spot on (remarkably), with the trend in the multi-model ensemble mean effectively indistinguishable from the trends in the observations. Note that this doesn’t mean that CMIP3 ensemble means are perfect – far from it. For Arctic trends (incl. sea ice) they grossly underestimated the changes, and overestimated them in the tropics.

The CMIP5 ensemble mean global surface temperature trends slightly overestimate the observed trend, mainly because of a short-term overestimate of solar and volcanic forcings that was built into the design of the simulations around 2009/2010 (see Schmidt et al. (2014)). This is also apparent in the MSU TMT trends, where the observed trends (which themselves have a large spread) are at the edge of the modeled histogram.

A number of people have remarked over time on the reduction of the spread in the model projections in CMIP5 compared to CMIP3 (by about 20%). This is due to a wider spread in forcings used in CMIP3 – models varied enormously on whether they included aerosol indirect effects, ozone depletion and what kind of land surface forcing they had. In CMIP5, most of these elements had been standardized. This reduced the spread, but at the cost of underestimating the uncertainty in the forcings. In CMIP6, there will be a more controlled exploration of the forcing uncertainty (but given the greater spread of the climate sensitivities, it might be a minor issue).

Over the years, the model-observations comparison page has regularly been in the top ten of viewed pages on RealClimate, so it obviously fills a need. We’ll continue to keep it updated, and perhaps expand it over time. Please leave suggestions for changes in the comments below.

References

- J. Hansen, D. Johnson, A. Lacis, S. Lebedeff, P. Lee, D. Rind, and G. Russell, "Climate Impact of Increasing Atmospheric Carbon Dioxide", Science, vol. 213, pp. 957-966, 1981. http://dx.doi.org/10.1126/science.213.4511.957

- J. Hansen, I. Fung, A. Lacis, D. Rind, S. Lebedeff, R. Ruedy, G. Russell, and P. Stone, "Global climate changes as forecast by Goddard Institute for Space Studies three‐dimensional model", Journal of Geophysical Research: Atmospheres, vol. 93, pp. 9341-9364, 1988. http://dx.doi.org/10.1029/JD093iD08p09341

- G.A. Schmidt, D.T. Shindell, and K. Tsigaridis, "Reconciling warming trends", Nature Geoscience, vol. 7, pp. 158-160, 2014. http://dx.doi.org/10.1038/ngeo2105

How good have climate models been at truly predicting the future?

A new paper from Hausfather and colleagues (incl. me) has just been published with the most comprehensive assessment of climate model projections since the 1970s. Bottom line? Once you correct for small errors in the projected forcings, they did remarkably well.

[Read more…] about How good have climate models been at truly predicting the future?

Update day

So Wednesday was temperature series update day. The HadCRUT4, NOAA NCEI and GISTEMP time-series were all updated through to the end of 2018 (slightly delayed by the federal government shutdown). Berkeley Earth and the MSU satellite datasets were updated a couple of weeks ago. And that means that everyone gets to add a single additional annual data point to their model-observation comparison plots!