What do you need to know about climate in order to be in the best position to adapt to future change? This question was discussed at a European workshop on Copernicus climate services, held during a heatwave in Barcelona, Spain (June 12-14).

Snow Water Ice and Permafrost and Adaptive Actions for a Changing Arctic

The Arctic is changing fast, and the Arctic Council recently commissioned the Arctic Monitoring and Assessment Programme (AMAP) to write two new reports on the state of the Arctic cryosphere (snow, water, and ice) and how the people and the ecosystems in the Arctic can live with these changes.

The two reports have just been published and are called Snow Water Ice and Permafrost in the Arctic Update (SWIPA-update) and Adaptive Actions for a Changing Arctic (AACA).

Predictable and unpredictable behaviour

Terms such as “gas skeptics” and “climate skeptics” aren’t really very descriptive, but they refer to sentiments that have something in common: unpredictable behaviour.

The true meaning of numbers

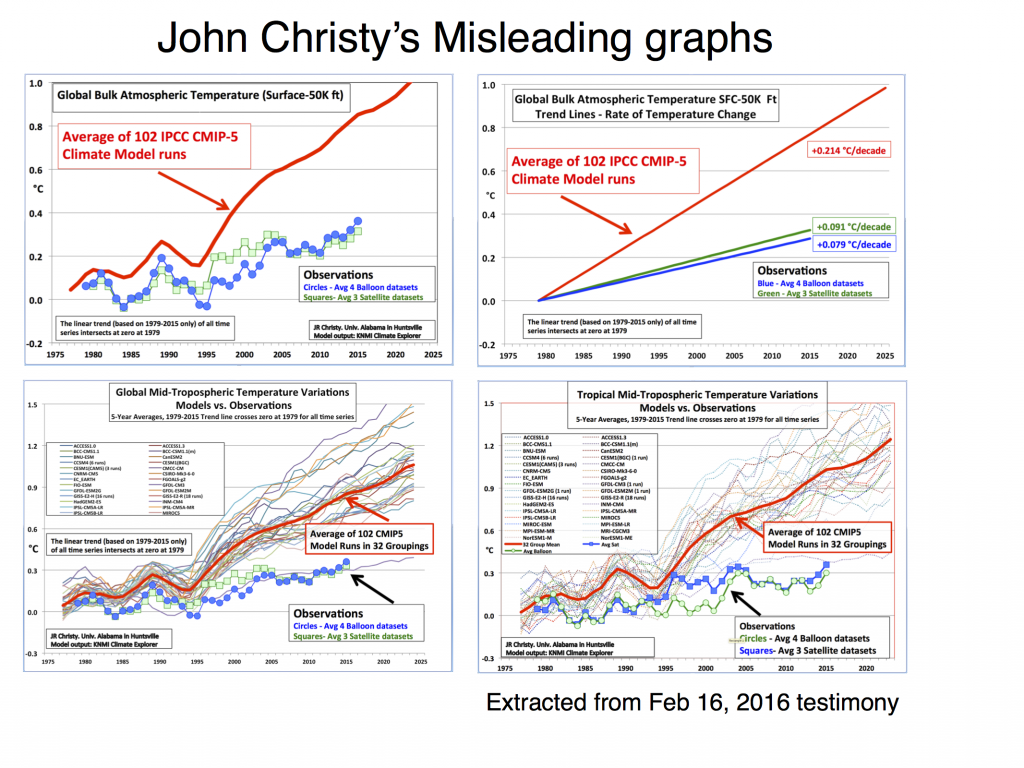

Gavin already discussed John Christy’s misleading graph earlier in 2016. Since the end of 2016, however, there has been a surge of interest in this graph in Norway among people who try to diminish the role of anthropogenic global warming.

I think this graph warrants some extra comments in addition to Gavin’s points, because it is flawed on more counts than those he has already discussed. In fact, those using this graph to judge climate models reveal an elementary lack of understanding of climate data.

New report: Climate change, impacts and vulnerability in Europe 2016

Another climate report is out – what’s new? Many of the previous reports have presented an updated status of the climate and familiar topics such as temperature, precipitation, ice, snow, wind, and storm activity.

The latest report, Climate change, impacts and vulnerability in Europe 2016 from the European Environment Agency (EEA), also includes an assessment of hail, a weather phenomenon that is often associated with lightning (a previous report from EASAC from 2013 also covers hail).

Until now there has not been a lot of information about hail, but that is improving. Even so, the jury is still out when it comes to hail and climate change:

Despite improvements in data availability, trends and projections of hail events are still uncertain.

There was no pause

I think that the idea of a pause in global warming has been a red herring ever since it was suggested, and we have commented on this several times here on RC, for example on how data gaps in some regions (e.g. the Arctic) may explain an underestimation of the recent warming. We have also explained how natural oscillations may give the impression of a faux pause. Now that we know the global mean temperature for 2016, it’s even more obvious.

Easterling and Wehner (2009) explained that it is not surprising to see some brief periods with an apparent decrease in a temperature record that increases in jumps and spurts. In a later paper, Foster and Rahmstorf (2011) showed that temperature data from the most important observational records show consistent global warming trends when known short-term influences such as the El Niño–Southern Oscillation (ENSO), volcanic aerosols and solar variability are accounted for.
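
To make the point about brief periods of apparent cooling concrete, here is a minimal sketch using purely synthetic numbers: a steady warming trend plus one idealised oscillation. All the values (`TREND`, `AMPLITUDE`, `PERIOD`, `WINDOW`) are hypothetical and the oscillation is deliberately exaggerated. This is not the method or the data of any of the papers mentioned here, just a toy showing that short windows can have a negative least-squares slope while the long-term trend stays positive.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    return num / den


# Hypothetical, illustrative numbers (not fitted to any real dataset).
TREND = 0.017     # steady warming, degrees C per year
AMPLITUDE = 0.2   # size of the idealised oscillation, degrees C
PERIOD = 10.0     # oscillation period, years
WINDOW = 5        # length of each short window, years

# Synthetic 40-year "temperature" record: linear trend plus a sine wave.
years = list(range(40))
temps = [TREND * t + AMPLITUDE * math.sin(2 * math.pi * t / PERIOD)
         for t in years]

# Trend over the whole record versus trends over every short window.
long_term = ols_slope(years, temps)
window_slopes = [
    ols_slope(years[i:i + WINDOW], temps[i:i + WINDOW])
    for i in range(len(years) - WINDOW + 1)
]
cooling_windows = sum(1 for s in window_slopes if s < 0)

print(f"40-year trend: {long_term:.4f} C/yr")
print(f"{WINDOW}-year windows with a negative trend: "
      f"{cooling_windows} of {len(window_slopes)}")
```

Changing `WINDOW` to a longer span should make the negative slopes rarer, which is the same reason short stretches of a real record are a poor guide to the underlying trend.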

A recent paper by Hausfather et al. (2017) adds little new to our understanding, although it confirms that there has not been a recent “hiatus” in global warming. However, if there are doubts about a physical condition, then further scientific research is our best option for establishing the facts. This is exactly what this recent study did.

The latest findings confirm the results of Karl et al. (2015) from the National Oceanic and Atmospheric Administration (NOAA), which Gavin described in a previous post here on RC. The NOAA analysis received unusual attention because of the harassment it drew from the chair of the US House Science Committee and the subpoena demand for emails.

References

- D.R. Easterling, and M.F. Wehner, "Is the climate warming or cooling?", Geophysical Research Letters, vol. 36, 2009. http://dx.doi.org/10.1029/2009GL037810

- G. Foster, and S. Rahmstorf, "Global temperature evolution 1979–2010", Environmental Research Letters, vol. 6, pp. 044022, 2011. http://dx.doi.org/10.1088/1748-9326/6/4/044022

- Z. Hausfather, K. Cowtan, D.C. Clarke, P. Jacobs, M. Richardson, and R. Rohde, "Assessing recent warming using instrumentally homogeneous sea surface temperature records", Science Advances, vol. 3, 2017. http://dx.doi.org/10.1126/sciadv.1601207

- T.R. Karl, A. Arguez, B. Huang, J.H. Lawrimore, J.R. McMahon, M.J. Menne, T.C. Peterson, R.S. Vose, and H. Zhang, "Possible artifacts of data biases in the recent global surface warming hiatus", Science, vol. 348, pp. 1469-1472, 2015. http://dx.doi.org/10.1126/science.aaa5632

A trigger action from sea-level rise?

Can a rising sea level act as a boost for glaciers calving into the sea and trigger a surge of ice into the oceans? I finally got round to watching the documentary Chasing Ice over the Christmas and New Year’s break, and it made a big impression. It also left me with this question.

Climatology and meteorology are your friends

The Norwegian Meteorological Institute has celebrated its 150th anniversary this year. It was founded to provide weather data and tentative warnings to farmers, sailors, and fishermen. Norwegian climatology began in the mid-1800s with studies of geographical climatic variations, aimed at adapting important infrastructure to the ambient climate. The purpose of meteorology and climatology was to protect lives and property.

What has science done for us?

Where would we be without science? Today, we live longer than ever before according to the Royal Geographical Society, thanks to pharmaceutical, medical, and health science. Vaccines save many lives. Physics and electronics have given us satellites, telecommunications, and the Internet. You would not be reading this blog without them. Chemistry and biology have provided us with all sorts of products and food, and enabled the agricultural (“green”) revolution that enhanced our crop yields. The science of evolution and natural selection explains the character of ecosystems, and modern meteorology saves lives and helps us safeguard our property.

What is new in European climate research?

What did I learn from the 2016 annual European Meteorological Society (EMS) conference, which was hosted in Trieste (Italy) last week?
